Leaderboard

Popular Content

Showing content with the highest reputation since 11/23/2025 in Posts

-

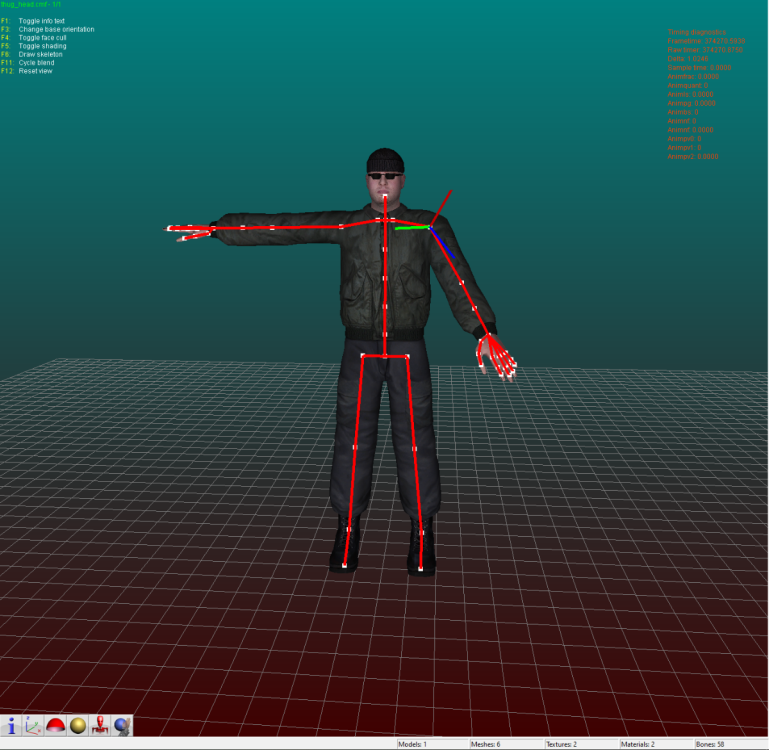

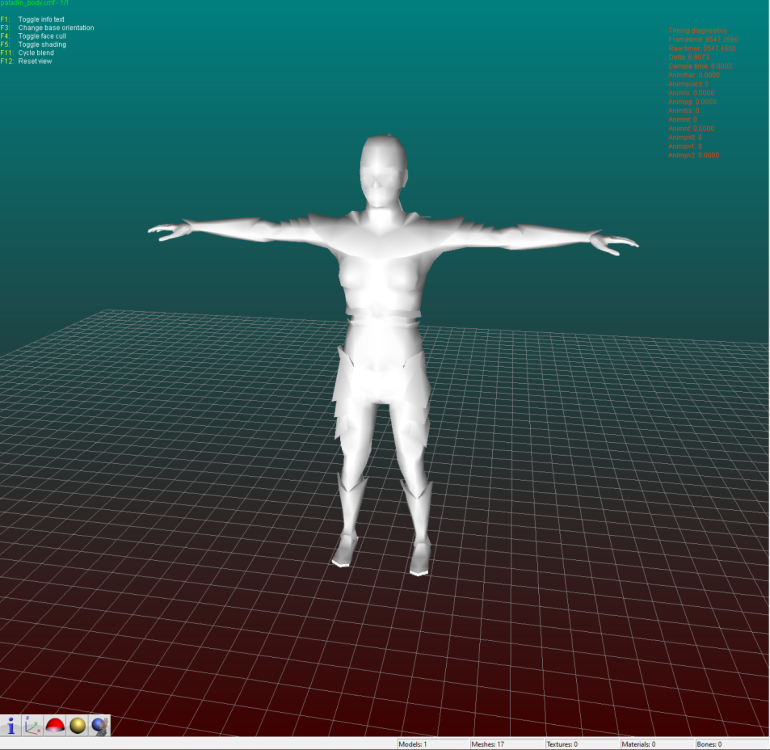

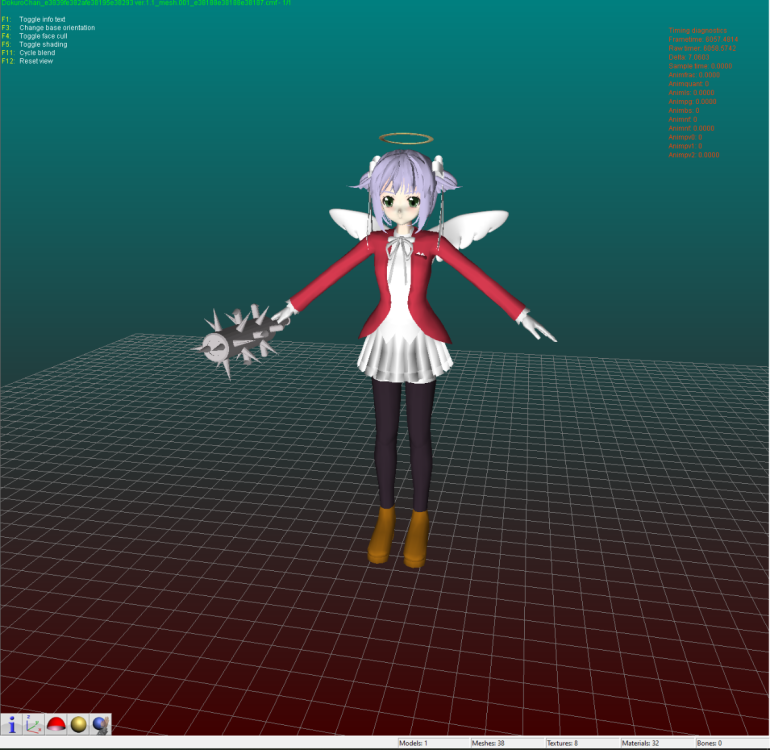

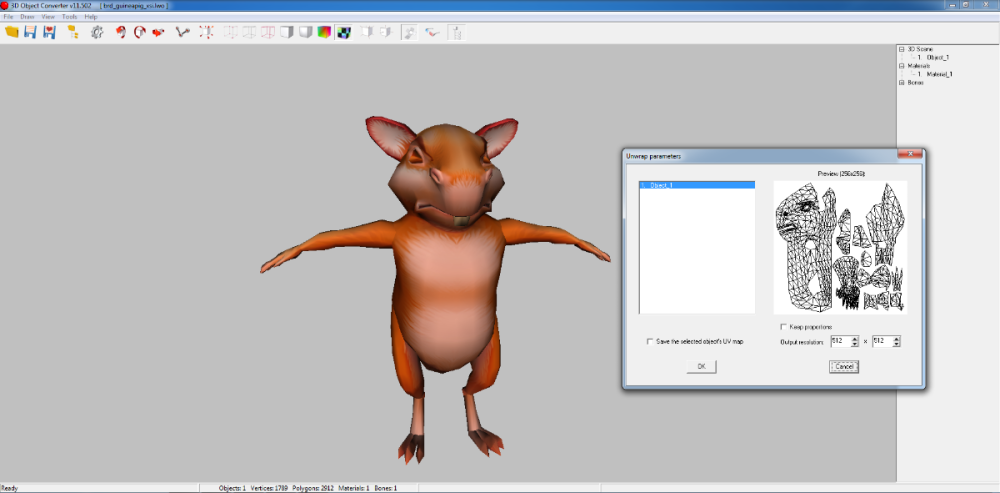

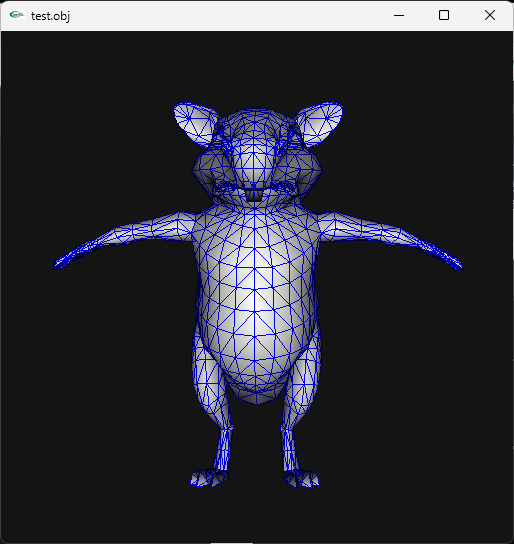

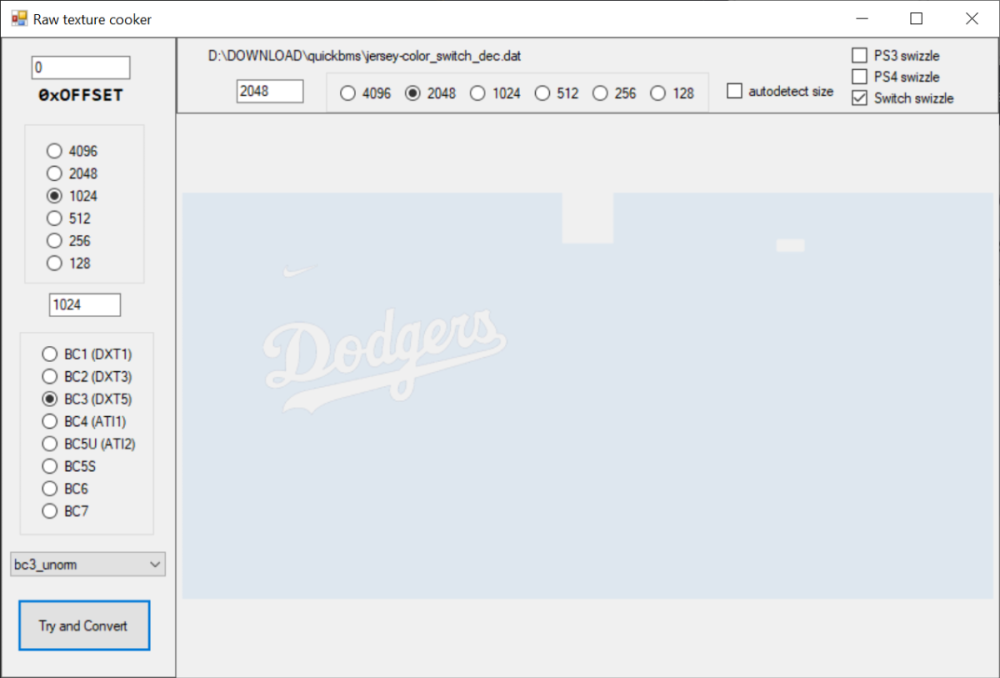

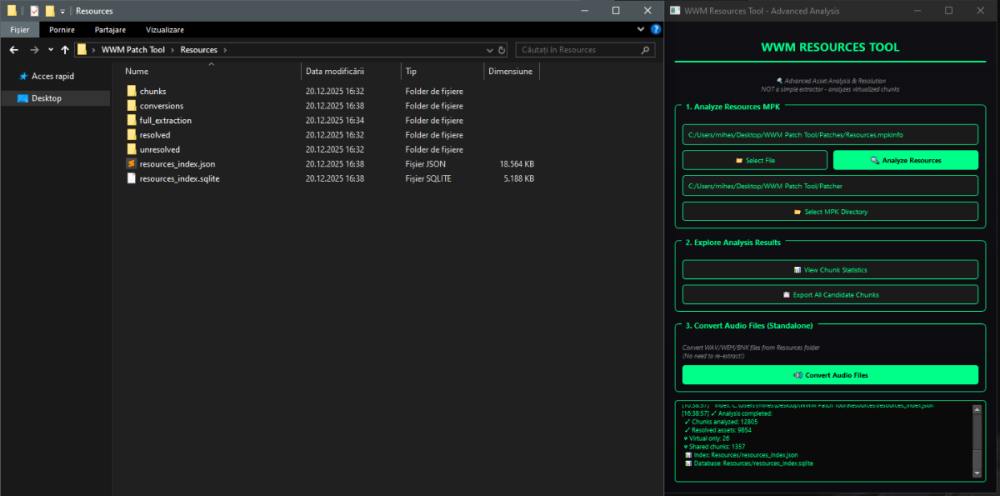

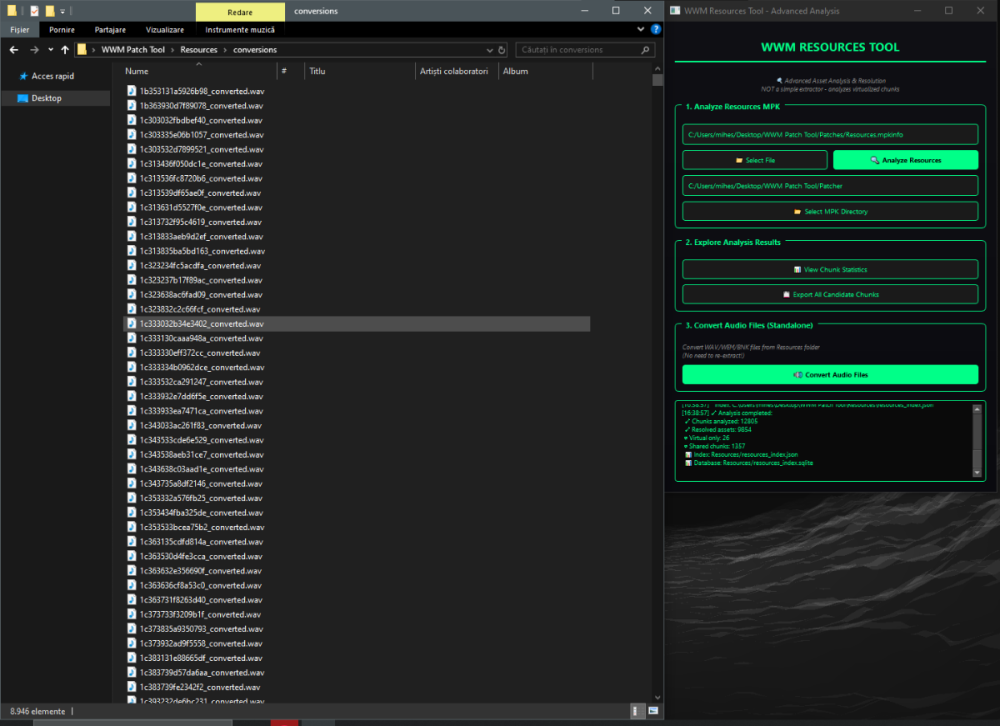

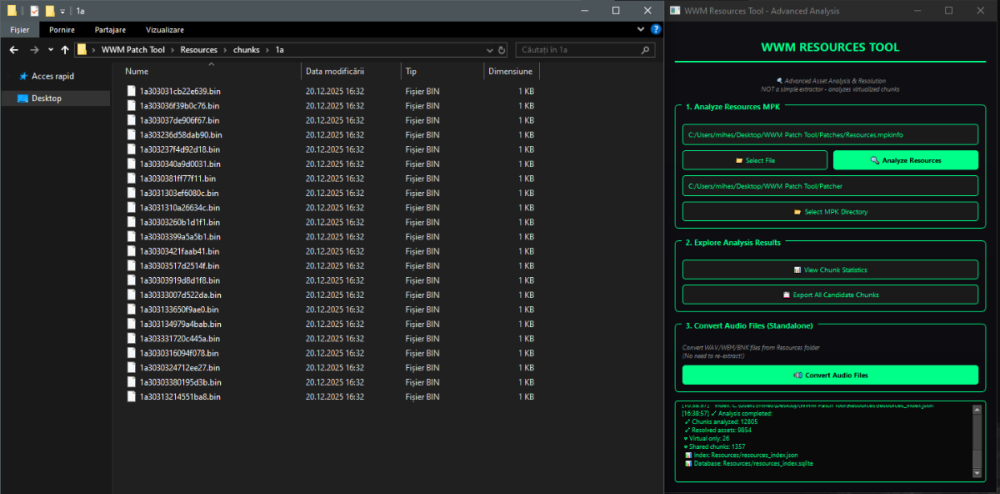

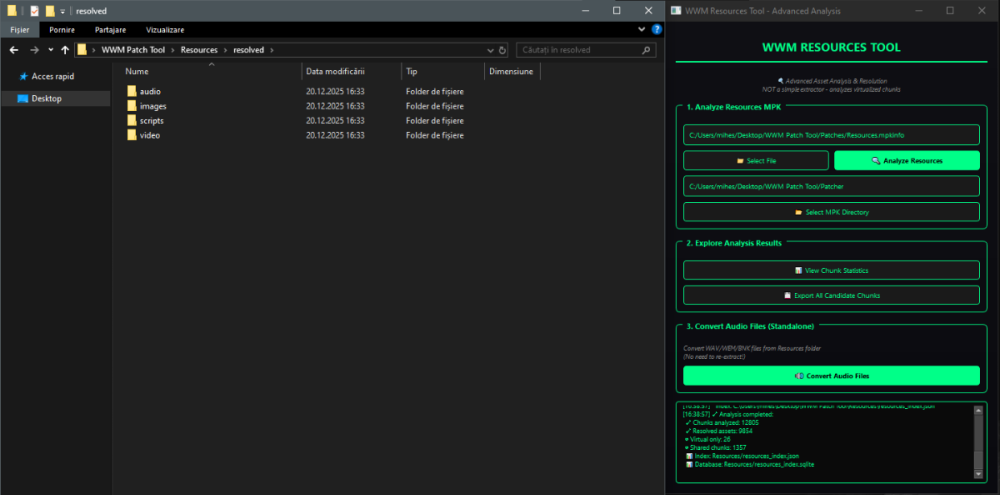

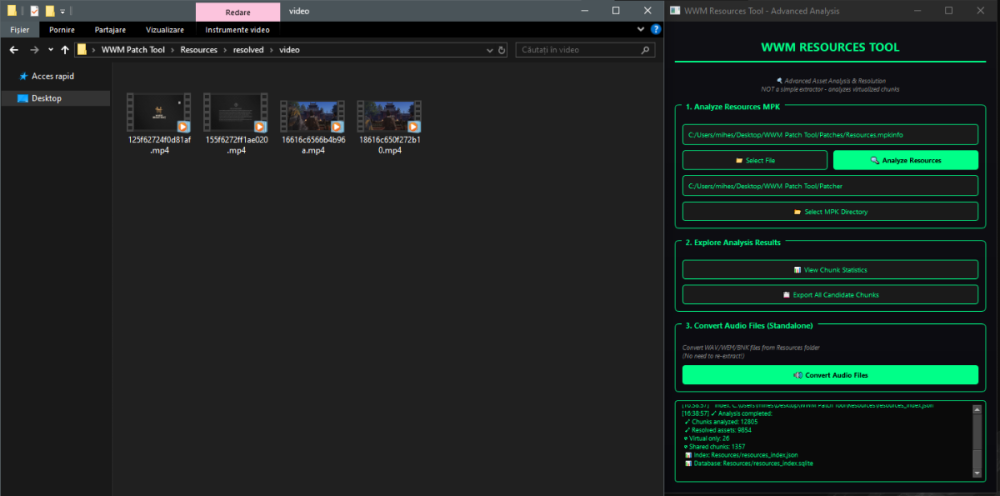

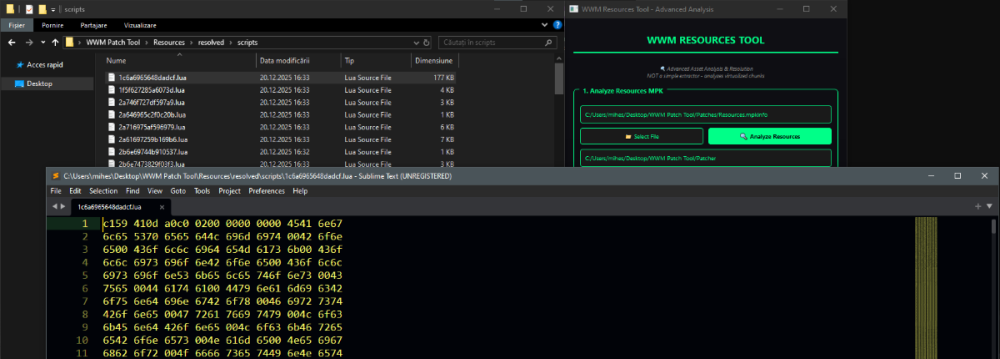

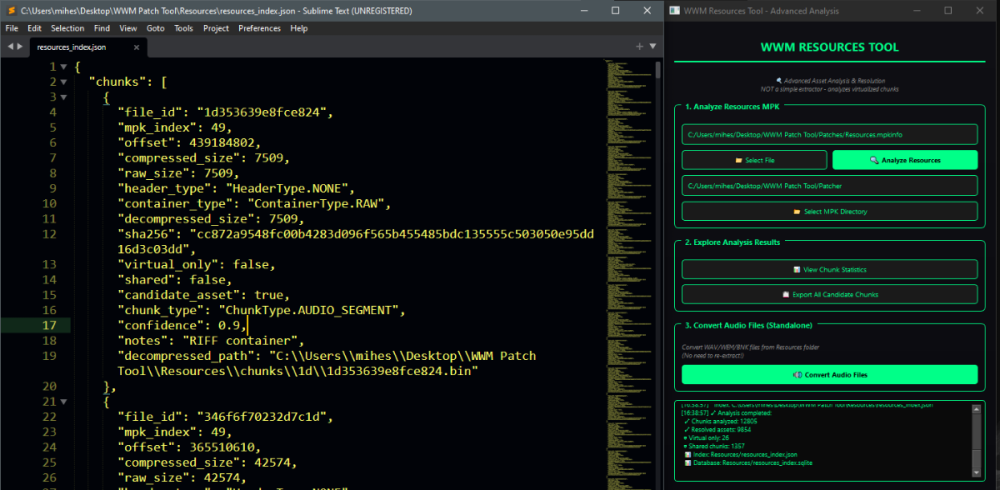

Author: Myro
Scope: Educational & research-oriented reverse engineering
Status: Ongoing research

Introduction

This thread documents an ongoing technical research project focused on analyzing the MPK container system used by Where Winds Meet (WWM). The goal is to understand the structure, behavior, and data flow of the game's asset containers from a reverse-engineering and archival perspective, strictly for educational and analytical purposes. No proprietary assets, binaries, or tools will be distributed as part of this work.

1. Patch*#.mpk vs Resource*#.mpk: Structural Similarity, Semantic Differences

At a container level, Patch*#.mpk and Resource*#.mpk share the same base format:

- Indexed via mpkinfo / mpkdb
- Entries contain: FileID, Size, Offset, MpkIndex
- Use the same compression stack:
  - LZ4 / ZSTD / LZMA
  - EZST (AES-encrypted)

However, despite this structural similarity, their extraction semantics differ significantly.

2. Patch*#.mpk: Partial Transparency, Direct Asset Access

Patch MPKs behave primarily as delta / override containers:

- Assets retain near-original offsets
- Internal file structures remain largely recognizable
- Minimal indirection compared to Resource MPKs

As a result:

- ~99% of audio assets can be recovered successfully
- A subset of Lua scripts becomes readable after decompression
- Non-audio assets (including some images) are present and partially accessible

Testing on Patch3100.mpk confirmed that Patch archives are not limited to sound assets.

Conclusion: Patch MPKs prioritize update efficiency and fast access, with limited transformation of payload data.

3. Resource*#.mpk: Indirection and Shared Data

Resource MPKs represent the primary asset pool and introduce several additional complexities:

- The Size field often represents a logical or expanded size, not the physically stored payload
- Naïve extraction pipelines tend to:
  - materialize padding and alignment blocks
  - duplicate shared data blobs
  - inflate archive size artificially (e.g. ~2 GB → tens of GB)

This behavior explains why Resource MPKs appear far more "opaque" when extracted without validation.

Important observations:

- Fixed headers such as 02 02 02 01 … frequently represent logical structures (e.g. texture containers with multiple mip blocks)
- Headers of the form size (4 bytes) + size × 20 bytes often describe lookup tables, not standalone assets
- Many assets are shared, aliased, or referenced indirectly

Conclusion: While Patch and Resource MPKs share the same container format, they do not share the same extraction semantics. Resource MPKs require strict validation, deduplication, and asset-type verification to avoid false positives.

4. Audio Extraction & Tooling

Initial testing with Ravioli Game Tools proved sub-optimal for this pipeline:

- Inconsistent parsing of Patch audio assets
- Unreliable WAV output in multiple cases

Switching to vgmstream yielded significantly better results:

- Correct parsing of recovered audio data
- Clean, fully functional WAV output
- Proper handling of codec structures

Current result: Audio extraction and conversion are fully verified and stable.

5. Resource.mpk Extraction: Current Progress

Testing on Resources49.mpk (~740.9 MB) produced the following results:

Successfully extracted:

- Audio assets → converted to valid .wav files
  - Over 8,500 audio files identified in a single Resource MPK
- Video files (.mp4) → fully playable
- Lua scripts (.lua) → extracted but still encrypted / obfuscated

Unresolved:

- Files detected as images (.png, .jpg, .bmp)
  - Headers contain the string "messiah"
  - Strongly suggests custom packing, encryption, or misclassification
  - Currently not valid image data despite file extensions

6. Current Status

✔ All Patch*#.mpk, Resource*#.mpk, and lt*.mpk archives can be unpacked
✔ Audio assets fully recovered and validated
✔ MP4 video assets fully playable
✔ Lua scripts extracted but not yet readable

7. Next Steps

Ongoing research will focus on:

- Reversing additional transformation layers in Resource*#.mpk
- Identifying:
  - secondary encryption stages
  - compression chaining
  - block or stream reordering
- Investigating the custom image container format
- Further analysis of Lua encryption / obfuscation
- Automating Patch vs Resource handling as two distinct pipelines

Notes on Tooling & Distribution

All tools used in this project are:

- developed entirely from scratch
- created specifically for WWM research
- used strictly for educational purposes

At this stage:

- no tools, binaries, or scripts will be released
- no proprietary assets will be redistributed

Closing

This thread is intended solely as a technical research and documentation log, not as a release, redistribution, or exploitation guide. In accordance with applicable intellectual property laws and forum policies, no tools, binaries, scripts, or extracted assets will be posted or shared, either publicly or privately. All game data, assets, file formats, and related materials discussed here remain the exclusive proprietary property of NetEase and the developers of Where Winds Meet. Any references to assets or file structures are made strictly for educational, analytical, and research purposes, with no intent to enable misuse, circumvention, or redistribution of protected content. Should any concerns arise regarding compliance or scope, I am fully open to cooperating with forum moderation and adjusting the visibility or content of this thread accordingly. Thank you for your understanding and for supporting responsible technical research.

Myro

Below are a few illustrative screenshots and log excerpts from the current stage of the research, provided solely to contextualize the findings outlined above.

3 points
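The validation and deduplication idea from section 3 can be sketched roughly as follows. This is only an illustration working on already-parsed index entries: the `MpkEntry` class, the `plan_extraction` function, and the `archive_sizes` mapping are hypothetical names, and nothing here reflects the actual on-disk layout of mpkinfo / mpkdb, which the post does not specify.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MpkEntry:
    """Hypothetical in-memory view of one index entry (fields named in the post)."""
    file_id: int
    size: int       # may be a logical size rather than the stored size
    offset: int
    mpk_index: int


def plan_extraction(entries, archive_sizes):
    """Split entries into unique payloads, aliases, and rejected entries.

    archive_sizes maps mpk_index -> archive length in bytes (assumed to be
    known from the file system). Entries whose range falls outside the
    archive are rejected instead of being padded out on disk.
    """
    seen = {}
    unique, aliases, rejected = [], [], []
    for entry in entries:
        archive_len = archive_sizes.get(entry.mpk_index)
        out_of_bounds = (
            archive_len is None
            or entry.offset < 0
            or entry.size <= 0
            or entry.offset + entry.size > archive_len
        )
        if out_of_bounds:
            rejected.append(entry)
            continue
        key = (entry.mpk_index, entry.offset)
        if key in seen:
            # Same physical location: record the alias, do not write it twice.
            aliases.append((entry, seen[key]))
        else:
            seen[key] = entry
            unique.append(entry)
    return unique, aliases, rejected
```

Keying on (MpkIndex, Offset) is just one plausible way to spot shared blobs; hashing the payload would catch duplicates stored at different offsets, at the cost of reading everything first.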

-

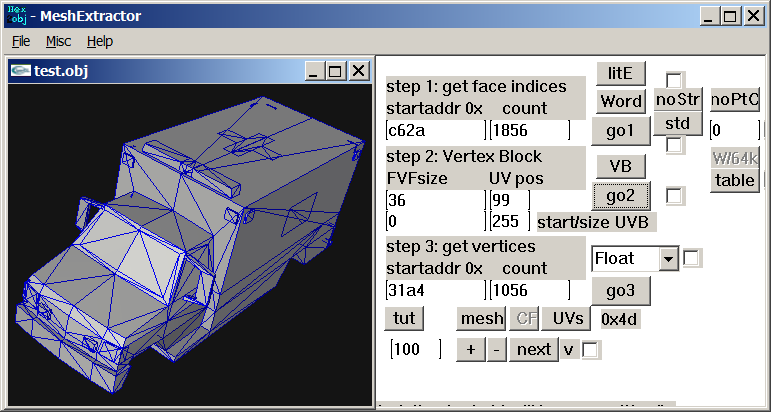

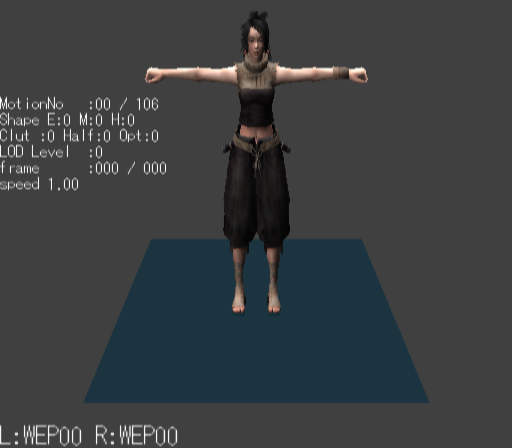

I don't know if there are more models in that unpacked folder; you'll need to check by examining each file there. Just remember that characters use shorts in the vertex buffer. I think I saw another file with floats, but maybe that file is not a character, or maybe it is a character stored with floats. I really don't know, lol. Here is the script if you want to test it: fmt_black_ps2_prototype_DB.py

3 points

-

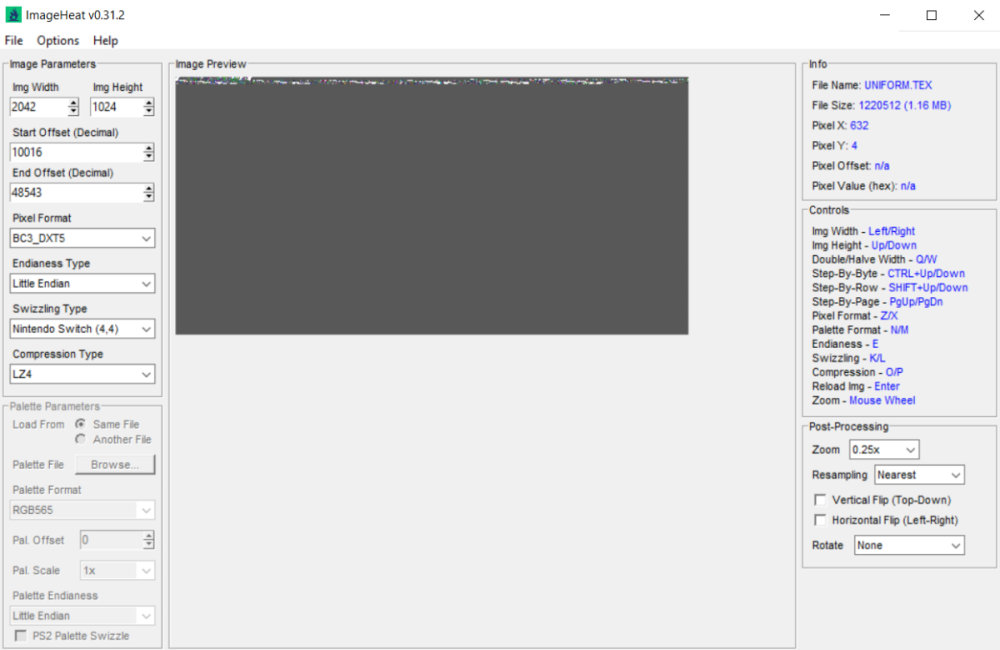

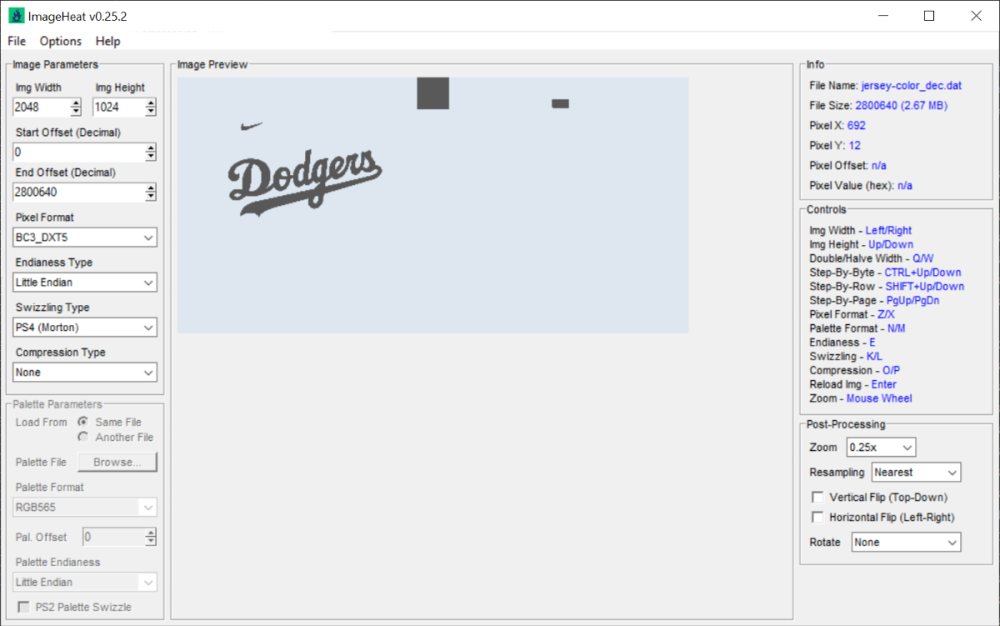

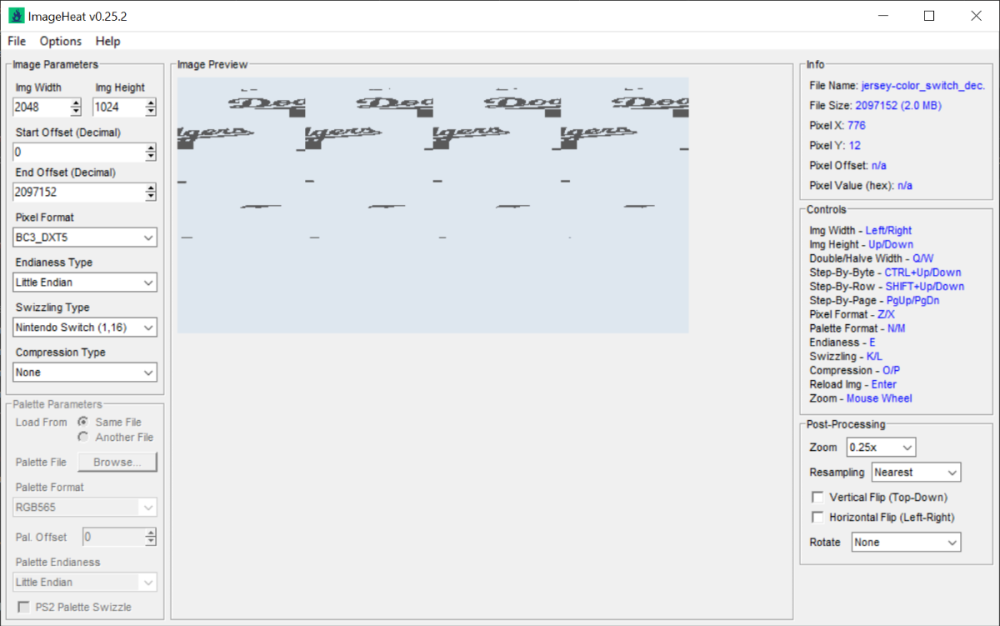

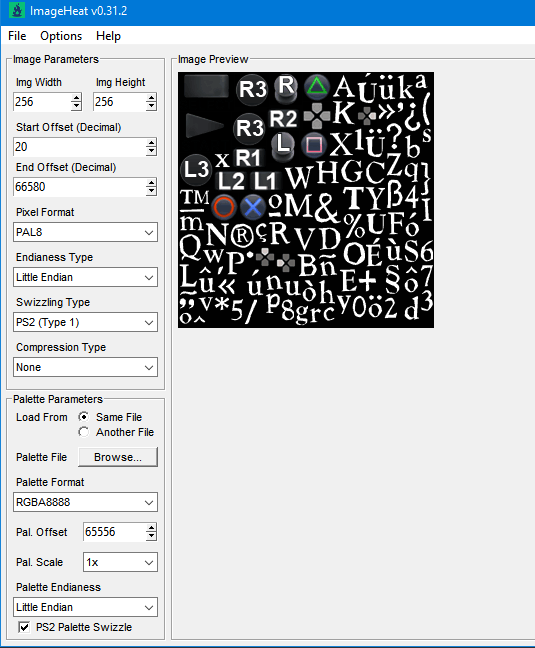

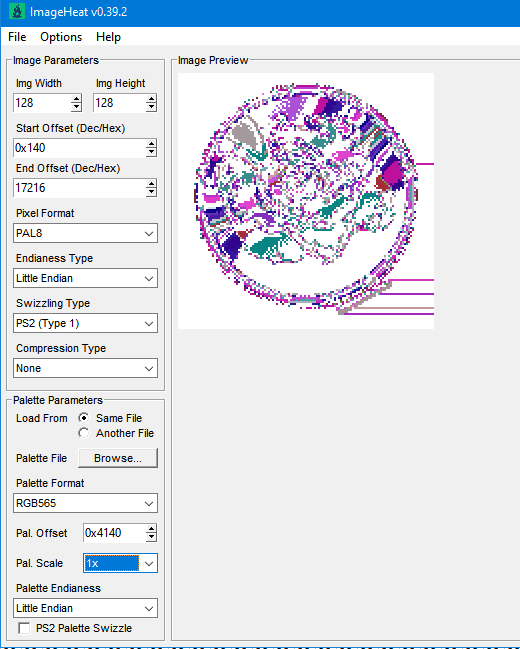

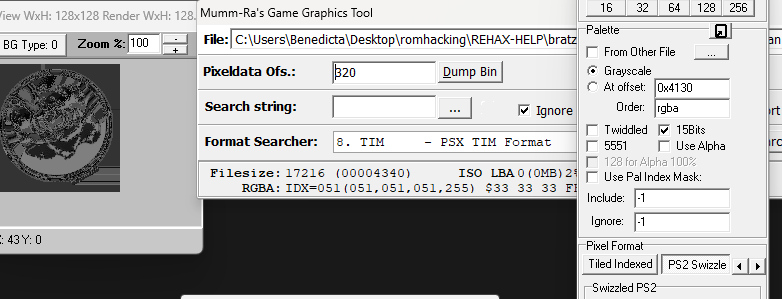

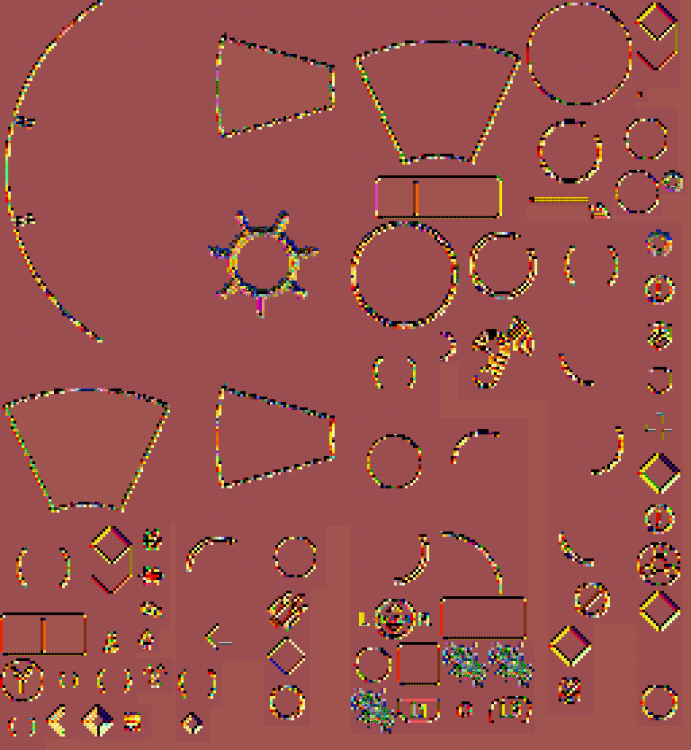

You'd be better off using ImageHeat, as it has more options for swizzling, etc. For these, the image is PS2 swizzled, but I can't work out the palette. It doesn't seem to use any of the standard PS2 palette formats, so maybe it uses a different swizzling method. This is from "t_compact_rockangelz_closed_00000003":

2 points

-

The "repeated offsets" pointing to new blocks indicate that the main BIGFILE.CAT is acting as a master container that holds smaller, self-contained archives inside it.

- The Master Index: Points to large chunks of data (e.g., "Level 1 Data", "Level 2 Data").
- The "New Block": When you go to that offset, you find a new header (signature 01 00 01 00).
- The Inner Index: This new header has its own list of files. Because this block is treated as a standalone file by the game engine once loaded, its offsets start at 0 (relative to the start of that block), not relative to the start of the whole disc.

    [ MASTER CAT (BIGFILE) ]
    |-- Header
    |-- Index Entry 1: Offset 1000 -> Points to "Level 1 Block"
    |-- Index Entry 2: Offset 5000 -> Points to "Level 2 Block"
    |
    |... [Data at Offset 1000] ...
    |
    +-> [ NESTED CAT (Level 1) ]
        |-- Header (starts at Master Offset 1000)
        |-- Index Entry A: Offset 10 (Absolute: 1010)
        |-- Index Entry B: Offset 50 (Absolute: 1050)
        |-- Data...

Why did developers do this? (The Logic)

This approach was necessary due to the hardware limitations of the PlayStation 1 (PS1):

- RAM Constraints: The PS1 has only 2MB of RAM. It cannot keep a massive table of thousands of file offsets in memory at all times.
- Modular Loading: The game loads the "Master Index" to find the location of the current level's data. It then streams that specific "Block" (Nested CAT) into memory.
- Relative Addressing: Once the "Block" is loaded into a specific memory address, the game engine reads the inner offsets. Since these offsets are relative to the start of the block (0), the engine can easily calculate memory pointers without needing to know where the block was originally located on the CD.

2 points
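For an extractor, the relative addressing described above boils down to adding the block's master offset to each inner offset. A minimal sketch, assuming the inner index has already been parsed elsewhere (the function names are made up for illustration, and the index layout itself is not covered here):

```python
def resolve_nested_offset(master_offset, inner_offset):
    """Turn a block-relative offset into an offset within BIGFILE.CAT."""
    return master_offset + inner_offset


def read_nested_file(bigfile, master_offset, inner_offset, size):
    """Slice one file out of a nested CAT block held in memory as bytes."""
    start = resolve_nested_offset(master_offset, inner_offset)
    end = start + size
    if start < 0 or end > len(bigfile):
        raise ValueError("entry points outside the container")
    return bigfile[start:end]


# The numbers from the diagram: a block at 1000 with inner offsets 10 and 50.
print(resolve_nested_offset(1000, 10), resolve_nested_offset(1000, 50))
```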

-

Here is the tool: PS2JunjouUnpacker-decompressor.zip

2 points

-

I've just released a new version of ImageHeat 🙂
https://github.com/bartlomiejduda/ImageHeat/releases/tag/v0.39.1

Changelog:
- Added new Nintendo Switch unswizzle modes (2_16 and 4_16)
- Added support for PSP_DXT1/PSP_DXT3/PSP_DXT5/BGR5A3 pixel formats
- Fixed issue with unswizzling 4-bit GameCube/WII textures
- Added support for hex offsets (thanks to @MrIkso )
- Moved image rendering logic to new thread (thanks to @MrIkso )
- Added Ukrainian language (thanks to @MrIkso )
- Added support for LZ4 block decompression
- Added Brazilian Portuguese language (thanks to @lobonintendista )
- Fixed ALPHA_16X decoding
- Adjusted GRAY4/GRAY8 naming
- Added support section in readme file

2 points

-

It's been a while since this topic went up, and I have found a way to deal with this:

- Step 1: Extract the .farc files into bin files, using either the tool mentioned in the first post of this thread or QuickBMS with the virtua_fighter_5 bms script I included in the zip file below.
- Step 2: Download Noesis and install the noesis-project-diva plugin (https://github.com/h-kidd/noesis-project-diva/tree/main , or from the included zip file) to view and extract the textures/models and use them in Blender or a 3D modeling software of your choice.

KancolleArcade.zip

2 points

-

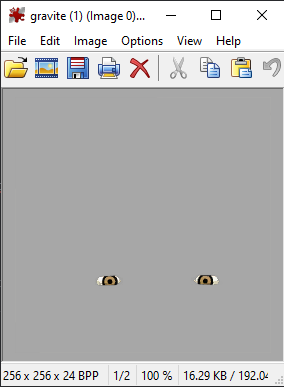

You can do it like this:

1. Open GFX file in hex editor (e.g. Hex Workshop)
2. Delete first 256 bytes
3. Save file
4. Change file extension to GXT
5. Convert GXT file with Scarlet https://github.com/xdanieldzd/Scarlet
   ScarletTestApp.exe "gravite (2).gxt"
6. You'll get PNG file as a result

P.S. Some files are not supported by Scarlet, so you can use ImageHeat for manual conversion https://github.com/bartlomiejduda/ImageHeat

1 point
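Steps 1 to 4 can also be scripted instead of done by hand in a hex editor. The sketch below is only a convenience wrapper around "drop the first 256 bytes and rename"; the function names are invented for illustration, and it does not check that the result is a valid GXT file.

```python
from pathlib import Path

GFX_HEADER_SIZE = 256  # number of leading bytes to drop, per step 2


def strip_gfx_header(data, header_size=GFX_HEADER_SIZE):
    """Return the file contents without the leading GFX wrapper bytes."""
    if len(data) <= header_size:
        raise ValueError("file is too small to contain a payload")
    return data[header_size:]


def gfx_to_gxt(src_path):
    """Write a .gxt copy next to the source file and return its path."""
    src = Path(src_path)
    dst = src.with_suffix(".gxt")
    dst.write_bytes(strip_gfx_header(src.read_bytes()))
    return dst
```

The resulting .gxt file can then be passed to Scarlet as in step 5.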

-

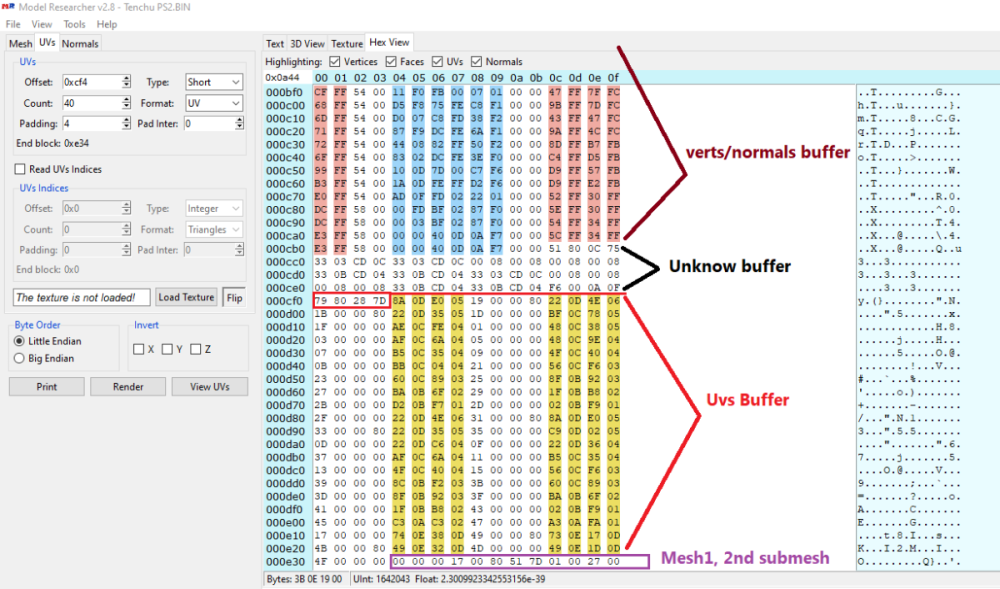

Wait, I think I did something wrong, lol. I can see another unknown block or buffer before the UVs. I thought that was the start of the UVs, but no! So the UVs have the same count as the verts after all.

The format is:
- verts/normals buffer: 3 shorts verts, 1 short flag, 3 shorts normals, 1 short flag
- unk buffer: ??
- UVs buffer: 2 shorts UVs, 4 bytes unk (maybe vertex colors?)

Well, it looks like that, but I am not sure.

For the meshes with floats, the format is:
- verts/normals buffer: 3 floats verts, 1 short flag, 1 short pad, 3 floats normals, 1 short flag, 1 short pad
- UVs buffer: 2 shorts UVs, 4 bytes unk (maybe vertex colors?)

So meshes with floats don't have that unk buffer. And as always, each buffer has a 4-byte tag. Here is the last part of the 1st submesh; you can see that unk buffer before the UVs:

1 point
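To make the short-based layout above concrete, here is a rough reading sketch. It assumes little-endian signed 16-bit values, positions and normals interleaved per vertex (16 bytes each), and a caller that already knows the vertex count and where the buffer starts after its 4-byte tag; none of that is confirmed, and no scale factor is applied to the raw shorts.

```python
import struct

SHORT_VERTEX_STRIDE = 16  # 3 shorts pos + flag + 3 shorts normal + flag


def parse_short_vertices(buf, count, offset=0):
    """Read `count` vertices as ((x, y, z), vflag, (nx, ny, nz), nflag)."""
    if offset + count * SHORT_VERTEX_STRIDE > len(buf):
        raise ValueError("buffer too small for the requested vertex count")
    vertices = []
    for i in range(count):
        x, y, z, vflag, nx, ny, nz, nflag = struct.unpack_from(
            "<8h", buf, offset + i * SHORT_VERTEX_STRIDE
        )
        vertices.append(((x, y, z), vflag, (nx, ny, nz), nflag))
    return vertices
```

The float variant would follow the same pattern with a 32-byte stride (format "<3f2h3f2h"), again assuming that interpretation of the layout is right.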

-

I suspect the tool would require some minor modification, but yes, more or less.

1 point

-

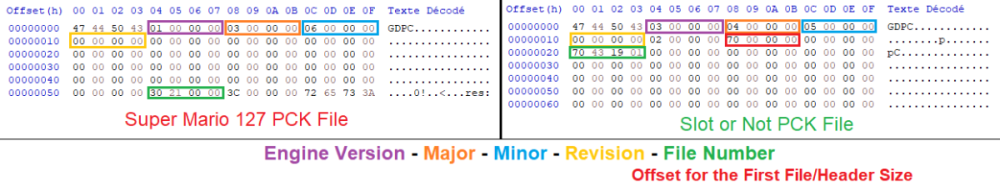

Hello! Out of boredom, I decided to try replacing a texture in a Godot game, in this case Slot or Not, using Python, but failing over and over again has exhausted me. My script replaces the data and its MD5 hash, and updates the data header. I suspect that either something in it doesn't work or there is other data somewhere that I'm missing. I also think that even if the WebP data from Godot and from PIL are both valid, they are encoded differently and are therefore incompatible. Even replacing them with PNG data doesn't work. I suspect there is a checksum for the PCK somewhere.

I compared its PCK file with Super Mario 127's. Although the WebP data for CTEX and STEX are the same, the data pointers in SM127 are at the start of the file, after the header; in SoN they are at the end. PCK V1 is documented on the Xentax wiki, but V3 is not. The difference may be small, but this comparison shouldn't be dismissed.

What I need:
- If there is a checksum for the PCK, where is it?
- The bytes leading to the data header, so the Python script can be made adaptable
- If the WebP data is the issue, a better understanding of Godot's WebP data

Feel free to reply if you find anything!

Slot or Not (PCK File V3): son PCK File.zip
Godot Picture Replacer Script (Work in progress): Godot Pictures Replacer Script.py

Note for the creator of Slot or Not: you don't have to turn it into a moddable game. The game needs its own resources to be a good game. I'm doing this just because, and for research purposes.

Knowledge

GODOT PCK FILE FORMAT (Little Endian) (From Slot or Not)

HEADER (112 Bytes)
4 Bytes = Signature ("GDPC")
4 Bytes = 03 00 00 00 (Engine Version)
4 Bytes = 04 00 00 00 (Major)
4 Bytes = 05 00 00 00 (Minor)
4 Bytes = 00 00 00 00 (Revision)
4 Bytes = 02 00 00 00
4 Bytes = 70 00 00 00 (First File Pointer)
4 Bytes = 00 00 00 00
4 Bytes = File Numbers
4 x 19 Bytes = 00s (Reserved)

DATA HEADER (Found at the last part of the file)
4 Bytes = Length of Path, Including the 00s
X Bytes = Path Name + 00s (If not divisible by 4)
8 Bytes = Data Pointer - 112
8 Bytes = File Size
16 Bytes = Data MD5 Checksum
4 Bytes = 00 00 00 00

GODOT CTEX FILE FORMAT (Little Endian)

For WebP Format {
4 Bytes = String GST2
4 Bytes = Use Alpha or Not (01 = Yes; 00 = No)
4 Bytes = Height
4 Bytes = Width
4 Bytes = 00 00 00 0D
4 Bytes = FF FF FF FF
4 x 3 Bytes = 00s (Reserved?)
4 Bytes = 02 00 00 00
2 Bytes = Height
2 Bytes = Width
4 Bytes = 00s
4 Bytes = 05 00 00 00
4 Bytes = Data Size after this Byte
4 Bytes = String RIFF
4 Bytes = WebP Data Size after this Byte
8 Bytes = WEBPVP8L / Tag for Lossless Encoded Image Data
4 Bytes = Data Size (or Data Size - 1 if WebP Chunk ends with 00)
(Rest of the File)
X Bytes = WEBP VP8L Chunk (In Python, the settings are Lossless = True, EXIF = False)
0-15 Bytes = 00 for Fill Up if the CTex Data Size isn't divisible by 16
}
[NOTE: You can get the WebP data by removing the GST2 Header.]

For PNG Format {
X Bytes = PNG File Data
0-15 Bytes = 00 for Fill Up if the CTex Data Size isn't divisible by 16
}

X Bytes = String for [Remap], Settings, Path, Texture, Vram
X Bytes = 00s (if the length of the String isn't divisible by 16)

1 point
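The header notes above translate fairly directly into a `struct` format string. The sketch below follows the field order exactly as listed in this post (nine 4-byte little-endian fields, then 76 reserved bytes); it is not taken from the Godot source, the dictionary keys are descriptive guesses, and the two unlabeled fields are kept as `unknown_*`. The MD5 helper only shows how a 16-byte digest for a replaced payload could be computed.

```python
import hashlib
import struct

PCK_HEADER_SIZE = 112  # 9 * 4 bytes of fields + 4 * 19 reserved bytes


def parse_pck_header(data):
    """Decode the 112-byte header using the layout noted in the post."""
    if len(data) < PCK_HEADER_SIZE:
        raise ValueError("not enough data for a PCK header")
    (signature, engine_version, major, minor, revision,
     unknown_14, first_file_pointer, unknown_1c, file_count) = struct.unpack_from(
        "<4s8I", data, 0
    )
    if signature != b"GDPC":
        raise ValueError("missing GDPC signature")
    return {
        "engine_version": engine_version,
        "major": major,
        "minor": minor,
        "revision": revision,
        "unknown_14": unknown_14,
        "first_file_pointer": first_file_pointer,
        "unknown_1c": unknown_1c,
        "file_count": file_count,
    }


def payload_md5(payload):
    """Return the 16-byte MD5 digest of a file payload."""
    return hashlib.md5(payload).digest()
```

If a replaced texture changes the payload size, the File Size field and every later Data Pointer would presumably need updating as well, not just the digest.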

-

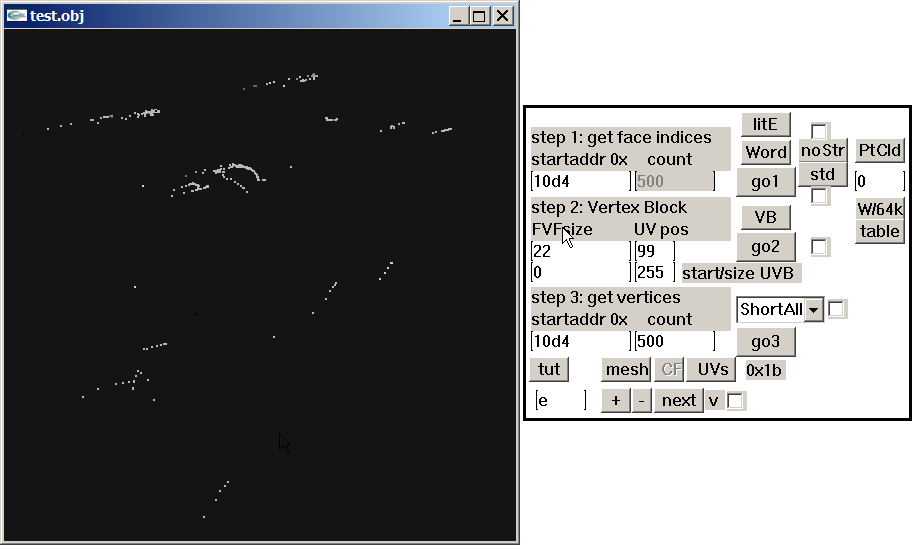

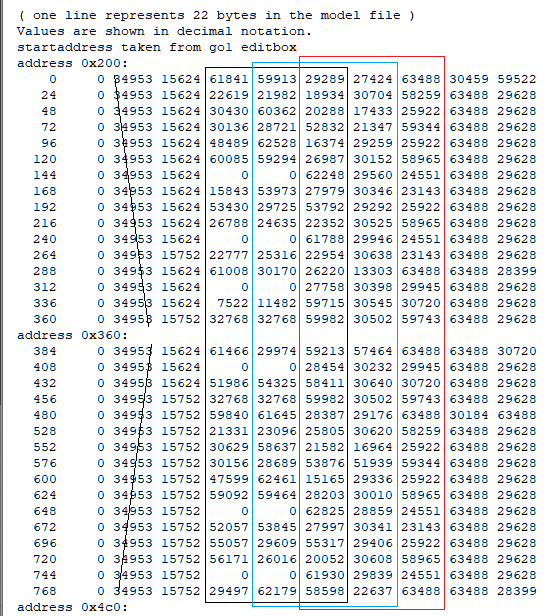

This is actually very helpful. Thank you. I have seen the same repeating groups of ~10 unsigned 16-bit values and came to a similar conclusion. The constants like 0, 1632x (~0x3FCx), 21845 (0x5555), 39322 (0x999A), 43691 (0xAAAB), 52429 (0xCCCD), 56798 (0xDDDE), 63488 (0xF800) are classic fixed-point / normalized values (fractions of 0xFFFF), which is exactly what you'd expect for compressed curves (rotations, maybe scales or tangents)... I am not 100% sure though.

The step back you are seeing is probably because multiple tracks/bones are interleaved via small index tables, or there's a separate header that says "keyframe list starts at X for track Y". I have not figured it out. Also, I am only assuming. It is an educated guess. 2d72 1100 looks smoother because it is probably for the static pieces. That is also a guess. I don't really know. I haven't found a clean stride/format yet from any area to the extent that I was happy with any result. Thankfully, your poking proves it isn't baked matrices. However, it might have "junk" data inside of it, or switch between the two different formats on the fly, which would have a call/read from the game engine the game was made with "elf statements/running".

For the last two days I have been looking for a header or track and still haven't found one. I don't know the meaning (definition) of each of the 10 values per entry (time? quat? s,t,r? tangents? flags?). I also haven't figured out how these numbers convert back to usable floats/matrices for a bone rig. You found the right haystack to be looking for the needle in, and I thank you for this. The repeated 16-bit values like 0x3FCx, 0x5555, 0xAAAA, 0xF800, and the way they change over "time", shed light on this. That matches my expectation that GARO is storing proper animation curves rather than just baked matrices, which most people would have assumed because of the static model additive animations. Sorry for repeating myself here. I have a client for this game who is CONSTANTLY having me repeat some of this information over and over, and it started to turn into a habit.

Going back to the "step back" jumps you pointed out: I believe they show the timeline might be split into several blocks (per-bone or per-channel segments) instead of one clean linear stream, which is probably why tools that only understand standard RWANM fail on this game. I don't have much experience with your viewer. The tools I use are far different, and I have been doing a lot of direct hex poking along with using RenderWare tools, so I'm still trying to figure out the parameters you showcase here.

When you mention 2d72 1100 in step 3, is that essentially a stride / FVF setup you're using to visualize the data as a point cloud? That was my original assumption, but now I am second-guessing myself. Do you have any thoughts yet on how those 10-value records break down (e.g. time + rotation + something else), or on where the per-track headers might sit? You helped a lot with this and I am very thankful.

1 point

-

Sounds complicated. Anyways, in gar.rws.dec_be_-15_anim_77.rwanm I found parts which might be animation curves. But the timeline looks somehow cut in pieces. Not talking about gaps (which might be filled by interpolated frames, as you may know), it's these steps "back in time," haha.

address 0x10c8:
4392 0 48060 16315 0 0 61541 30111 24841 63488 62256
4416 0 48060 16315 32768 0 21047 30717 23725 63488 62256
4440 0 0 16320 53301 30676 22558 24629 63330 63488 63488
step back?
4152 0 26214 16358 0 0 24224 30693 29960 63488 62256
4176 0 0 16320 52246 24488 61447 30130 30720 63488 62256
4464 0 0 16320 29178 29826 41088 49358 58949 63488 62256
one single interruption?
2616 0 0 16320 19549 62621 53216 29139 26173 63488 62256
4512 0 0 16320 57603 60936 21535 30255 58578 63488 62256
4536 0 0 16320 0 0 61231 30230 24841 63488 62256
4560 0 0 16320 57771 18609 21233 30669 23725 63488 62256
4584 0 0 16320 54112 29811 54832 29180 26173 63488 62256
4608 0 0 16320 25814 28071 57693 30186 58578 63488 62256
4632 0 0 16320 0 0 61565 30096 24841 63488 62256
4656 0 0 16320 52935 22002 20388 30710 23725 63488 62256
4680 0 17476 16324 28679 28658 61447 61426 63420 30697 62911
one single int/ step back
3024 0 17476 16324 44238 15564 43253 30720 57885 63488 62256

address 0x1228:
4248 0 17476 16324 29178 29826 11892 49786 58949 63488 62256
4776 0 17476 16324 20137 62612 53593 29149 26173 63488 62256
4800 0 17476 16324 57236 61086 21117 30230 58578 63488 62256
4824 0 17476 16324 0 0 61456 30162 24841 63488 62256
4848 0 17476 16324 62036 28766 59130 28692 23725 63488 62256
4872 0 17476 16324 54297 29800 55031 29192 26173 63488 62256
4896 0 17476 16324 26484 27725 58682 30174 58578 63488 62256
4920 0 17476 16324 0 0 61606 30070 24841 63488 62256
4944 0 17476 16324 55822 24945 19244 30661 23725 63488 62256
4968 0 34953 16328 53047 30677 22461 24619 63330 63488 63488
step back
4704 0 17476 16324 52133 24362 61452 30130 30720 63488 62256
4752 0 52429 16332 28686 28644 61454 61412 63418 30697 62906
4992 0 21845 16341 10682 15408 39411 30720 57885 63488 62256
5016 0 39322 16345 29178 29826 43782 50136 58949 63488 62256
5040 0 34953 16328 20579 62599 53912 29163 26173 63488 62256
5064 0 34953 16328 56688 61223 20816 30203 58578 63488 62256

address 0x1388:
5088 0 34953 16328 0 0 61592 30079 24841 63488 62256
5112 0 34953 16328 62115 28717 61186 26846 23725 63488 62256
5136 0 34953 16328 54424 29790 55156 29204 26173 63488 62256
5160 0 34953 16328 27053 27110 59618 30138 58578 63488 62256
5184 0 34953 16328 0 0 61665 30032 24841 63488 62256
5208 0 34953 16328 57532 26626 19092 30549 23725 63488 62256
5232 0 34953 16328 52021 24237 61456 30129 30720 63488 62256
5280 0 52429 16332 53754 30675 55574 57291 63330 63488 60606
one single step back
4488 0 52429 16332 20774 62580 54116 29185 26173 63488 62256
5376 0 52429 16332 56853 61202 20694 30206 58578 63488 62256
5400 0 52429 16332 0 0 61788 29946 24841 63488 62256
5424 0 52429 16332 62044 28380 61696 26151 23725 63488 62256
5448 0 52429 16332 54547 29777 55268 29218 26173 63488 62256
5472 0 52429 16332 27445 26230 60149 30073 58578 63488 62256
5496 0 52429 16332 0 0 61728 29989 24841 63488 62256
5520 0 52429 16332 58316 27162 19461 30435 23725 63488 62256

address 0x14e8:
5544 0 39322 16345 52592 30677 22509 24635 63330 63488 63488
5256 0 21845 16341 52111 24340 61455 30128 30720 63488 62256
5568 0 0 16384 28686 28644 61454 61412 63326 30697 62825
5304 0 43691 16362 30693 57026 15938 9533 26173 63488 62256
strange
576 0 4369 16337 20851 62555 54153 29215 26173 63488 62256
5616 0 4369 16337 57604 60892 21068 30267 58578 63488 62256
5640 0 4369 16337 0 0 62039 29750 24841 63488 62256
5664 0 4369 16337 62168 28395 61422 26828 23725 63488 62256
5688 0 4369 16337 54672 29762 55334 29235 26173 63488 62256
5712 0 4369 16337 27496 25516 60464 30038 58578 63488 62256
5736 0 4369 16337 0 0 61802 29936 24841 63488 62256
5760 0 4369 16337 58935 27260 19496 30384 23725 63488 62256
5784 0 43691 16362 53606 30676 55498 57321 63330 63488 60606
5592 0 21845 16341 20844 62524 54074 29253 26173 63488 62256
5904 0 21845 16341 58154 60378 22229 30354 58578 63488 62256
5928 0 21845 16341 0 0 62292 29517 24841 63488 62256

address 0x1648:
5952 0 21845 16341 62256 28360 60963 27198 23725 63488 62256
5976 0 21845 16341 54773 29744 55367 29255 26173 63488 62256
6000 0 21845 16341 27366 25291 60654 30032 58578 63488 62256
6024 0 21845 16341 0 0 61886 29873 24841 63488 62256
6048 0 21845 16341 59449 27129 19091 30370 23725 63488 62256
6072 0 43691 16362 0 0 24688 30684 29960 63488 62256
step back
4224 0 21845 16341 19132 57034 61493 30107 30720 63488 62256
step back
3264 0 39322 16345 14059 16222 10554 30720 57885 63488 62256
5328 0 39322 16345 20817 62489 53965 29295 26173 63488 62256
6120 0 39322 16345 58505 59791 23174 30435 58578 63488 62256
6144 0 39322 16345 0 0 62518 29271 24841 63488 62256
6168 0 39322 16345 62322 28254 60582 27535 23725 63488 62256
6192 0 39322 16345 54856 29727 55388 29274 26173 63488 62256
6216 0 39322 16345 27173 25274 60786 30038 58578 63488 62256
6240 0 39322 16345 0 0 61964 29812 24841 63488 62256
6264 0 39322 16345 59711 26929 17414 30364 23725 63488 62256

address 0x17a8:
6288 0 39322 16345 52220 24462 61451 30128 30720 63488 62256
and so on
5832 0 43691 16362 19209 57095 61486 30110 30720 63488 62256
6336 0 56798 16349 15191 16672 12805 30720 57885 63488 62256
6360 0 56798 16349 29178 29826 46966 49975 58949 63488 62256
5352 0 56798 16349 20837 62449 53905 29341 26173 63488 62256
6384 0 56798 16349 58615 59023 23685 30505 58578 63488 62256
6408 0 56798 16349 0 0 62719 29013 24841 63488 62256
6432 0 56798 16349 62391 28041 60163 27919 23725 63488 62256
6456 0 56798 16349 54925 29711 55403 29292 26173 63488 62256
6480 0 56798 16349 27014 25159 60900 30044 58578 63488 62256
6504 0 56798 16349 0 0 62028 29759 24841 63488 62256
6528 0 56798 16349 59959 26765 47430 30348 23725 63488 62256
6552 0 43691 16362 52854 30676 22596 24625 63330 63488 63488

edit: using 2d72 1100 in step 3 will give some more obvious animation curves, imho
edit 2: press 'mesh' to get the visualization. (toggle 'PtCld' to 'noPtC' and press 'table' to get the timeline list)

1 point
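Spotting the "steps back" by eye gets tedious, so a small helper can flag them. This sketch works on values that have already been decoded into integers (so it makes no claim about endianness), groups them 11 per row as in the dump above, and reports every row whose first value is lower than the previous row's. Treating the first column as the timeline is an assumption, and the function names are made up for illustration.

```python
def split_records(values, width=11):
    """Group a flat list of decoded 16-bit values into fixed-width rows."""
    if len(values) % width:
        raise ValueError("value count is not a multiple of the row width")
    return [tuple(values[i:i + width]) for i in range(0, len(values), width)]


def find_step_backs(records):
    """Return indices of rows whose first field is lower than the row before."""
    return [
        i for i in range(1, len(records))
        if records[i][0] < records[i - 1][0]
    ]


# First four rows from address 0x10c8 in the dump above.
sample = [
    4392, 0, 48060, 16315, 0, 0, 61541, 30111, 24841, 63488, 62256,
    4416, 0, 48060, 16315, 32768, 0, 21047, 30717, 23725, 63488, 62256,
    4440, 0, 0, 16320, 53301, 30676, 22558, 24629, 63330, 63488, 63488,
    4152, 0, 26214, 16358, 0, 0, 24224, 30693, 29960, 63488, 62256,
]
print(find_step_backs(split_records(sample)))
```

Grouping the flagged rows by their third and fourth values might show whether the out-of-order rows belong to a different track, but that is speculation at this point.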

-

Hi, I was wondering if there is a way to open the .arc files for The Sims on the original Xbox. I'm curious to see if the archived files are in .IFF format. I tried using The Sims 2 .arc QuickBMS script, but to no avail.

EDIT: Found a script that works; ironically, it was a Hulk .arc script. Thank you, ikskoks.

1 point

-

The armature is with the model. When you import the DFF using DragonFF in Blender, it will have the armature with it. The bones are very small; you can use the side menu GUI to find them more easily. Models and skeletons are 100% workable. It is the custom RenderWare animation format that is the problem here.

In the files I uploaded in the topic post, there should be two rwanm files. Those are some of the animations. If you have the game, I can give you one of my scripts that will extract everything for you so you can go through all the files for testing. I tried figuring this out through the ELF file. Let me know if you want my script so you can poke at all the animations in the game. There are two different sets of animations per character, even if they don't have a weapon. The weapon-specific animations would be much easier to look at since they are smaller.

There are mot files that give some additional information, but for the most part they are irrelevant. Mot files for this game are more of a listing of animations and positioning for static model pieces, not the main animations. I am uploading the mot file so you can see it is mostly just for static pieces. I'm also uploading the txt file for the character abbreviated as gar, which lists all the files for him, and the gmobj database text for it as well. I used a RenderWare tool (I forgot its name) that lets me look at how the files are structured. I'm sending you a section_tree.txt that was made by that program.

I was offered a job to make a modding tool for this game that would include editing animations... As it stands, I don't believe I will be able to finish it. I will just make everything I have for this game public if that happens.

I'm adding two scripts as well. The gar_rws_test.py script does a simple test on the files to find the correct compression and decompress them, so you can basically get everything. The garou_rws_extractor is a slightly upgraded version that needs work and is for extracting the models/animations. On aluigi's website there is a bms script called ougon_kishi_garo. That would be your first step to getting all the files out. Then you will have to use the scripts I posted here. The unfinished one will have errors. The test one will work better.

gar_mot.rar
gar_files_txt.rar
gmobj_DB_Files_txt.rar
Section_Tree_txt.rar
gar_rws_test.py
Garou_RWS_extractor.py

1 point

-

Drag and drop .pack files onto this script, and it will unpack all the .wem and .bnk audio files. Then drag and drop all of the .wem and .bnk files into the Game Files folder in the Wwise-Unpacker-1.0.3 directory and run either the MP3 or the OGG BAT converter.

wolfenstein_2_resources.py
Wwise-Unpacker-1.0.3.zip

1 point

-

I've almost finished extracting Wolfenstein: The New Colossus .bimage files. The structure again closely resembles the previous one; however, I noticed that this time .bimage files are very rarely used in the game at all. This is what I was able to extract right after looking at...

The structure: [Little-Endian]
0x0-0x3 = File signature ("42 49 4D 0E", BIM)
0x0C = Width
0x10 = Height
0x52 = Payload

1 point
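The offsets listed above are enough for a first-pass header reader. In this sketch the width and height are assumed to be 32-bit little-endian integers (the post only gives their offsets, not their sizes), and the payload is returned as raw bytes with no attempt to decode the pixel format; the function name is made up for illustration.

```python
import struct

BIM_SIGNATURE = bytes.fromhex("42494D0E")  # "BIM" followed by 0x0E
PAYLOAD_OFFSET = 0x52


def parse_bimage(data):
    """Return (width, height, payload) from a .bimage file held in memory."""
    if len(data) < PAYLOAD_OFFSET:
        raise ValueError("file is shorter than the header")
    if data[:4] != BIM_SIGNATURE:
        raise ValueError("unexpected file signature")
    # Assumption: both fields are unsigned 32-bit little-endian values.
    width, height = struct.unpack_from("<II", data, 0x0C)
    return width, height, data[PAYLOAD_OFFSET:]
```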

-

Drag and drop .resources files onto this script, and it will extract all of the assets from them (.texdb files are not implemented yet).

Next steps:
- .MD6MESH - https://reshax.com/topic/18614-wolfenstein-the-new-colossus-md6mesh-files/
- .BIMAGE/.TGA - https://reshax.com/topic/18617-wolfenstein-the-new-colossus-bimage/#comment-101572
- .PACK (.WEM .BNK) - https://reshax.com/topic/18618-wolfenstein-the-new-colossus-pack-containers-wem-and-bnk-files/#comment-101573

wolfenstein_2_resources.py
wolfenstein_2_resources.zip

1 point

-

There is a lot of overlap; in fact, the Kameo alpha uses the exact same CAFF version as the Conker Demo. The lvl files are just an archive/container of sorts, while the assets themselves are packaged inside individual "CAFF" containers. For example:

The file struct is fairly basic for the LVL container itself (for ImHex):

    struct LVL_ENTRY {
        u32 unk_00;
        u32* address : u32;
    };

    struct LVL_FILE_TABLE {
        u32 count;
        LVL_ENTRY array[count];
    };

    LVL_FILE_TABLE table @ 0x00;

They're similar in the sense that Conker stores the assets inside a "ZPackage" CAFF container instead of using an external container (.LVL) to store the individual CAFF assets. Unlike the earlier games like GBTG, Kameo also stores a lot of data inside pushbuffer commands, including things like the triangles/shaders/shaderparams. But as far as models go, they're very similar (as that's what I've spent the most time on). Not sure about the other assets, though.

1 point
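For anyone who prefers scripting over ImHex, the same table can be read with a few lines of Python. This is a rough equivalent of the pattern above, not a tested extractor: the byte order is left as a parameter because the post does not state it (the "<" default is just a placeholder), and `parse_lvl_table` is a made-up name.

```python
import struct


def parse_lvl_table(data, byteorder="<"):
    """Return a list of (unk_00, address) pairs from the start of an LVL file.

    byteorder is "<" for little-endian or ">" for big-endian; which one the
    files actually use is not stated in the post.
    """
    if len(data) < 4:
        raise ValueError("file is too small to hold an entry count")
    (count,) = struct.unpack_from(byteorder + "I", data, 0)
    if 4 + count * 8 > len(data):
        raise ValueError("entry count exceeds the file size")
    return [
        struct.unpack_from(byteorder + "II", data, 4 + i * 8)
        for i in range(count)
    ]
```

A count that fails the size check is a quick hint that the wrong byte order was chosen.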

-

When debugging function 0010D850, I found these filenames in the t0 register (after the decryption result; it also loads the filenames of files with the .JRS header into memory):

LOGO.JRS
MAINSCRIPT.JRS
SCENARIO.JRS
SCENARIO00_ROMA.JRS
SCENARIO00_ROMA_TGS.JRS
SCENARIO00_ROMA_TRIAL.JRS
SCENARIO01_EGOI.JRS
SCENARIO01_EGOI_TGS.JRS
RES/SCRIPT
RES/SCRIPT/SC
RES/SCRIPT/SC/00_ROMA
RES/SCRIPT/SC/01_EGOI
RES/SCRIPT/SC/02_TERO
RES/SCRIPT/SC/10_KAISOU
SCRIPT.SVL
etc...

The flow is:

SCRIPT.PTD (disk) → [AT GAME STARTUP] → Decompress YKLZ → Decrypt .JRS → Load into PS2 memory

---

Knowing this, I set a read/write/change breakpoint in the PS2 debugger after the initial file-loading process in RAM. In this case, I set it at 0056AC20 (which corresponds to `SCENARIO00_ROMA.JRS`), and as expected, this is the first dialogue shown in the Romantica route.

0011A010 (game_load_resource)
↓
0010DED0 (file_system_wrapper)
↓
0010DB58 (process_filename) → Converts "SCENARIO00_ROMA.JRS" to something
↓
0010DC48 (binary_search) → Searches table using 0018E4FC (optimized strcmp)
↓
0010DD98 (get_file_info) → Returns data_ptr (already in memory?)
↓
RETURNS to 0011A010
↓
......
00106800 (PROCESS .JRS?) → UNKNOWN
↓
001068D0 (FINISHES?) → Another unknown

---

FUNCTIONS

0010DC48: Binary Search
- Searches for files in a master table sorted alphabetically (only to verify file calls are correct)

0010DD98: Get File Info
- Returns data_ptr (already in memory), size, and flags

0010DED0: File System Wrapper
- Orchestrates the search and retrieval process

0011A010: Game Resource Loader
- Manages memory pool
- Calls the entire system

---

The only thing left to do is trace the flow and see what gets called after the .JRS file is loaded (which is obviously to process and render the text). With the information on how the game processes and displays text, we can process the previously extracted files from script.ptd to view the Japanese dialogues.

I need to rest my brain… haha

1 point
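For reference, the lookup that 0010DC48 appears to perform can be mimicked in a few lines. This is only a model of "binary search over an alphabetically sorted filename table"; it assumes plain byte-wise string ordering, which may not match whatever the game's optimized strcmp does, and `find_file` is a made-up name.

```python
from bisect import bisect_left


def find_file(sorted_names, name):
    """Return the index of `name` in a sorted table, or None if it is absent."""
    index = bisect_left(sorted_names, name)
    if index < len(sorted_names) and sorted_names[index] == name:
        return index
    return None


# A few of the names seen in the t0 register, sorted for the lookup.
table = sorted([
    "LOGO.JRS",
    "MAINSCRIPT.JRS",
    "SCENARIO.JRS",
    "SCENARIO00_ROMA.JRS",
    "SCENARIO01_EGOI.JRS",
])
print(find_file(table, "SCENARIO00_ROMA.JRS"))
```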

-

Yeah, if you are a fan of defining almost 800 resource types, then go for it. I have faith in you...

1 point

-

D:\88>py fgo_arcade_mot_parser.py --mot CHARA_POSE_SVT_0088_S01.mot --skl SVT_0088_S02.skl --bone arm_r --frames 100 0 0.000007 0.402854 0.002541 0.915261 1 0.000120 0.975284 0.052175 -0.214706 2 0.069035 0.000000 0.000000 -0.997614 3 0.000000 -0.276927 -0.000008 -0.960891 4 0.000000 -0.736034 -0.000022 -0.676945 5 0.000000 0.738174 0.000023 0.674610 6 0.000000 0.848413 0.000028 0.529335 7 0.000000 0.998036 0.000034 0.062648 8 0.000000 0.955551 0.000035 0.294825 9 0.000000 0.787267 0.000030 0.616612 10 0.000000 0.853046 0.000034 0.521836 11 0.000000 0.978375 0.000040 0.206837 12 0.000000 0.950974 0.000040 -0.309270 13 0.000000 0.988709 0.000043 -0.149846 14 0.000000 0.955742 0.000043 0.294205 15 0.000000 0.335155 0.000015 0.942163 16 0.000000 0.042013 0.000002 0.999117 17 0.000000 0.055729 0.000004 0.998446 18 0.000000 0.600873 0.000037 0.799344 19 0.000000 0.431736 0.000025 0.902000 20 0.000000 -0.474964 -0.000031 0.880005 21 0.000000 0.005943 0.000002 0.999982 22 0.000000 0.472832 0.000034 0.881153 23 0.000000 0.562161 0.000044 0.827028 24 0.000000 0.544627 0.000045 0.838678 25 0.000000 0.498805 0.000045 0.866714 26 0.000000 0.663745 0.000065 0.747959 27 0.000000 0.598301 0.000061 0.801271 28 0.000000 0.237227 0.000025 0.971454 29 0.000000 -0.314591 -0.000039 0.949227 30 0.000000 -0.813891 -0.000100 0.581017 31 0.000000 -0.989480 -0.000131 -0.144672 32 0.000000 -0.995146 -0.000143 -0.098406 33 0.000000 -0.920574 -0.000144 0.390568 34 0.000000 -0.220975 -0.000032 0.975279 35 0.000000 0.662326 0.000116 0.749216 36 0.000000 0.995018 0.000179 -0.099691 37 0.000000 0.960525 0.000182 0.278192 38 0.000000 0.592240 0.000118 0.805762 39 0.000000 -0.021210 -0.000011 0.999775 40 0.000000 -0.047665 -0.000008 0.998863 41 0.000000 0.314114 0.000081 0.949385 42 0.000000 0.421506 0.000118 0.906826 43 0.000000 0.740391 0.000225 0.672176 44 0.000000 0.996086 0.000322 -0.088392 45 0.000000 0.610455 0.000199 -0.792051 46 0.000000 -0.147819 -0.000063 -0.989014 47 0.000000 -0.758611 
-0.000329 -0.651544 48 0.000000 -0.633201 -0.000271 -0.773987 49 0.000000 0.144974 0.000071 -0.989435 50 0.327163 0.016943 0.000008 -0.944816 51 0.000075 0.714520 0.031271 -0.698916 52 0.000000 0.231376 0.000223 0.972864 53 0.000000 0.589910 0.000409 0.807469 54 0.000000 0.814108 0.000585 0.580713 55 0.000000 0.510365 0.000383 0.859958 56 0.000000 0.119739 0.000083 0.992805 57 0.000000 -0.291270 -0.000258 0.956641 58 0.000000 -0.409780 -0.000399 0.912184 59 0.000000 -0.622229 -0.000662 0.782835 60 -0.000002 -0.941428 -0.001064 0.337213 61 -0.000002 -0.966760 -0.001144 0.255684 62 -0.000002 -0.940971 -0.001175 0.338484 63 -0.000002 -0.957375 -0.001293 0.288845 64 -0.000003 -0.922744 -0.001354 0.385411 65 -0.000003 -0.793428 -0.001291 0.608662 66 -0.000004 -0.998795 -0.001788 -0.049037 67 -0.000003 -0.836432 -0.001628 -0.548068 68 -0.000004 -0.931258 -0.001932 -0.364356 69 -0.000005 -0.993580 -0.002216 -0.113106 70 -0.000005 -0.983486 -0.002361 0.180970 71 -0.000005 -0.812511 -0.002115 0.582941 72 -0.000005 -0.710143 -0.002080 0.704054 73 -0.000006 -0.820454 -0.002625 0.571706 74 -0.000008 -0.999984 -0.003401 0.004570 75 -0.000007 -0.792709 -0.002817 -0.609593 76 -0.000002 -0.308776 -0.001126 -0.951134 77 0.000004 0.259678 0.001201 -0.965695 78 0.000009 0.733887 0.003312 -0.679263 79 0.000013 0.975185 0.004912 -0.221336 80 0.000014 0.997418 0.005142 0.071628 81 0.000013 0.998641 0.004884 0.051882 82 0.000012 0.999473 0.004623 0.032116 83 0.000012 0.999914 0.004360 0.012337 84 0.000011 0.999964 0.004096 -0.007446 85 0.000010 0.999622 0.003830 -0.027227 86 0.000009 0.998889 0.003563 -0.046997 87 0.000009 0.997764 0.003294 -0.066748 88 0.000008 0.996250 0.003024 -0.086474 89 0.000007 0.994345 0.002753 -0.106165 90 0.000007 0.992051 0.002481 -0.125815 91 0.000006 0.989368 0.002208 -0.145416 92 0.000005 0.986298 0.001934 -0.164960 93 0.000004 0.982843 0.001659 -0.184439 94 0.000004 0.979002 0.001383 -0.203846 95 0.000003 0.974778 0.001107 -0.223173 96 0.000002 0.970173 
0.000831 -0.242413 97 0.000001 0.965188 0.000554 -0.261558 98 0.000000 0.959825 0.000277 -0.280601 99 0.000000 0.954086 0.000000 -0.299534
1 point

-

SLES_526.07.ELF (PS2 game executable)
SCRIPT.PTD (1,728,512 bytes)

1. INITIAL FILE ANALYSIS:

    hexdump -C SCRIPT.PTD | head -50

First 32 bytes: unknown header
Bytes 0x20-0x11F: 256-byte table
Rest: encrypted data

With Radare2, I examined the ELF a bit:
Found function at 0x0010da30 (file handling?)

3. CALL TRACING:

    0x0010da30 → 0x10ccf0 → 0x10a3f8 → 0x0010d850

4. DECODING FUNCTION ANALYSIS (0x0010d850):

🔓 ALGORITHMS FOUND:

1. LZSS DECOMPRESSION:

    def yklz_lzss_decompress(data):
        param_byte = data[7]       # Always 0x0A
        shift = param_byte - 8     # 2
        mask = (1 << shift) - 1    # 3
        decompressed_size = int.from_bytes(data[8:12], 'little')
        # ... standard LZSS implementation

2. DECRYPTION ALGORITHM (0x0010D850):

    def junroma_decrypt_exact(data):
        if len(data) <= 0x120:
            return data

        result = bytearray(data)
        t1 = 0        # Initialized to 0
        base_ptr = 0  # Base pointer (t3 in code)

        for i in range(0x120, len(data)):
            encrypted_byte = data[i]

            # t7 = (t1 XOR encrypted_byte) + base_ptr
            t7 = (t1 ^ encrypted_byte) + base_ptr

            # decrypted_byte = TABLE[(t7 + 0x20) & 0xFF]
            idx = (t7 + 0x20) & 0xFF
            decrypted_byte = SUBSTITUTION_TABLE[idx]
            result[i] = decrypted_byte

            # t1 = (t1 - 1) & 0xFF
            t1 = (t1 - 1) & 0xFF

        return bytes(result)

3. SUBSTITUTION TABLE (0x0055F7A0):

    SUBSTITUTION_TABLE = bytes([
        0x82, 0x91, 0x42, 0x88, 0x35, 0xBB, 0x0F, 0x85, 0x96, 0x2C, 0x56, 0xFF, 0x8E, 0x3C, 0x7C, 0x0D,
        0x61, 0xBF, 0xB8, 0xEF, 0xD1, 0x16, 0x07, 0xEE, 0x4F, 0x09, 0xCB, 0x0C, 0xE2, 0xB1, 0xDD, 0x12,
        0xFB, 0x08, 0x89, 0x8B, 0x03, 0xC9, 0x27, 0x19, 0x6A, 0x32, 0x5D, 0xCD, 0x98, 0x17, 0xF4, 0xE7,
        0x9F, 0x1A, 0xF9, 0x1B, 0x6C, 0x5C, 0x44, 0x3B, 0x6E, 0x3E, 0x60, 0xD5, 0x4D, 0x21, 0x43, 0x4E,
        0x65, 0xFD, 0x0B, 0x92, 0x8C, 0x2B, 0x41, 0xED, 0x76, 0x22, 0xC1, 0x74, 0xA3, 0x47, 0x14, 0x67,
        0xE0, 0xDE, 0x0A, 0xE3, 0x1E, 0x5F, 0x1C, 0x84, 0xEA, 0xA0, 0x02, 0x69, 0x52, 0xB9, 0xC5, 0x20,
        0x6D, 0xC8, 0x79, 0xD0, 0x05, 0x77, 0xB3, 0xDA, 0x7F, 0xBA, 0xF1, 0xB2, 0x72, 0x9E, 0x9A, 0xB5,
        0x6B, 0x1F, 0x58, 0xD2, 0x11, 0xA6, 0xD8, 0x80, 0x23, 0x46, 0x73, 0xB6, 0x2E, 0xE4, 0xAD, 0x81,
        0xC6, 0xDB, 0x57, 0x95, 0x01, 0xEC, 0xC4, 0xF2, 0xEB, 0xDF, 0xC0, 0x28, 0x49, 0xE9, 0x37, 0x15,
        0x5E, 0x34, 0x31, 0x00, 0xA8, 0x8D, 0x9C, 0xBC, 0xA2, 0x62, 0x90, 0xCA, 0x66, 0x3D, 0x70, 0x4C,
        0x24, 0x48, 0xBE, 0xA9, 0x5A, 0x94, 0xD4, 0xF5, 0x1D, 0x38, 0x25, 0x8F, 0x26, 0xB4, 0x83, 0x45,
        0x8A, 0x5B, 0xFC, 0x63, 0xA4, 0xFA, 0xAF, 0xF8, 0x10, 0xAB, 0x53, 0x54, 0x2F, 0xDC, 0xF6, 0xD3,
        0x0E, 0x68, 0xE1, 0x59, 0xAA, 0x30, 0xC2, 0x51, 0xD7, 0xE6, 0xB0, 0xBD, 0x6F, 0x06, 0x93, 0x7D,
        0x3A, 0xF7, 0x04, 0x78, 0x2D, 0x55, 0xA5, 0x2A, 0xA7, 0x40, 0x71, 0x9B, 0x7A, 0xC3, 0xD6, 0xFE,
        0xCF, 0xE5, 0x4A, 0x7B, 0xC7, 0x99, 0xF0, 0xCC, 0x3F, 0xAC, 0xB7, 0x87, 0x7E, 0x33, 0x13, 0x97,
        0xE8, 0x75, 0xCE, 0xA1, 0x50, 0x4B, 0x39, 0xD9, 0x86, 0x64, 0x9D, 0x29, 0x36, 0x18, 0xAE, 0xF3
    ])

EXTRACTION CODE (PYTHON)

    import os
    import struct
    import traceback

    # ==================== SUBSTITUTION TABLE ====================
    SUBSTITUTION_TABLE = bytes([
        0x82, 0x91, 0x42, 0x88, 0x35, 0xBB, 0x0F, 0x85, 0x96, 0x2C, 0x56, 0xFF, 0x8E, 0x3C, 0x7C, 0x0D,
        # ... (same as above, shortened for brevity)
        0xE8, 0x75, 0xCE, 0xA1, 0x50, 0x4B, 0x39, 0xD9, 0x86, 0x64, 0x9D, 0x29, 0x36, 0x18, 0xAE, 0xF3
    ])

    # ==================== LZSS DECOMPRESSION ====================
    def yklz_lzss_decompress(data):
        if len(data) < 16:
            raise ValueError("File too small (smaller than the 16-byte header).")

        # Extract header parameters
        param_byte = data[7]
        shift = param_byte - 8
        if shift < 0:
            shift = 4
        mask = (1 << shift) - 1

        # Decompressed size (uint32, little-endian)
        decompressed_size = int.from_bytes(data[8:12], 'little')

        # LZSS decompression
        src_pos = 16
        output = bytearray()

        while len(output) < decompressed_size and src_pos < len(data):
            flags = data[src_pos]
            src_pos += 1

            for bit in range(8):
                if len(output) >= decompressed_size or src_pos >= len(data):
                    break

                is_reference = (flags & 0x80) != 0
                flags = (flags << 1) & 0xFF

                if not is_reference:
                    # Literal byte
                    output.append(data[src_pos])
                    src_pos += 1
                else:
                    # Reference (match)
                    if src_pos + 1 >= len(data):
                        break
                    b1 = data[src_pos]
                    b2 = data[src_pos + 1]
                    src_pos += 2

                    length = (b1 >> shift) + 3
                    offset = ((b1 & mask) << 8) | b2
                    offset += 1

                    start_index = len(output) - offset
                    for i in range(length):
                        idx = start_index + i
                        if idx < 0:
                            output.append(0)
                        else:
                            output.append(output[idx])

        return bytes(output)

    # ==================== JRS DECRYPTION ====================
    def junroma_decrypt_exact(data):
        """
        EXACT implementation of the decryption algorithm (0x0010D850).
        Confirmed by debugger analysis.

        Initial t1 = 0
        t3 (base_ptr) = 0 for our data
        Start offset: 0x120
        """
        if len(data) <= 0x120:
            return data

        result = bytearray(data)

        # Initial t1 = 0 (confirmed by debugger)
        t1 = 0
        # base_ptr = 0 (t3 = base pointer to data)
        base_ptr = 0

        for i in range(0x120, len(data)):
            encrypted_byte = data[i]

            # t7 = (t1 XOR encrypted_byte) + base_ptr
            t7 = (t1 ^ encrypted_byte) + base_ptr

            # decrypted_byte = TABLE[(t7 + 0x20) & 0xFF]
            idx = (t7 + 0x20) & 0xFF
            decrypted_byte = SUBSTITUTION_TABLE[idx]
            result[i] = decrypted_byte

            # t1 = (t1 - 1) & 0xFF
            t1 = (t1 - 1) & 0xFF

        return bytes(result)

    # ==================== JRS FILE EXTRACTION ====================
    def extract_jrs_files(data):
        """Extracts individual .JRS files from decrypted data."""
        jrs_magic = b'\x8F\x83\xDB\xCF'  # JRS Magic: "純ロマ"
        files = []
        pos = 0

        while pos < len(data):
            idx = data.find(jrs_magic, pos)
            if idx == -1:
                break

            # Determine size (find next magic or use header size)
            next_magic = data.find(jrs_magic, idx + 4)
            if next_magic != -1:
                file_size = next_magic - idx
            else:
                file_size = len(data) - idx

            file_data = data[idx:idx + file_size]
            files.append({
                'offset': idx,
                'size': file_size,
                'data': file_data,
                'is_jrs': True
            })
            pos = idx + file_size

        return files

    # ==================== COMPLETE EXTRACTOR ====================
    def extract_all_yklz_sections():
        """Extracts and processes all YKLZ sections from SCRIPT.PTD."""
        print("=== JUNJOU ROMANTICA PS2 EXTRACTOR ===")

        # Read file
        try:
            with open('SCRIPT.PTD', 'rb') as f:
                raw_data = f.read()
            print(f"File SCRIPT.PTD read: {len(raw_data):,} bytes")
        except FileNotFoundError:
            print("❌ ERROR: SCRIPT.PTD not found")
            return

        # Search for YKLZ sections
        yklz_signature = b'YKLZ'
        positions = []
        pos = 0
        while True:
            idx = raw_data.find(yklz_signature, pos)
            if idx == -1:
                break
            positions.append(idx)
            pos = idx + 1

        print(f"YKLZ sections found: {len(positions)}")
        if not positions:
            print("❌ No YKLZ sections found")
            return

        # Create directories
        os.makedirs('EXTRACTED', exist_ok=True)
        os.makedirs('EXTRACTED/JRS_FILES', exist_ok=True)

        total_jrs = 0

        # Process each section
        for i, pos in enumerate(positions):
            print(f"\n--- Processing section #{i:03d} (offset 0x{pos:08X}) ---")

            # Extract YKLZ data
            if i + 1 < len(positions):
                next_pos = positions[i + 1]
                yklz_data = raw_data[pos:next_pos]
            else:
                yklz_data = raw_data[pos:]

            try:
                # 1. Decompress LZSS
                decompressed = yklz_lzss_decompress(yklz_data)
                print(f"  Decompressed: {len(decompressed):,} bytes")

                # Save decompressed version
                decomp_filename = f'EXTRACTED/section_{i:03d}_decompressed.bin'
                with open(decomp_filename, 'wb') as f:
                    f.write(decompressed)

                # 2. Apply decryption (except section 0)
                if i == 0:
                    # Section 0 is ASCII text without encryption
                    decrypted = decompressed
                    print("  Section 0 (metadata) - not decrypted")
                else:
                    decrypted = junroma_decrypt_exact(decompressed)
                    print("  Decryption applied (from offset 0x120)")

                # Save decrypted version
                decrypted_filename = f'EXTRACTED/section_{i:03d}_decrypted.bin'
                with open(decrypted_filename, 'wb') as f:
                    f.write(decrypted)

                # 3. Extract JRS files
                if decrypted[:4] == b'\x8F\x83\xDB\xCF':
                    print("  ✅ Contains JRS file(s)")
                    jrs_files = extract_jrs_files(decrypted)
                    for j, jrs in enumerate(jrs_files):
                        filename = f'EXTRACTED/JRS_FILES/section_{i:03d}_file_{j:03d}.jrs'
                        with open(filename, 'wb') as f:
                            f.write(jrs['data'])
                        print(f"  JRS file #{j}: {jrs['size']:,} bytes")
                        total_jrs += 1

                        # Analyze JRS header
                        if len(jrs['data']) >= 0x40:
                            version = int.from_bytes(jrs['data'][4:8], 'little')
                            declared_size = int.from_bytes(jrs['data'][12:16], 'little')
                            print(f"  Version: {version}, Declared size: {declared_size:,}")

                # 4. For section 0, show ASCII content
                if i == 0:
                    try:
                        text = decrypted.decode('ascii', errors='ignore').strip()
                        if text:
                            print(f"  ASCII content: {text[:100]}...")
                            # Save as text
                            text_filename = 'EXTRACTED/section_000_metadata.txt'
                            with open(text_filename, 'w', encoding='utf-8') as f:
                                f.write(text)
                    except OSError:
                        # Writing the metadata dump is optional; ignore I/O failures
                        pass

            except Exception as e:
                print(f"  ❌ Error: {e}")
                traceback.print_exc()

        print(f"\n{'='*60}")
        print("EXTRACTION COMPLETED!")
        print(f"Total JRS files extracted: {total_jrs}")
        print("Everything saved to: EXTRACTED/")

    # ==================== EXECUTION ====================
    if __name__ == "__main__":
        extract_all_yklz_sections()

Now, the resulting files all have a header with the magic number "JUNROMA" and appear to be encrypted. Trying the same algorithms again, or decoding the data as Shift-JIS Japanese, didn't work.
1 point
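One way to sanity-check the decryption routine above is to write its inverse and confirm that a round trip reproduces the input. The sketch below is only an illustration: the function names are hypothetical, the table is passed in as a parameter instead of being hard-coded, base_ptr is taken as 0 as in the post, and it assumes the 256-byte table is a permutation of 0..255 (worth verifying on the real table before relying on it).

```python
def build_inverse_table(table: bytes) -> bytes:
    """Return INV such that INV[table[i]] == i (requires a permutation)."""
    if len(table) != 256 or len(set(table)) != 256:
        raise ValueError("table must be a permutation of 0..255")
    inverse = bytearray(256)
    for index, value in enumerate(table):
        inverse[value] = index
    return bytes(inverse)


def decrypt(data: bytes, table: bytes, start: int = 0x120) -> bytes:
    """Same scheme as junroma_decrypt_exact, with base_ptr assumed to be 0."""
    out = bytearray(data)
    t1 = 0
    for i in range(start, len(data)):
        out[i] = table[((t1 ^ data[i]) + 0x20) & 0xFF]
        t1 = (t1 - 1) & 0xFF
    return bytes(out)


def encrypt(data: bytes, table: bytes, start: int = 0x120) -> bytes:
    """Hypothetical inverse of decrypt(): undo the table lookup, then the XOR."""
    inverse = build_inverse_table(table)
    out = bytearray(data)
    t1 = 0
    for i in range(start, len(data)):
        out[i] = t1 ^ ((inverse[data[i]] - 0x20) & 0xFF)
        t1 = (t1 - 1) & 0xFF
    return bytes(out)


if __name__ == "__main__":
    # Synthetic permutation used only for the demo, NOT the game's table.
    demo_table = bytes((i * 7 + 3) & 0xFF for i in range(256))
    sample = bytes(range(256)) * 3
    print(decrypt(encrypt(sample, demo_table), demo_table) == sample)
```

If the round trip holds with the real table but the JRS payloads still look scrambled, that would suggest a second layer rather than a mistake in this one.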

-

D:\88>py mot_rotation_extractor.py CHARA_POSE_SVT_0088_S01.mot arm_r 0 4.889439125475239e-10 0.5407554599817733 1.4406841468094752e-05 0.8411798453911885 1 -6.857191399194769e-10 -0.6332053752420114 -1.7710689242085512e-05 0.7739838192436295 2 9.680781275471423e-10 0.7419904178008506 2.197732230619127e-05 0.6704104857538526 3 1.168805020248789e-09 0.7357420612946057 2.3368850020973145e-05 0.6772618538614599 4 1.949070875265381e-09 0.9986487356304239 3.447117855729147e-05 -0.05196827527918962 5 1.8955871688974175e-09 0.7756976401807586 2.9743424602522006e-05 0.6311047220020868 6 2.5455886772310853e-09 0.878827057772032 3.542967924363111e-05 0.4771404418748128 7 3.226245697914568e-09 0.9417279975589877 3.9870071014519985e-05 -0.3363753513917405 8 4.099035407402478e-09 0.999881701599271 4.486528620122835e-05 -0.015381183116150696 9 4.475697367806648e-10 0.09022508301908228 4.352295069325249e-06 0.9959213996974146 10 -9.900496580111543e-11 -0.016108207506788277 -8.63153966278132e-07 0.9998702544081274 11 6.059799266392733e-09 0.8019628892165832 4.840268748269343e-05 0.597373854446753 12 -5.033915462308732e-09 -0.550395433826452 -3.5755896897761944e-05 0.8349041053585182 13 5.053784333516648e-09 0.4450858715305767 3.1563178794486055e-05 0.8954878926974015 14 8.419066313234432e-09 0.5885535705971041 4.6467524984686766e-05 0.8084582193151163 15 8.90557735051792e-09 0.48400980850508935 4.3647539557974e-05 0.8750625711146369 16 1.705888293133057e-08 0.739653758245311 7.467434392777951e-05 0.6729876019194776 17 5.1572109382203555e-09 0.18538461353349664 2.0212396550665652e-05 0.9826660392302642 18 -2.328339814495478e-08 -0.6828563164678986 -8.178477919607272e-05 0.7305526978741114 19 -3.913764879296982e-08 -0.916708464375864 -0.0001236022330290307 -0.39955672446366336 20 -5.154739810461322e-08 -0.9363174368121112 -0.0001465770889527342 0.35115471808983634 21 1.9941416689432306e-08 0.30514574357555446 5.101391250695028e-05 0.9523056613164191 22 7.665103345684452e-08 
0.9660344250734825 0.00017489193652521154 -0.2584133490858223 23 7.134766782413641e-08 0.7025958983240921 0.0001410635188906553 0.7115890553959081 24 -3.6214565545341617e-08 -0.27067645581828326 -6.240843221417008e-05 0.9626703757625655 25 5.1790279998520676e-08 0.31343069120738587 8.093030035361684e-05 0.9496110757881587 26 9.76471751687859e-08 0.48348140665826506 0.00013865182460356674 0.8753546196778808 27 2.4320931108231546e-07 0.9660679133066328 0.00031516943800675713 -0.2582879934250419 28 -5.760116220776852e-09 -0.01787397162264415 -6.858465480027745e-06 -0.9998402477853122 29 -3.6387090286711984e-07 -0.9183475143749914 -0.0003981115505918134 -0.39577478993530635 30 8.160074093917339e-08 0.1715509171534115 8.22720490679595e-05 -0.9851752514426693 31 5.819853717302611e-07 0.9980718776550587 0.0005416464023315233 -0.06206636490839266 32 2.1510279639614338e-07 0.29228983646104745 0.00018557380336221306 0.9563297637655598 33 7.59445645377239e-07 0.8474814676856007 0.0006068034885069383 0.5308246355609446 34 3.5690964072418125e-07 0.33639013436576054 0.0002642112401393917 0.9417226808852375 35 -4.091057811462368e-07 -0.3193635112840012 -0.0002813906752493844 0.94763224326712 36 -7.546453676244599e-07 -0.47343991028694626 -0.0004824099506598264 0.8808259865759857 37 -1.8906289688167591e-06 -0.982952229475143 -0.0011231856476135507 0.183857697745491 38 -2.1105062508406607e-06 -0.9390839443880565 -0.0011623317184248968 0.3436858949289188 39 -2.5853066957476077e-06 -0.9646240712325369 -0.0013513238739472448 0.2636258240686401 40 -2.548795549486021e-06 -0.7758524791739349 -0.0012792661159138326 0.6309130637666075 41 -3.1615860075804858e-06 -0.8029301935402714 -0.0015291192910664463 -0.5960711082459713 42 -4.322600984749709e-06 -0.953658460734394 -0.0020134870391519846 -0.3008845062826679 43 -5.25241455552712e-06 -0.988412035710498 -0.002360985304159304 0.15177639270794138 44 -4.161603802056902e-06 -0.6521624127905219 -0.001807772378551133 0.7580771196157773 45 
-6.4076487092003816e-06 -0.8348013728080191 -0.0026990522530703945 0.5505446240167313 46 -7.580466976304793e-06 -0.8733343844600407 -0.003096347434863975 -0.4871113481483479 47 -9.462704350659117e-07 -0.09508303543407069 -0.00037510524919442804 -0.9954692740952882 48 8.425608187935311e-06 0.7236249853299245 0.0032503281231428655 -0.690185711169369 49 1.3974775863233788e-05 0.9967865728728194 0.005250760229253485 0.0799309543429847 50 1.0252517924129751e-05 0.6522193732200672 0.003760479792529784 0.7580209415860742 51 7.079466725136183e-06 0.4028542154397706 0.002540938285457269 0.9152606321070067 52 3.595970832695169e-06 0.18027461327948976 0.001262493988347378 0.9836155091817782 53 9.582308647070628e-07 0.04164757493191193 0.00032967767325522203 0.9991323089631344 54 3.0435151035103606e-06 0.11275994047819692 0.0010266722975638299 0.9936217297131157 55 2.9213899144023735e-05 0.9943722786079471 0.009681902328738926 -0.10549896421237603 56 4.16363697258811e-06 0.12927425161304473 0.0013598656852372205 0.9916079460239664 57 -3.495453234750954e-05 -0.9799068872688088 -0.0112615781923993 0.1991373092063645 58 -1.19122282820623e-05 -0.2979602519629299 -0.003812380482884125 -0.9545706646777596 59 4.098904589922595e-05 0.8996510312258749 0.013230551163297976 -0.436409180529046 60 4.5509829644210436e-05 0.9224974013820024 0.014836853340391197 0.38571804489194406 61 2.5386896899756216e-05 0.4819785870349148 0.008372019390152027 0.8761429964832588 62 -7.74043605304678e-06 -0.13710430629077341 -0.0025848694109572466 0.9905532431862206 63 -4.197002811754517e-05 -0.6905692943694501 -0.014249406220070262 0.7231258551144275 64 -6.446179774219989e-05 -0.9784274441560907 -0.022294390233699647 0.20538425580108186 65 -6.738314184076315e-05 -0.9344167343406218 -0.02371722618609395 -0.3553911299199207 66 -5.318598254396075e-05 -0.6860462826797571 -0.019139229784659265 -0.727306115109579 67 -3.8073695903130686e-05 -0.4746078275031473 -0.01399468271587061 -0.8800861079910411 68 
-2.0410335231135395e-05 -0.2460513518917346 -0.007675809464634084 -0.9692263996428959 69 -1.2414483692513633e-06 -0.014420071326659416 -0.0004768556868665043 -0.999895911657832 70 1.8390372862545596e-05 0.20651283622780844 0.0072618292052958125 -0.9784169428068659 71 3.720258570931797e-05 0.40298122945248555 0.015066659318015195 -0.9150842163986469 72 5.4747583197640296e-05 0.5694511849406998 0.02265236859910118 -0.8217129761475023 73 7.145976821520378e-05 0.7041115041467583 0.0298058800041412 -0.7094635960631596 74 8.65754632068576e-05 0.8079792090272402 0.036359064406225806 -0.5880880960535662 75 0.00010014190563846156 0.8858057159534748 0.042406154240265545 -0.4621146412291018 76 0.00010775037244367866 0.9231688063963739 0.04585370721855432 -0.381650076406211 77 0.0001119465641689991 0.9431990123522885 0.047940303828193226 -0.3287511792138469 78 0.00011522374207694951 0.9580387962502455 0.049692902280489296 -0.28229818820851793 79 0.00011812670846677753 0.9687373316043265 0.05115606062490537 -0.24275713349673386 80 0.00012029059110273124 0.9757962307228829 0.05223183248698172 -0.2123523894667537 81 0.00012149859992430558 0.9800959610243474 0.0529662230739378 -0.19132817783884565 82 0.000122493452797258 0.982595143704861 0.053448177709211446 -0.17790463980049037 83 0.00012276327248308762 0.9836103161533365 0.05364896424336061 -0.1721409873374937 84 -5.960464477538421e-07 -5.364418029784578e-07 -5.9604644775384206e-08 -0.9999999999996767 85 -4.172325134277023e-07 -5.364418029784743e-07 -0.0 -0.9999999999997691 86 -0.498829392620764 -0.09178566173618598 -0.860177822755763 -0.05327985169278694 87 -0.7640124337551579 -1.0923536302676896e-08 -0.0 -0.6452015197343542 88 -1.6689300537085817e-06 -0.0 -2.3841857910122597e-06 -0.9999999999957652 89 -0.0 -3.09944152831535e-06 -0.0 -0.9999999999951967 90 -3.814697265602702e-06 -0.0 -4.529953002903208e-06 -0.9999999999824638 91 -0.0 -5.2452087402103235e-06 -0.0 -0.9999999999862439 92 -5.960464477503769e-06 -0.0 -0.0 
-0.9999999999822364 93 -5.960464477539059e-08 -0.0 -0.0 -0.9999999999999982 94 0.1485628140697129 1.683760545851179e-05 6.091795376621932e-13 -0.988902972991882 95 -5.960464477539059e-08 -0.0 -0.0 -0.9999999999999982 96 0.3462483126415183 4.290287474297634e-06 -2.1494426223936043e-09 -0.9381429027469615 97 -5.960464477539059e-08 -0.0 -0.0 -0.9999999999999982 98 0.36087411019359966 -0.00038851728707961855 -6.715246250684779e-09 -0.9326144571291479 99 -5.960464477539059e-08 -0.0 -0.0 -0.9999999999999982 100 0.0 0.9540857816096563 2.1230699129955274e-07 -0.29953350618961844
1 point

-

Today I'm going to discuss how we can reverse engineer the extraction of game archives. Sit back, because this is where it starts to get interesting...

+==== TUTORIAL SECTION ====+

But how do those files store game assets like 3D models, textures, sounds, videos, etc.? Well, the answer is simple: they usually bundle them, packing them close together in either compressed or (rarely) even encrypted form. To understand this, let's first quickly go over the basics of how a computer stores any file at all.

DATA TYPES

These are the most frequent data types:

Byte/Character = 1 byte, so 8 bits
Word/Short = 2 bytes, so 16 bits
Dword/Int = 4 bytes, so 32 bits
ULONG32/Long = 4 bytes, so 32 bits
ULONG64/Long Long = 8 bytes, so 64 bits
Float = 4 bytes, so 32 bits
Double = 8 bytes, so 64 bits
String = a sequence of 1-byte characters terminated with a null byte ("00")

A bit is the smallest unit of data we can represent: it is either 0 or 1. Combining 8 bits (example: 0 1 1 1 0 0 1 1) gives us a whole byte.

So, all files literally look like this:

    Address:    HEX:                                             ASCII:
                00 01 02 03 04 05 06 07 08 09 0A 0B 0C 0D 0E 0F
    0x00000040  2a 2a 20 2a 2f 0a 09 54 61 62 6c 65 20 77 69 74  ** */..Table wit
    0x00000050  68 20 54 41 42 73 20 28 30 39 29 0a 09 31 09 09  h TABs (09)..1..
    0x00000060  32 09 09 33 0a 09 33 2e 31 34 09 36 2e 32 38 09  2..3..3.14.6.28.
    0x00000070  39 2e 34 32 0a                                   9.42.

This is called a hex dump. It is essentially a more human-readable view of a binary file that, alongside the actual binary data in hex, shows the addresses and the ASCII representation for each 0x..0 to 0x..F line.

The packed file usually contains compressed data with a small separator/padding between entries. However, it doesn't tell us the name and path of the file we want to decompress, which is a problem. Heck, we don't even know which compression method was used, which "flavour/version", or what the decompressed file should look like... That's where QuickBMS comes to help.

QuickBMS

QuickBMS has one very specific function I want to talk about: "comtype unzip_dynamic". QuickBMS supports a very large number of compression methods and their "flavours/versions". It also performs very fast and is good for extracting multiple files out of a package at once. There are also already lots of QuickBMS scripts out there for extracting specific archives, but I'll talk about that later.

Compression types

As said previously, the block separators/markers are very useful to identify, but it turns out most compression methods have their own headers and magic numbers. Here are a few of them:

Magic numbers:

ZLIB: 78 01 (NoComp), 78 5E (Fastest), 78 9C (Default), 78 DA (Maximum)
LZ4: [No Magic Numbers]
LZ4 Frame: 04 22 4D 18 (Default)
LZW: [No Magic Numbers]
LZO: [No Magic Numbers]
BZIP/BZIP2: 42 5A 68
GZIP: 1F 8B 08

Practical steps

Below is an example of what an average QuickBMS archive extractor looks like. It's one of my favourites and also my first one; it's designed for extracting assets from Wolfenstein: The New Order & Wolfenstein: The Old Blood:

wolfenstein.bms:

    open FDDE index 0
    open FDDE resources 1
    comtype unzip_dynamic
    endian big
    goto 0x24
    get files long
    get unk long
    math TMP = files
    math TMP - 1
    for i = 0 < files
        endian little
        get FNsize1 long
        getdstring FN1 FNsize1
        get FNsize2 long
        getdstring FN2 FNsize2
        get namesize long
        getdstring name namesize
        endian big
        get offset long
        get size long
        get zsize long
        get unksize long
        math unksize * 0x18
        math unksize + 5
        getdstring unkdata unksize
        if size = zsize
            log name offset size 1
        else
            clog name offset zsize size 1
        endif
        if i != TMP
            get filenumber long
        endif
    next i

Script summary:

Open index + data files
Read file count
For each file:
  Read filename
  Read offset and sizes
  Skip metadata
  Extract raw or compressed data

Compression logic

| Condition | Action |
| --- | --- |
| size == zsize | Raw copy |
| size != zsize | Zlib decompress |

In id Tech 5 games, all assets are packed inside the .resources files, and all of the metadata (name, path, extension, compressed size, decompressed size) lives in the .index files. That means we open both files in this way:

1. Setup

    open FDDE index 0
    open FDDE resources 1

open = tells QuickBMS to open files.
FDDE = open a file in the same folder and with the same base name as the input file, but with the given extension.
index -> is opened as file 0
resources -> is opened as file 1

    comtype unzip_dynamic

This sets the compression type to zlib "dynamic", which handles a wide range of zlib-style streams.

    endian big

This switches reading to big-endian.

    goto 0x24

Jumps to 0x24 to skip the archive header.

    get files long
    get unk long

files -> total number of files in the archive
unk -> unknown value (probably versioning or flags)

    math TMP = files
    math TMP - 1

TMP = holds files - 1 (used later to avoid reading an extra value after the last entry).

2. Loop

    for i = 0 < files

The script now iterates once per file entry.

    endian little

The filename block is little-endian, even though the rest of the archive is big-endian.

    get FNsize1 long
    getdstring FN1 FNsize1

Reads the length of the string
Reads the string data

    get FNsize2 long
    getdstring FN2 FNsize2

Another string component

    get namesize long
    getdstring name namesize

This is the real filename
name is used later by log / clog
This determines the extracted file name on disk

    endian big

Switch back to big-endian.

    get offset long
    get size long
    get zsize long

offset = where the file data is located in resources
size = uncompressed size
zsize = compressed size

    get unksize long
    math unksize * 0x18
    math unksize + 5
    getdstring unkdata unksize

Reads a count (unksize)
Multiplies it by 0x18 (24 bytes per entry)
Adds 5 extra bytes
Skips a block of unknown metadata
This is not used for extraction, only skipped to reach the next file entry.

3. Extract the files

    if size = zsize
        log name offset size 1

If the compressed size equals the uncompressed size:
The file is stored raw
log copies the data directly from file 1 (resources)

    else
        clog name offset zsize size 1
    endif

clog means compressed log
Reads zsize bytes
Decompresses using unzip_dynamic
Writes size bytes to disk

    if i != TMP
        get filenumber long
    endif

Between entries, there is an extra long
Probably an ID or index value
Not present after the last entry
This prevents reading past the table

    next i

Repeats until all files are extracted
1 point
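For readers who would rather follow the same walk in a general-purpose language, here is a rough Python sketch of the index parsing that wolfenstein.bms performs. It mirrors only what the script reads (header skip to 0x24, big-endian counts, little-endian length-prefixed strings, the `unksize * 0x18 + 5` skip, and the extra long between entries). The names `IndexEntry` and `parse_index` are made up for illustration, and decoding the names as ASCII is an assumption.

```python
import io
import struct
from typing import List, NamedTuple


class IndexEntry(NamedTuple):
    name: str
    offset: int  # where the payload sits inside the .resources file
    size: int    # uncompressed size
    zsize: int   # stored size; equal to size when the file is stored raw


def _read_u32(stream: io.BytesIO, big_endian: bool) -> int:
    raw = stream.read(4)
    if len(raw) != 4:
        raise EOFError("index ended in the middle of a 32-bit field")
    return struct.unpack(">I" if big_endian else "<I", raw)[0]


def _read_sized_string(stream: io.BytesIO) -> str:
    # Length prefix is little-endian, as in the "endian little" block.
    length = _read_u32(stream, big_endian=False)
    return stream.read(length).decode("ascii", errors="replace")


def parse_index(blob: bytes) -> List[IndexEntry]:
    stream = io.BytesIO(blob)
    stream.seek(0x24)                        # goto 0x24
    file_count = _read_u32(stream, True)     # get files long
    _read_u32(stream, True)                  # get unk long (ignored)
    entries = []
    for i in range(file_count):
        _read_sized_string(stream)           # FN1
        _read_sized_string(stream)           # FN2
        name = _read_sized_string(stream)    # the real file name
        offset = _read_u32(stream, True)
        size = _read_u32(stream, True)
        zsize = _read_u32(stream, True)
        unk_count = _read_u32(stream, True)
        stream.seek(unk_count * 0x18 + 5, io.SEEK_CUR)  # skip unknown metadata
        entries.append(IndexEntry(name, offset, size, zsize))
        if i != file_count - 1:
            _read_u32(stream, True)          # extra long between entries
    return entries
```

With the entries in hand, extraction follows the table above: copy `size` bytes from `offset` when `size == zsize`, otherwise read `zsize` bytes and decompress them.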

-

1 point

-

Skeleton deformations for the character creator is probably a more accurate term for Veilguard's “morph targets” (DAO/DA2 use straight-up targets, while I/VG use the skeleton to deform morphs with different bone positions). But I’m not a game dev. 😉
1 point

-

1 point

-

I am attaching the fmodel json file. With uassetgui, what procedure did you follow to obtain that result? Maybe I'm missing something, as this is the first time I've used uassetgui.

Edit: Ah ok, thanks, with

    .\UAssetGUI tojson GameTextUI.uasset GameTextUI.json VER_UE5_4 Mappings.usmap

I can get the base64 code, but it is unreadable:

����������m_DataList�d��m_id��m_gametext��No data.�������������m_id�m_gametext��Held�������������m_id �m_gametext��None�������������m_id!

GameTextUI_fmodel.rar
1 point

-

1 point

-

When you choose "uncompressed" the file size should be bigger than for a DXT5 file. I'd try some other tool, maybe Gimp, for testing.
1 point

-

ImageHeat v0.39.2 (HOTFIX)
https://github.com/bartlomiejduda/ImageHeat/releases/tag/v0.39.2

Changes:
- Fixed hex input
- Changed endianness and pixel format bindings (required for hex input fix)
1 point

-

So here's the pixel format list for PS4:

PF_DXT5 = 7
PF_DXT1 = 13
PF_BC7U = 22
PF_UNKNOWN = 2 (not sure, but it could be RGBA)
1 point
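As a quick cross-check for IDs like these, it can help to compare the payload size against what each format would need. The sketch below is an assumption-laden illustration: the enum simply restates the IDs from the post, the block sizes are the standard ones for BC1/DXT1 (8 bytes per 4x4 block) and BC3/DXT5 and BC7 (16 bytes per 4x4 block), and treating ID 2 as 32-bit RGBA is only the guess made in the post. Mipmaps and console tiling/padding are ignored, so real files may be larger.

```python
from enum import IntEnum


class PixelFormat(IntEnum):
    PF_UNKNOWN = 2   # guessed to be RGBA in the post; unconfirmed
    PF_DXT5 = 7
    PF_DXT1 = 13
    PF_BC7U = 22


# Bytes per 4x4 block for the block-compressed formats.
BLOCK_BYTES = {
    PixelFormat.PF_DXT1: 8,
    PixelFormat.PF_DXT5: 16,
    PixelFormat.PF_BC7U: 16,
}


def expected_size(fmt: int, width: int, height: int) -> int:
    """Size of the top mip level only, without tiling or padding."""
    pixel_format = PixelFormat(fmt)
    if pixel_format in BLOCK_BYTES:
        blocks_wide = (width + 3) // 4
        blocks_high = (height + 3) // 4
        return blocks_wide * blocks_high * BLOCK_BYTES[pixel_format]
    # Assumption: the remaining ID is uncompressed 8-bit RGBA (4 bytes/pixel).
    return width * height * 4


if __name__ == "__main__":
    for fmt in PixelFormat:
        print(fmt.name, expected_size(fmt, 256, 256))
```

If a texture's data size matches none of these for its width and height, the format guess (or the assumed absence of mipmaps and padding) is probably wrong.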

-

I remember making a request on your GitHub about it. 👍 Somehow, we were not able to see these textures in ImageHeat, only after extraction and decompression. Anyway, for the Switch textures there seems to be an issue, as h3x3r said above, and I can confirm it too. In the attachment you will find all the textures in UNIFORM.TEX (including jersey-color) from the Switch version, already decompressed. The stock texture file is in the Switch files in the first post (UNIFORM.TEX). In the screenshot below you can see the parameters for the jersey-color texture. Maybe this will be useful when you have time to check it and fix ImageHeat.

UNIFORM Switch decompressed.zip
1 point

-

1 point

-

1 point

-

I've moved this topic to graphic file formats, rather than 3d Models where you posted it. Also, please don't start another post for the exact same thing. I've deleted your other post as a duplicate. Please read the rules before posting again.
1 point

-

Please use this updated script to repackage the data file. If you have any questions, please let me know so that other capable people or you can continue to process these .pxc files yourself.

    # Update the decompression of pxc file(script 0.2)
    get FILE_SIZE asize
    xmath TOC_PTR "FILE_SIZE - 8"
    goto TOC_PTR
    get TOC_OFFSET long
    goto TOC_OFFSET
    get FILE_COUNT long
    for i = 0 < FILE_COUNT
        get OFFSET long
        get SIZE long
        get COMP_FLAG byte
        get NAME_LEN short
        getdstring NAME NAME_LEN
        get UNK long
        savepos TOC_ENTRY_POS
        if COMP_FLAG == 0
            goto OFFSET
            getdstring MAGIC 4
            if MAGIC == "PxZP"
                comtype zlib
                get UNCOMP_SIZE long
                get COMP_SIZE long
                savepos DATA_START
                clog NAME DATA_START COMP_SIZE UNCOMP_SIZE
            else
                log NAME OFFSET SIZE
            endif
        else
            goto OFFSET
            get MAGIC long
            get UNCOMP_SIZE long
            get COMP_SIZE long
            savepos COMP_START
            clog NAME COMP_START COMP_SIZE UNCOMP_SIZE
        endif
        goto TOC_ENTRY_POS
    next i

pxc.zip
1 point
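For anyone who wants to keep working on .pxc files outside QuickBMS, the script's layout can be mirrored in Python roughly as follows. This is a sketch based only on what the script reads: a table-of-contents pointer 8 bytes before the end of the file, then per-entry offset, size, compression flag, name length, name, and an unknown long. The names `PxcEntry`, `read_toc`, and `read_payload` are hypothetical. Little-endian fields and zlib for the flagged branch are assumptions, based on QuickBMS defaulting to both when a script does not say otherwise; UTF-8 names are also an assumption.

```python
import struct
import zlib
from typing import List, NamedTuple


class PxcEntry(NamedTuple):
    name: str
    offset: int
    size: int
    comp_flag: int


def read_toc(blob: bytes) -> List[PxcEntry]:
    """Walk the table of contents the same way the BMS script does."""
    (toc_offset,) = struct.unpack_from("<I", blob, len(blob) - 8)
    (file_count,) = struct.unpack_from("<I", blob, toc_offset)
    pos = toc_offset + 4
    entries = []
    for _ in range(file_count):
        # OFFSET long, SIZE long, COMP_FLAG byte, NAME_LEN short = 11 bytes
        offset, size, comp_flag, name_len = struct.unpack_from("<IIBH", blob, pos)
        pos += 11
        name = blob[pos:pos + name_len].decode("utf-8", errors="replace")
        pos += name_len + 4  # skip the name and the UNK long
        entries.append(PxcEntry(name, offset, size, comp_flag))
    return entries


def read_payload(blob: bytes, entry: PxcEntry) -> bytes:
    """Return the entry's data, decompressing when a block header is present."""
    magic = blob[entry.offset:entry.offset + 4]
    if entry.comp_flag == 0 and magic != b"PxZP":
        return blob[entry.offset:entry.offset + entry.size]  # stored raw
    # Header: 4-byte magic, UNCOMP_SIZE long, COMP_SIZE long, then the stream.
    uncomp_size, comp_size = struct.unpack_from("<II", blob, entry.offset + 4)
    start = entry.offset + 12
    data = zlib.decompress(blob[start:start + comp_size])
    if len(data) != uncomp_size:
        raise ValueError(f"{entry.name}: size mismatch after decompression")
    return data
```

Repacking would need the reverse: write the payloads, rebuild the table with the new offsets and sizes, and update the pointer at the end of the file. None of that is covered here.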

-

Hello, although the file is recognized as .wav by the system, it is actually in the .wem format commonly used by the Messiah Engine. The .bnk format may also appear. You can download a build of this tool suitable for your system and use its command line to convert the files to standard .wav or other audio formats: https://github.com/vgmstream/vgmstream

You can also use the following exe program. I have used it before, and it can convert files in batches. Before using it, you should change the file extension to .wem. If the file header is BKHD, you should change the extension to .bnk instead.

RavioliGameTools_v2.10.zip
1 point
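The renaming step can be scripted. Below is a small sketch that picks the extension from the first four bytes of a file: `BKHD` for sound banks, as mentioned above, and `RIFF`/`RIFX` for .wem. The RIFF/RIFX check is an assumption about these particular files, and since ordinary .wav files also start with `RIFF`, it should only be run on files already known to come from the game. The function names are illustrative.

```python
from pathlib import Path


def extension_for_header(header: bytes) -> str:
    """Map the first four bytes of a file to the extension the tools expect."""
    magic = header[:4]
    if magic == b"BKHD":
        return ".bnk"
    if magic in (b"RIFF", b"RIFX"):
        return ".wem"
    return ""  # unknown header: leave the file alone


def rename_by_header(path: Path) -> Path:
    """Rename one file in place and return its (possibly unchanged) path."""
    with path.open("rb") as handle:
        header = handle.read(4)
    extension = extension_for_header(header)
    if not extension or path.suffix.lower() == extension:
        return path
    target = path.with_suffix(extension)
    path.rename(target)
    return target


def rename_folder(folder: Path) -> None:
    # Snapshot the listing first so renames do not disturb the iteration.
    for item in sorted(folder.iterdir()):
        if item.is_file():
            rename_by_header(item)
```

Calling `rename_folder(Path("extracted_audio"))` (a placeholder folder name) would prepare a whole directory for batch conversion.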

ResHax.com: Empowering Curious Minds in the World of Reverse Engineering

Delving into the Art of Code Unraveling: ResHax.com - Your Gateway to the Thrilling World of Reverse Engineering, Where Curiosity Meets Innovation!
