Leaderboard

Popular Content

Showing content with the highest reputation since 11/22/2025 in Posts

-

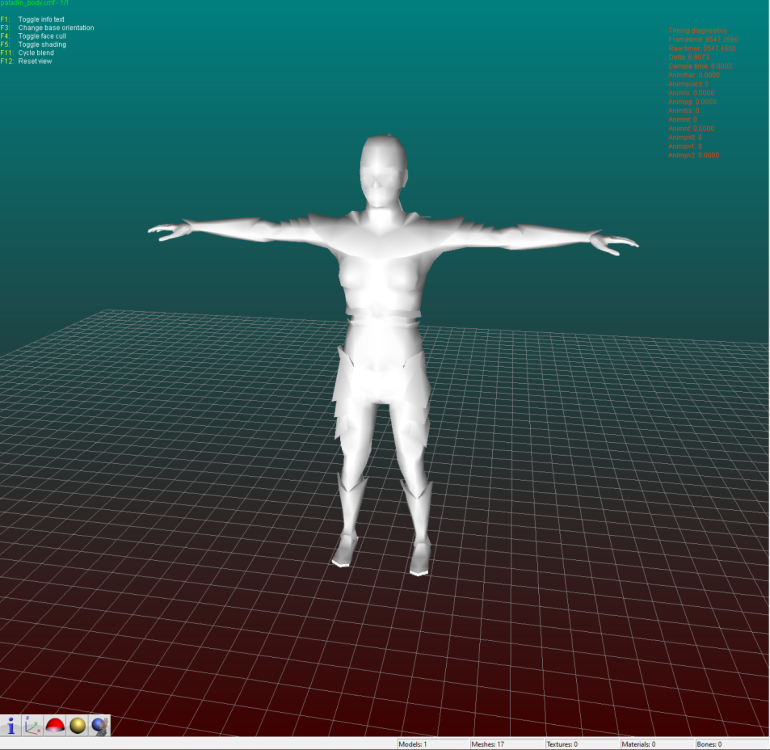

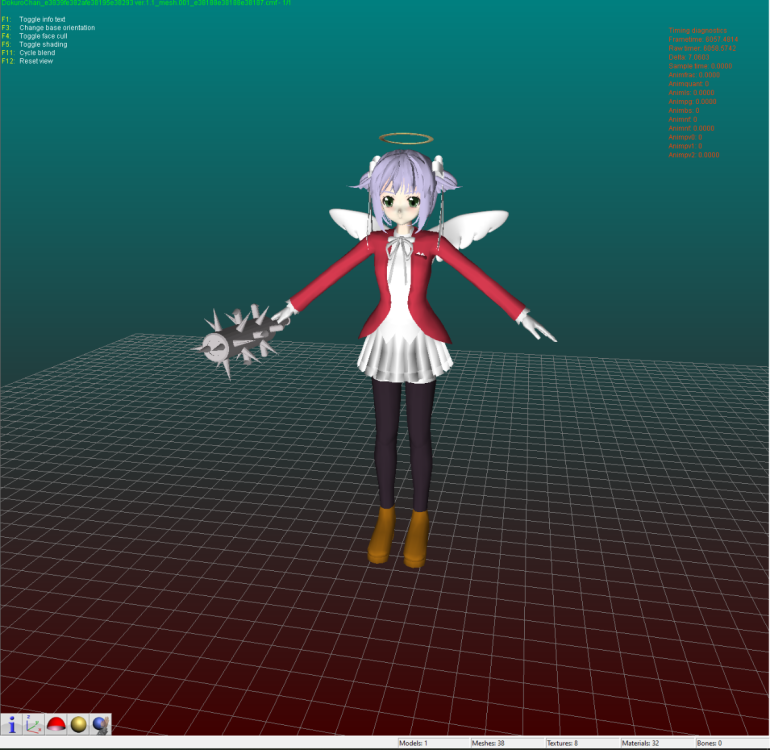

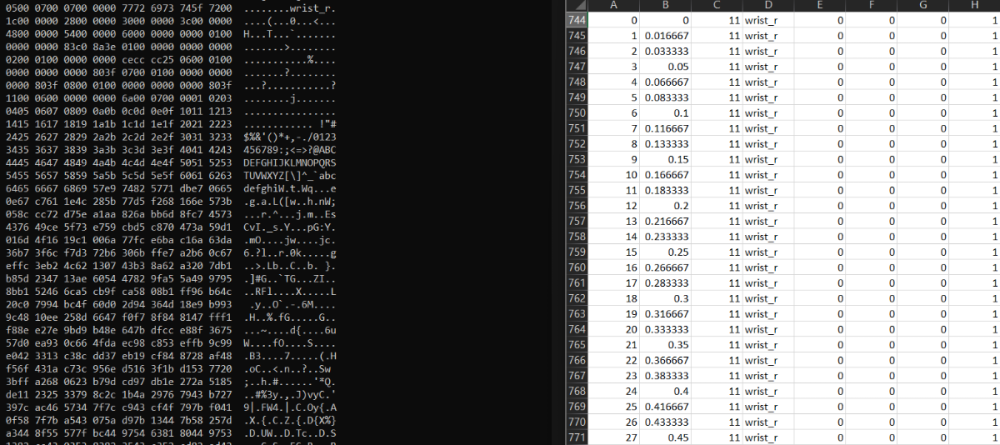

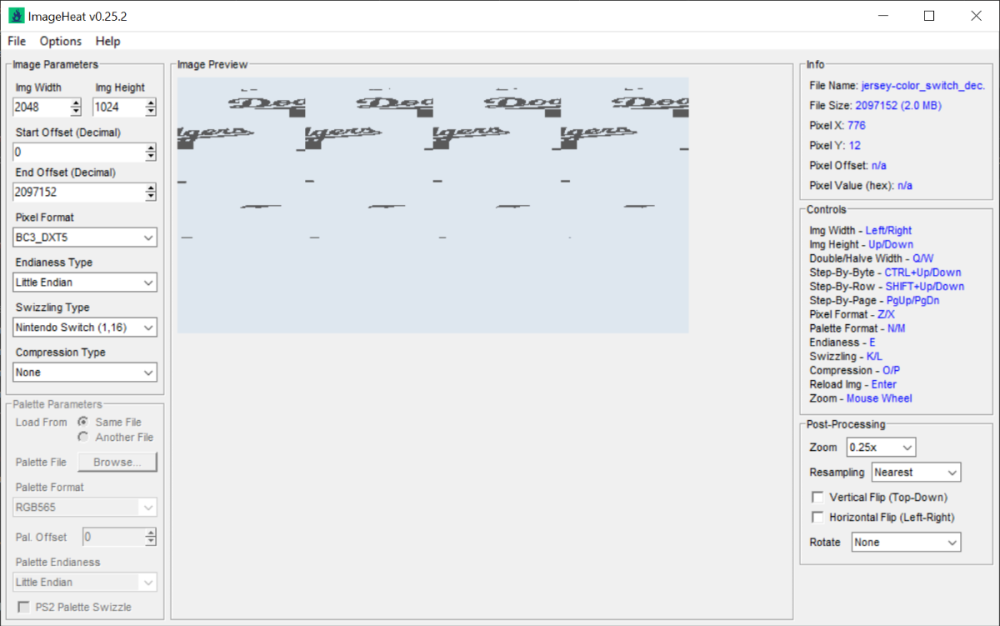

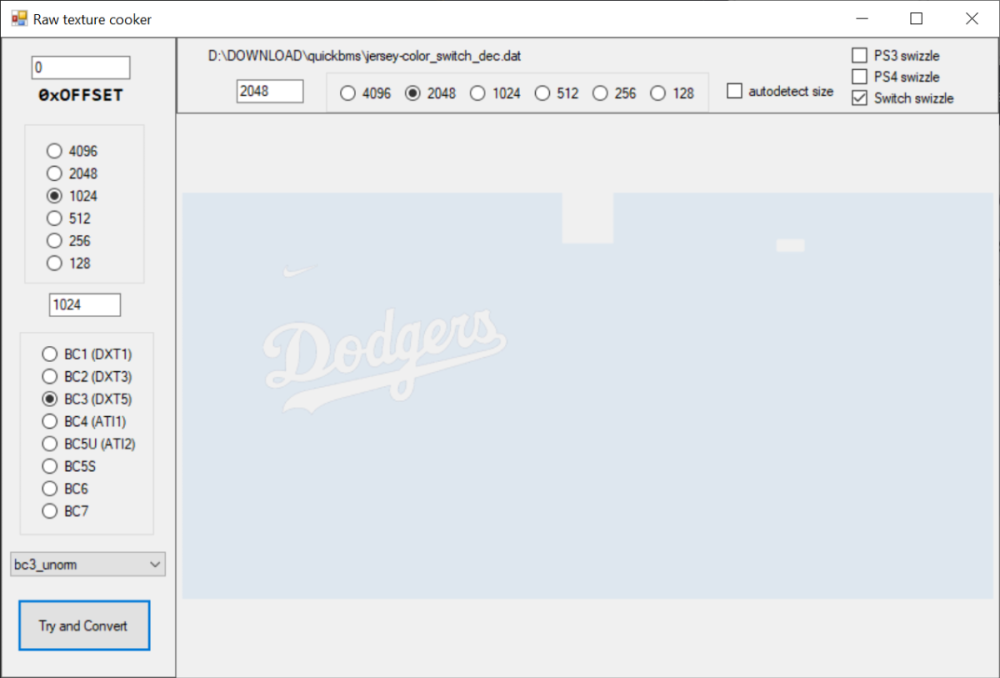

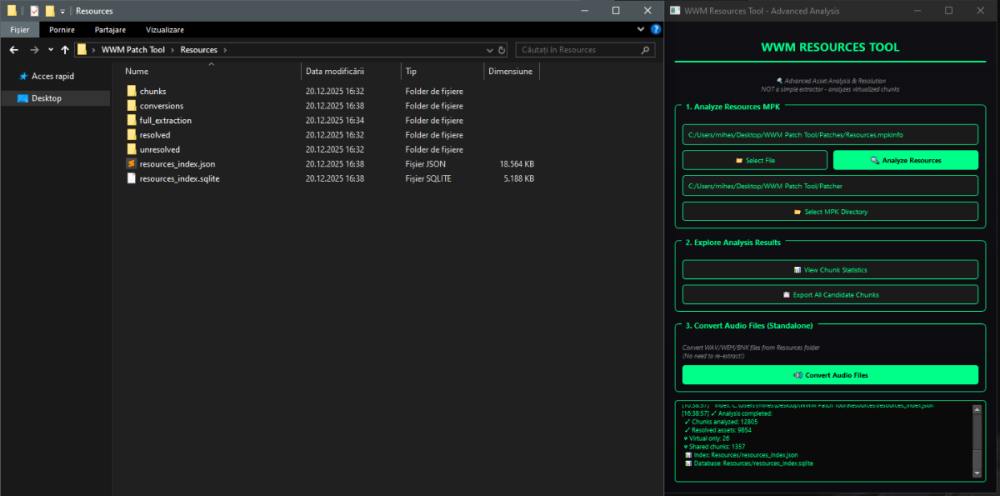

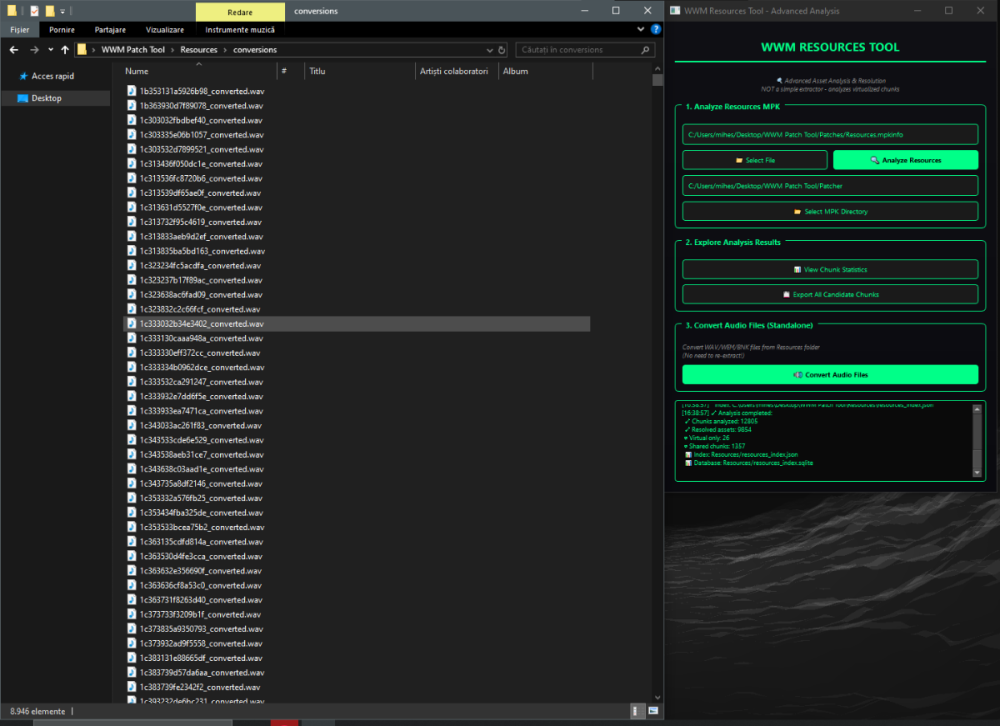

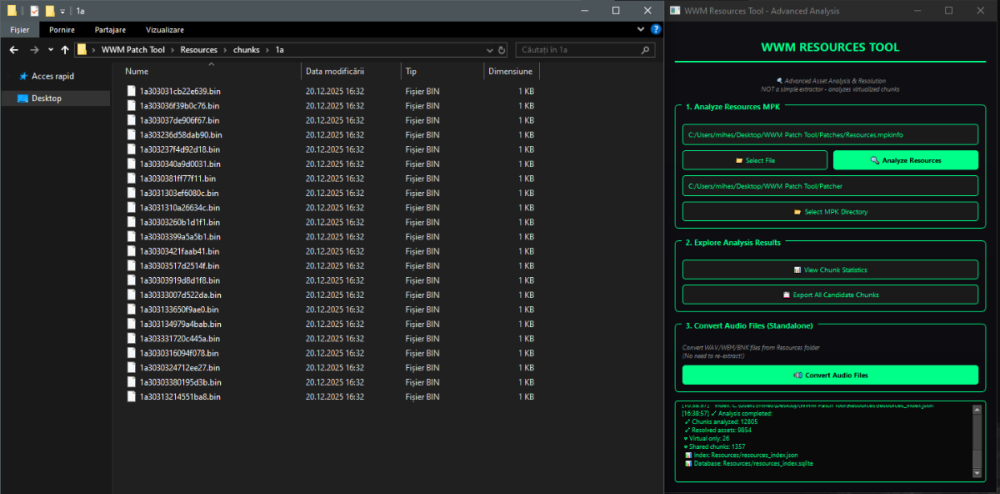

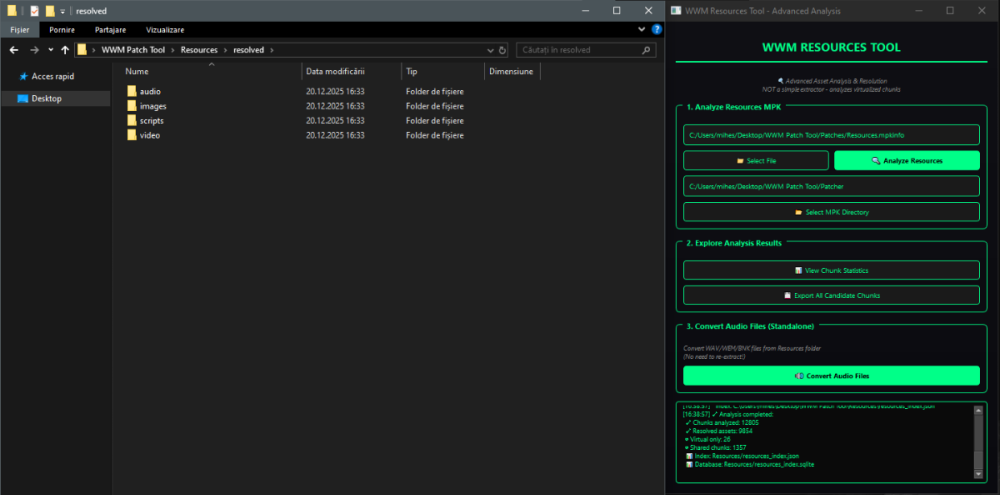

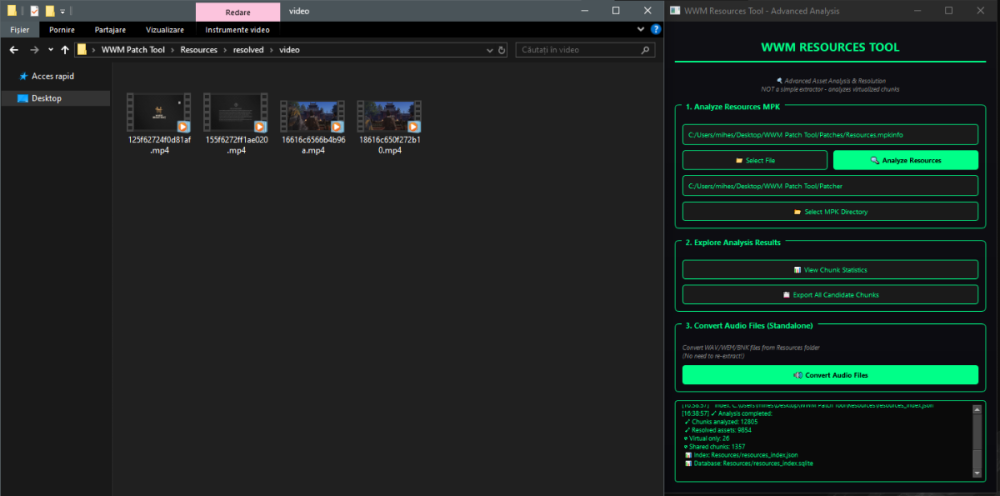

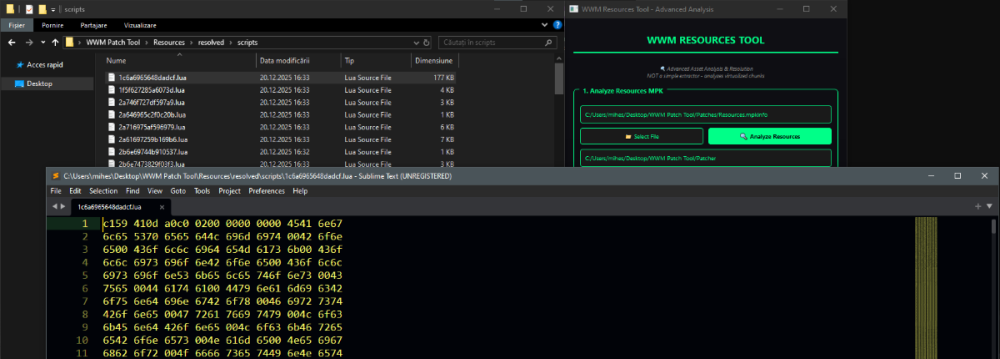

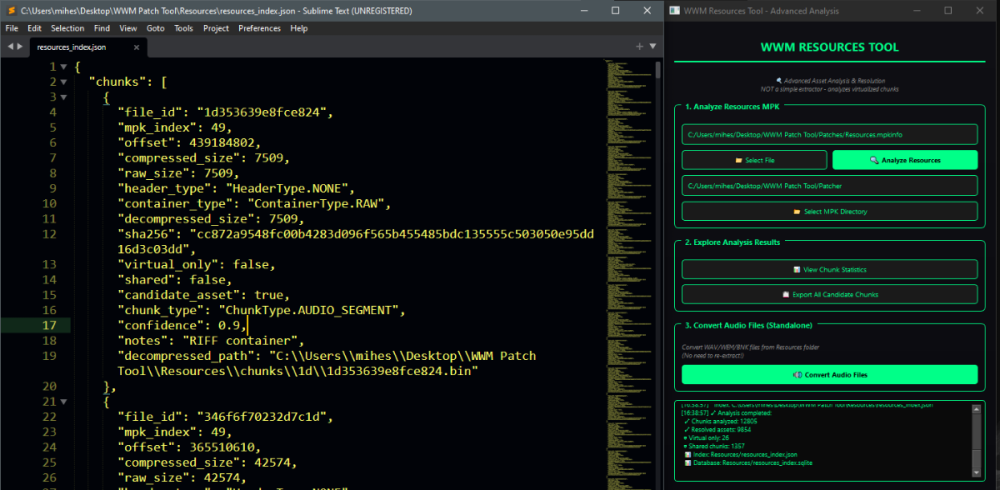

Author: Myro Scope: Educational & research-oriented reverse engineering Status: Ongoing research Introduction: This thread documents an ongoing technical research project focused on analyzing the MPK container system used by Where Winds Meet (WWM). The goal is to understand the structure, behavior, and data flow of the game’s asset containers from a reverse-engineering and archival perspective, strictly for educational and analytical purposes. No proprietary assets, binaries, or tools will be distributed as part of this work. 1. Patch*#.mpk vs Resource*#.mpk — Structural Similarity, Semantic Differences At a container level, Patch#.mpk* and Resource#.mpk* share the same base format: Indexed via mpkinfo / mpkdb Entries contain: FileID Size Offset MpkIndex Use the same compression stack: LZ4 / ZSTD / LZMA EZST (AES-encrypted) However, despite this structural similarity, their extraction semantics differ significantly. 2. Patch*#.mpk — Partial Transparency, Direct Asset Access Patch MPKs behave primarily as delta / override containers: Assets retain near-original offsets Internal file structures remain largely recognizable Minimal indirection compared to Resource MPKs As a result: ~99% of audio assets can be recovered successfully A subset of Lua scripts becomes readable after decompression Non-audio assets (including some images) are present and partially accessible Testing on Patch3100.mpk confirmed that Patch archives are not limited to sound assets. Conclusion: Patch MPKs prioritize update efficiency and fast access, with limited transformation of payload data. 3. Resource*#.mpk — Indirection and Shared Data Resource MPKs represent the primary asset pool and introduce several additional complexities: The Size field often represents a logical or expanded size, not the physically stored payload Naïve extraction pipelines tend to: materialize padding and alignment blocks duplicate shared data blobs inflate archive size artificially (e.g. ~2 GB → tens of GB) This behavior explains why Resource MPKs appear far more “opaque” when extracted without validation. Important observations: Fixed headers such as 02 02 02 01 … frequently represent logical structures (e.g. texture containers with multiple mip blocks) Headers of the form size (4 bytes) + size × 20 bytes often describe lookup tables, not standalone assets Many assets are shared, aliased, or referenced indirectly Conclusion: While Patch and Resource MPKs share the same container format, they do not share the same extraction semantics. Resource MPKs require strict validation, deduplication, and asset-type verification to avoid false positives. 4. Audio Extraction & Tooling Initial testing with Ravioli Game Tools proved sub-optimal for this pipeline: Inconsistent parsing of Patch audio assets Unreliable WAV output in multiple cases Switching to vgmstream yielded significantly better results: Correct parsing of recovered audio data Clean, fully functional WAV output Proper handling of codec structures Current result: Audio extraction and conversion are fully verified and stable. 5. Resource.mpk Extraction – Current Progress Testing on Resources49.mpk (~740.9 MB) produced the following results: Successfully extracted: Audio assets → converted to valid .wav files Over 8,500 audio files identified in a single Resource MPK Video files (.mp4) → fully playable Lua scripts (.lua) → extracted but still encrypted / obfuscated Unresolved: Files detected as images (.png, .jpg, .bmp) Headers contain the string “messiah” Strongly suggests custom packing, encryption, or misclassification Currently not valid image data despite file extensions 6. Current Status ✔️All Patch*#.mpk, Resource*#.mpk, and lt*.mpk archives can be unpacked ✔ Audio assets fully recovered and validated ✔ MP4 video assets fully playable ✔ Lua scripts extracted but not yet readable 7. Next Steps Ongoing research will focus on: Reversing additional transformation layers in Resource*#.mpk Identifying: secondary encryption stages compression chaining block or stream reordering Investigating the custom image container format Further analysis of Lua encryption / obfuscation Automating Patch vs Resource handling as two distinct pipelines Notes on Tooling & Distribution All tools used in this project are: developed entirely from scratch created specifically for WWM research used strictly for educational purposes At this stage: no tools, binaries, or scripts will be released no proprietary assets will be redistributed Closing This thread is intended solely as a technical research and documentation log, not as a release, redistribution, or exploitation guide. In accordance with applicable intellectual property laws and forum policies, no tools, binaries, scripts, or extracted assets will be posted or shared, either publicly or privately. All game data, assets, file formats, and related materials discussed here remain the exclusive proprietary property of NetEase and the developers of Where Winds Meet. Any references to assets or file structures are made strictly for educational, analytical, and research purposes, with no intent to enable misuse, circumvention, or redistribution of protected content. Should any concerns arise regarding compliance or scope, I am fully open to cooperating with forum moderation and adjusting the visibility or content of this thread accordingly. Thank you for your understanding and for supporting responsible technical research. — Myro Below are a few illustrative screenshots and log excerpts from the current stage of the research, provided solely to contextualize the findings outlined above.3 points

-

3 points

-

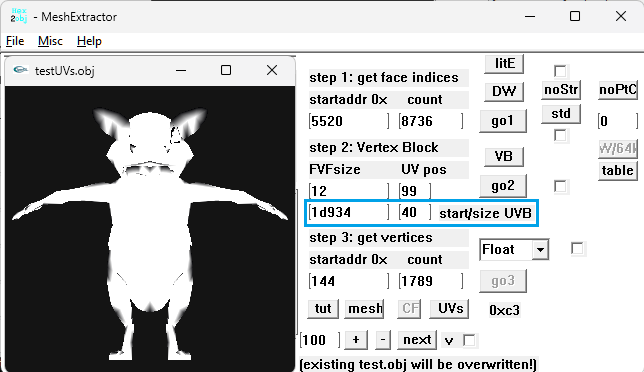

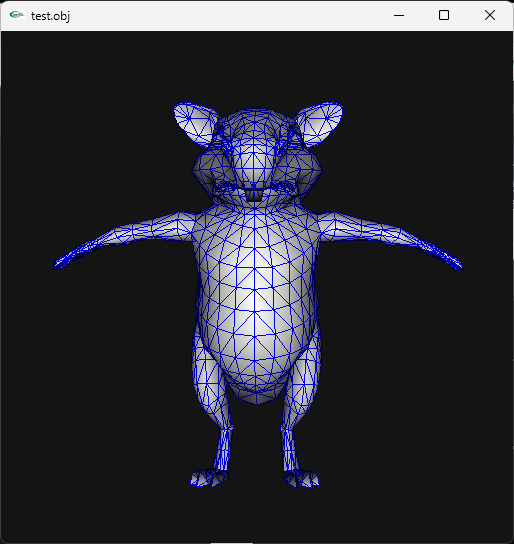

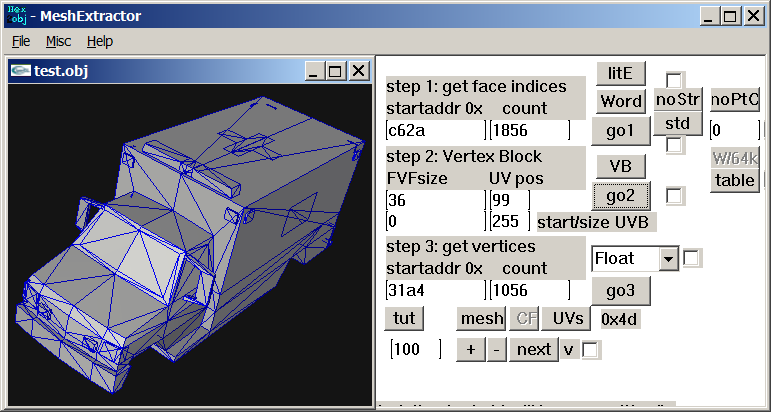

I don't know if there are more models in that unpacked folder, you need to check that so examine each file there. Just remember that characters use shorts in vertices buffer, I think I saw other file with floats but maybe that file is not a character or maybe it is but with floats, I really don't know, lol. Here is the script if you want to test it: fmt_black_ps2_prototype_DB.py3 points

-

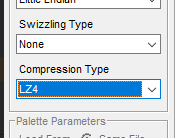

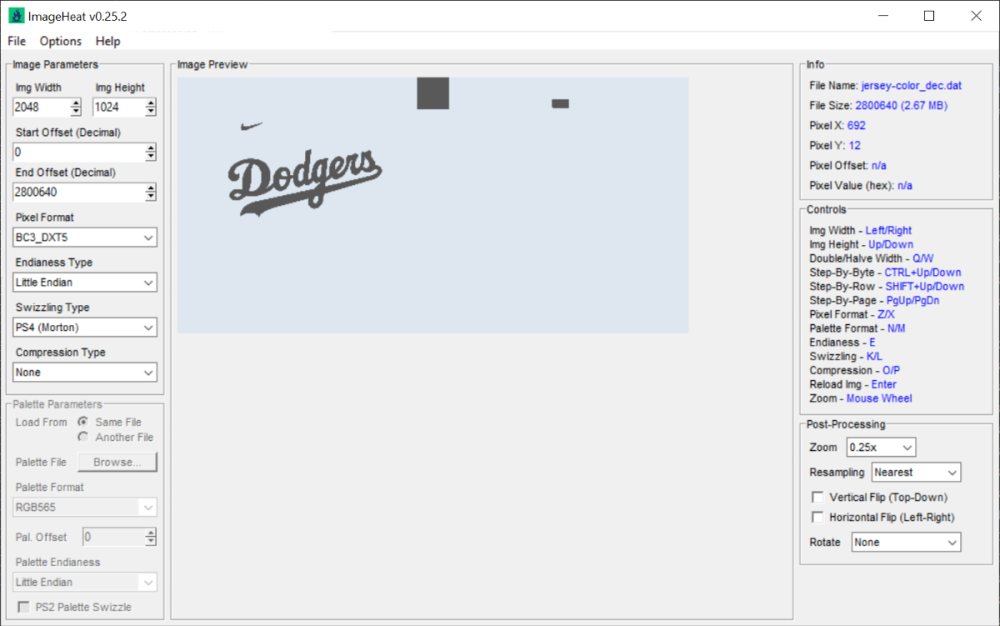

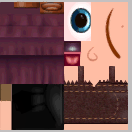

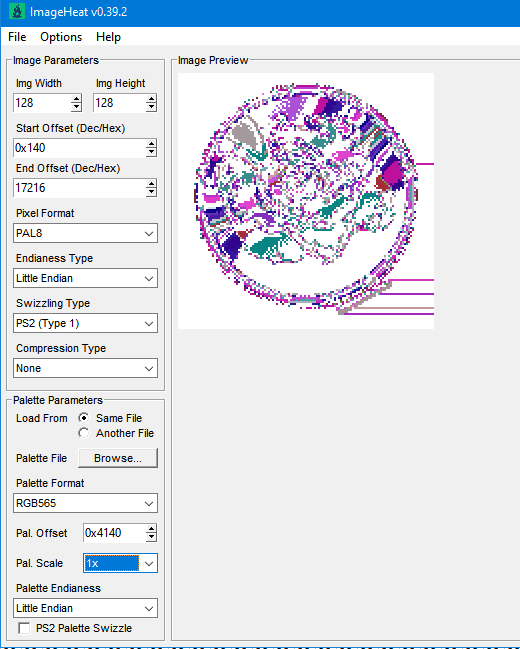

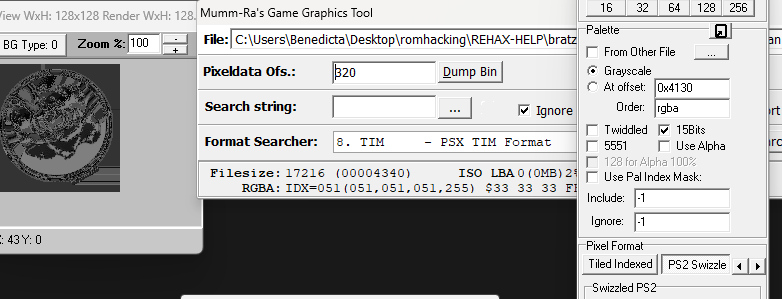

You'd be better using ImageHeat, as it has more options for swizzling, etc. For these, the image is PS2 swizzled, but I can't work out the palette. It doesn't seem to use any of the standard PS2 palette formats, maybe a different swizzling method. This is from "t_compact_rockangelz_closed_00000003":2 points

-

2 points

-

The "repeated offsets" pointing to new blocks indicate that the main BIGFILE.CAT is acting as a master container that holds smaller, self-contained archives inside it. The Master Index: Points to large chunks of data (e.g., "Level 1 Data", "Level 2 Data"). The "New Block": When you go to that offset, you find a new header (signature 01 00 01 00). The Inner Index: This new header has its own list of files. Because this block is treated as a standalone file by the game engine once loaded, its offsets start at 0 (relative to the start of that block), not relative to the start of the whole disc. [ MASTER CAT (BIGFILE) ] |-- Header |-- Index Entry 1: Offset 1000 -> Points to "Level 1 Block" |-- Index Entry 2: Offset 5000 -> Points to "Level 2 Block" | |... [Data at Offset 1000] ... | +-> [ NESTED CAT (Level 1) ] |-- Header (starts at Master Offset 1000) |-- Index Entry A: Offset 10 (Absolute: 1010) |-- Index Entry B: Offset 50 (Absolute: 1050) |-- Data... Why did developers do this? (The Logic) This approach was necessary due to the hardware limitations of the PlayStation 1 (PS1): RAM Constraints: The PS1 has only 2MB of RAM. It cannot keep a massive table of thousands of file offsets in memory at all times. Modular Loading: The game loads the "Master Index" to find the location of the current level's data. It then streams that specific "Block" (Nested CAT) into memory. Relative Addressing: Once the "Block" is loaded into a specific memory address, the game engine reads the inner offsets. Since these offsets are relative to the start of the block (0), the engine can easily calculate memory pointers without needing to know where the block was originally located on the CD.2 points

-

There is the tool PS2JunjouUnpacker-decompressor.zip2 points

-

I've just released new version of ImageHeat 🙂 https://github.com/bartlomiejduda/ImageHeat/releases/tag/v0.39.1 Changelog: - Added new Nintendo Switch unswizzle modes (2_16 and 4_16) - Added support for PSP_DXT1/PSP_DXT3/PSP_DXT5/BGR5A3 pixel formats - Fixed issue with unswizzling 4-bit GameCube/WII textures - Added support for hex offsets (thanks to @MrIkso ) - Moved image rendering logic to new thread (thanks to @MrIkso ) - Added Ukrainian language (thanks to @MrIkso ) - Added support for LZ4 block decompression - Added Portuguese Brazillian language (thanks to @lobonintendista ) - Fixed ALPHA_16X decoding - Adjusted GRAY4/GRAY8 naming - Added support section in readme file2 points

-

2 points

-

It's been a while since this topic is up and i have found a way to deal with this: -Step 1: From the .farc files, use either the tool mentioned at the first post of this thread, or download QuickBMS and use the virtua_fighter_5 bms script i included in the zip file below to extract them into bin files. -Step 2: Download noesis and install the noesis-project-diva plugin (https://github.com/h-kidd/noesis-project-diva/tree/main , or in the included zip file) in order to view and extract the textures/models and use them in Blender or a 3d modeling software of your choice. KancolleArcade.zip2 points

-

1 point

-

1 point

-

1 point

-

UModel is mis-parsing the package, usually because the game’s UE2 fork / package format doesn’t match what UModel expects with the current settings. Umodel to my knowledge works with this game. Your settings are not correct. I will elaborate using your error. FArray::Empty: -116 x 12 UModel tried to allocate an array with -116 elements of 12 bytes each. A negative element count is impossible, so the size overflows to a negative number (-1392) and appMalloc blows up. UModel is reading the wrong structure at that position (e.g., thinks this field is “NumVerts” but it’s actually some other 32-bit value), because the package layout / engine profile doesn’t match what UModel expects for “game=ue2” in this case. The rest of the stack confirms it dies while trying to load a skeletal mesh from that .ukx, not on textures or trivial data. In easy to understand terms. "wrong assumptions about how this game’s .ukx stores its skeletal meshes". Umodel should have a selection on the side for where you can pick game targets. If the game isn't there then you might be out of luck. However, I think this game shares a target with another game. Just trial and error to see if any of them work. Also, if this is not a "PC" version of the game you need to change the "Auto" on the bottom right of the gui to the specific platform. I know the topic says PC. People do make mistakes. Unreal Engine 2 games are rare and few in between. Not many people cared to work with any of them. Even the Wheels of Time game didn't get much investment. Also, turn off all forms of textures/material when first trying with unreal engine 2 games to speed up the process especially with whack a mole trial and error. The only people that would even bother adding support to this game which is extremely unlikely are on Gildor's forum. Again, very few people would ever care for an Unreal Engine 2 or 1 game. I am sorry if this wasn't helpful.1 point

-

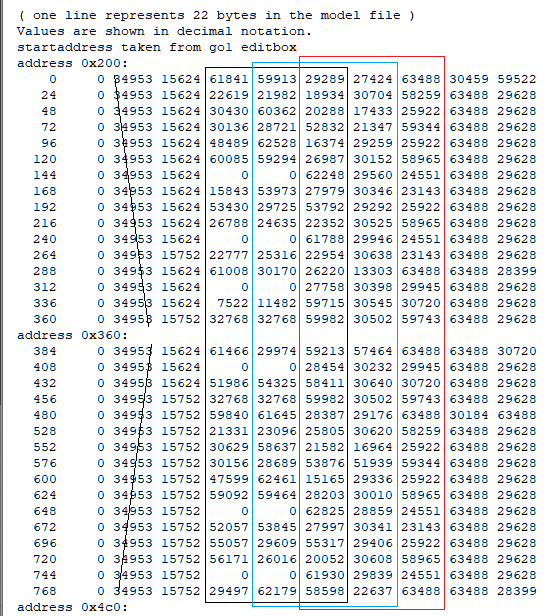

This is actually very helpful. Thank you. I seen the same repeating groups of ~10 unsigned 16-bit value and came to a similar conclusion. The constants like 0, 1632x (~0x3FCx), 21845 (0x5555), 39322 (0x999A), 43691 (0xAAAB), 52429 (0xCCCC), 56798 (0xDDDE), 63488 (0xF800) hex patterns are classic fixed-point / normalized values (fractions of 0xFFFF), which is exactly what you’d expect for compressed curves (rotations, maybe scales or tangents)... I am not 100% sure though. Your step back that you are seeing is because multiple tracks/bones are interleaved via small index tables or there’s a separate header that says “keyframe list starts at X for track Y. I have not figured it out. Also, I am only assuming. It is an educated guess. 2d72 1100 looks more smooth because it is probably for the static pieces. That is also a guess. I don't really know. I haven't found a clean stride/format yet from any area to the extent I was happy with any result. Thankfully your poking proves it isn't baked matrices. However, it might have "junk" data inside of it or switch between the two different formats on the fly which would have a call/read from the game engine the game was made with "elf statements/running". For the last two days I have been looking for a header or track and still haven't found one. I don't know what the meaning "definition" for each of the 10 values per entry (time? quat? s,t,r? tangents? flags?). Also haven't figured out how these numbers convert back to usable floats/matrices for a bone rig. You found the right haystack to be looking for the needle here and I thank you for this. The repeated 16-bit values like 0x3FCx, 0x5555, 0xAAAA, 0xF800, and the way they change over “time” gave light on this. That matches my expectation that GARO is storing proper animation curves rather than just baked matrices which most people would have assumed because of the static model additive animations. Sorry for repeating myself here. I have a client for this game that is CONSTANTLY having me repeat over and over some of this information and it started to turn into habit. Going back to the “step back” jumps you pointed out I believe show the timeline might be split into several blocks (per-bone or per-channel segments) instead of one clean linear stream, which is probably why tools that only understand standard RWANM fail on this game. I don’t have much experience with your viewer. The tools I use are far different and I have been doing a lot of direct hex poking along with using renderware tools, so I’m still trying to figure out the parameters you showcase here. When you mention 2d72 1100 in step 3, is that essentially a stride / FVF setup you’re using to visualize the data as a point cloud? That was my original assumption but now I am second guessing myself. Do you have any thoughts yet on how those 10-value records break down (e.g. time + rotation + something else), or on where the per-track headers might sit? You helped a lot with this and I am very thankful.1 point

-

As per i am a good for nothing in 3d model issues., i can´t tell if te unpack works, but studying DB, i can see 2 types of TOC. Attached the py script, if someone wants to take a look. just drop the files DB or bins in .py or double click in .py black_ps2_unpack.py1 point

-

Hmm, you know there's some hero here who got the trick for PS2? I couldn't get over myself to make use of his dll but it's my firm decision to tackle this and I'll tell the result as soon as I get it working for me. (If it works there's several dozens of PS2 projects I'd need to correct and I fear the amount of work, somehow.) (It's my bet that it has to do with changing the face winding and I'd like to find it out by myself instead of using other people's dll.)1 point

-

1 point

-

I am uploading all animations for the character gar here. I should have done that in the previous post but it slipped my mind. So now you have a complete set of animations for one character. gar.rws.dec_be_-15_extracted.rar1 point

-

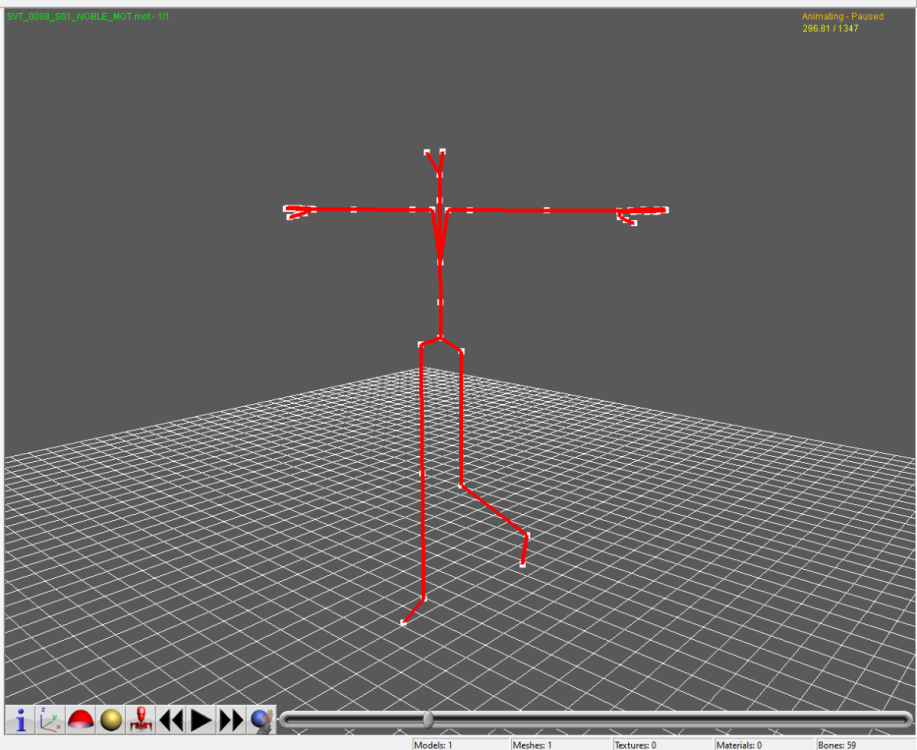

The armature is with the model. When you import the DFF using DragonFF in blender it will have the armature with it. The bones are very small. You can use the side menu GUI to better find the bones. Models and Skeletons are 100% workable. It is the custom renderware animation format that is the problem here. In the files I uploaded in the topic post, there should be two rwanm files. Those are some of the animations. If you have the game I can give you one of my scripts that will extract everything for you so you can go through all the files for testing. I tried figuring this out through the elf file. Let me know if you want my script so you can poke at all the animations in the game. There are two different sets of animations per character even if they don't have a weapon. The animations specific for weapons would be much easier to look at since they are smaller. There are mot files that give some additional information but for the most part they are irrelevant. Mot files for this game is more of a listing of animations and positioning for static model pieces. Not the main animations. I am uploading the mot file so you can see it is mostly just for static pieces. The txt file for the character abbreviated with gar that states all the files for him and the gmobj data base text for it as well. I used a renderware tool "forgot the name of it" that allows me to look at how the files are structured. Sending you a section_tree.txt that was made by that program. I was offered a job to make a modding tool for this game that would include editing animations..... As it stands, I don't believe I will be able to finish it. Will just make everything I have for this game public if that happens. Adding two scripts as well. The gar_rws_test.py script does a simple test on the files for the correct compression to decompress the files so you can get everything basically. The garou_rws_extractor is a slightly upgraded version that needs work and is for extracting the models/animations. On aluigi's website there is a bms script called ougon_kishi_garo. That would be your first step to getting all the files out. Then you will have to use the scripts I posted here. The unfinished one will have errors. The test one will work better. gar_mot.rar gar_files_txt.rar gmobj_DB_Files_txt.rar Section_Tree_txt.rar gar_rws_test.py Garou_RWS_extractor.py1 point

-

Drag and drop .resources files into this script, it will extract all of the assets from there (.texdb files not implemented yet). Nest steps: .MD6MESH - https://reshax.com/topic/18614-wolfenstein-the-new-colossus-md6mesh-files/ .BIMAGE/.TGA - https://reshax.com/topic/18617-wolfenstein-the-new-colossus-bimage/#comment-101572 .PACK (.WEM .BNK) - https://reshax.com/topic/18618-wolfenstein-the-new-colossus-pack-containers-wem-and-bnk-files/#comment-101573 wolfenstein_2_resources.py wolfenstein_2_resources.zip1 point

-

if there are any unreleased models they should be pretty easy to find, afaik most(probably all?) of the package files have the same checksums as the retail game (dvddata\aid\zpackage) - i cant say ive tested every single one but of the ones i have that has been the case. there are some other assets that are in the file system but i dont know if any are different, eg dvddata\aid\bfdmodel\characters\SinglePlayerVehicles\MachineGunTurret is actually the model shown in my original post, theyre not compressed so you can easily use a texture viewer to see if anything looks interesting (almost all textures are DXT1/DXT5) sometimes theyre just the same model as the original but with a larger texture, eg 512x512 instead of 128x128, the demo version of conker is an example of this as well, his texture on the demo is higher res than the retail game. if anything most of the "easter eggs" are probably buried in the original/actual game and just go un noticed, for example in one of my chats with Uber Winfrey he pointed out Berrys dresser has this ontop of it: or the gargoyle statues: safe to say the rareware guys had an interesting sense of humor. ------------------------------------------ as for any tool, the closest thing to it would be Uber Winfrey's blender script. Most of my work has just been around documenting the structs/data, and while extracting some of the raw data is easy enough there is still a ton of stuff that needs to be figured out like for example Animation data is still WIP and would probably need to translate the shaders for the model to look right eg Fur, Cloth, Shine etc since it uses old VSH/PSH shaders instead of HLSL, they're pre compiled so they need to be decompiled from binary data, figuring out the shader params and what/how they're used for etc. these aren't unsolvable problems but its definitely outside of my skillset which means even if i find time for it its going to be slow.1 point

-

When debugging function 0010D850, I found these filenames in the t0 register (after the decryption result; it also loads the filenames of files with the .JRS header into memory): LOGO.JRS MAINSCRIPT.JRS SCENARIO.JRS SCENARIO00_ROMA.JRS SCENARIO00_ROMA_TGS.JRS SCENARIO00_ROMA_TRIAL.JRS SCENARIO01_EGOI.JRS SCENARIO01_EGOI_TGS.JRS RES/SCRIPT RES/SCRIPT/SC RES/SCRIPT/SC/00_ROMA RES/SCRIPT/SC/01_EGOI RES/SCRIPT/SC/02_TERO RES/SCRIPT/SC/10_KAISOU SCRIPT.SVL etc... The flow is: SCRIPT.PTD (disk) → [AT GAME STARTUP] → Decompress YKLZ → Decrypt .JRS → Load into PS2 memory --- Knowing this, I set a read/write/change breakpoint in the PS2 debugger after the initial file-loading process in RAM. In this case, I set it at 0056AC20 (which corresponds to `SCENARIO00_ROMA.JRS`), and as expected, this is the first dialogue shown in the Romantica route. 0011A010 (game_load_resource) ↓ 0010DED0 (file_system_wrapper) ↓ 0010DB58 (process_filename) → Converts "SCENARIO00_ROMA.JRS" to something ↓ 0010DC48 (binary_search) → Searches table using 0018E4FC (optimized strcmp) ↓ 0010DD98 (get_file_info) → Returns data_ptr (already in memory?) ↓ RETURNS to 0011A010 ↓ ...... 00106800 (PROCESS .JRS?) → UNKNOWN ↓ 001068D0 (FINISHES?) → Another unknown --- FUNCTIONS 0010DC48 – Binary Search - Searches for files in a master table sorted alphabetically (only to verify file calls are correct) 0010DD98– Get File Info - Returns data_ptr (already in memory), size, and flags 0010DED0 – File System Wrapper - Orchestrates the search and retrieval process 0011A010 – Game Resource Loader - Manages memory pool - Calls the entire system --- The only thing left to do is trace the flow and see what gets called after the .JRS file is loaded (which is obviously to process and render the text). With the information on how the game processes and displays text, we can process the previously extracted files from script.ptd to view the Japanese dialogues. I need to rest my brain… haha1 point

-

1 point

-

1 point

-

animewwise just closes instantly if you try it on there. Regular wwise works https://github.com/mortalis13/Wwise-Unpacker BeyondToolsMod-net9.zip1 point

-

1. FILE STRUCTURE (script.ptd) | Offset | Size | Content | |--------|------|---------| | `0x00` | 32 bytes | **Header** with signature "PETA" (50 45 54 41) | | `0x20` | 256 bytes | **SBOX** (Substitution Box for decryption) | | `0x120` | 1,728,224 bytes | **Encrypted data** | 2. DECRYPTION & DECOMPRESSION PROCESS script.ptd → Decryption → YKLZ/LZSS → Binary Script 249 YKLZ/LZSS compressed sections found in decrypted data YKLZ/LZSS decompression works correctly 3. DECOMPRESSED SCRIPT ANALYSIS Header identified: `純ロマ = "JUNROMAN" Current issue:Shift-JIS Japanese text not found - appears encrypted even after decompression Example decompressed output: 純ロマ####@###シg##@###ト###4.##X)##イ*##4... 4. SUSPECTED SCRIPT STRUCTURE?? [Shift-JIS Text] [Padding "####"] [Command "@" + 3 params] [More Text]... Parsing logic??? 1. Parser detects `@` (0x40) → Command indicator? 2. Reads next 3 bytes → Command parameters? 3. Processes Shift-JIS text (1-2 byte characters)? 4. Skips padding `#` (0x23) → Alignment bytes 5. GAME EXECUTION FLOW (pcsx2 debugger) fcn.0010e048 (Interpreter) ↓ fcn.0010ded0 (Parser) ↓ fcn.00119fc0 / fcn.0011a0ec (Handles dialogues?) ↓ fcn.00106800 (Context configuration) ↓ fcn.001068d0 (Text rendering) ↓ fcn.0016e400 / fcn.0016e4a8 (Unknown final processing) 6. CURRENT PROBLEM The text appears garbled/encrypted even after YKLZ decompression....... Additional encryption layer** after LZSS decompression??1 point

-

*.abc is font map. Maybe you can add some characters in it. Now for the texture. Original is 32 bit rgba, yours is DXT5 which is not exact as org. Also i noticed you didn't change alpha channel of the char which is crucial for correct display.1 point

-

D:\88>py fgo_arcade_mot_parser.py --mot CHARA_POSE_SVT_0088_S01.mot --skl SVT_0088_S02.skl --bone arm_r --frames 100 0 0.000007 0.402854 0.002541 0.915261 1 0.000120 0.975284 0.052175 -0.214706 2 0.069035 0.000000 0.000000 -0.997614 3 0.000000 -0.276927 -0.000008 -0.960891 4 0.000000 -0.736034 -0.000022 -0.676945 5 0.000000 0.738174 0.000023 0.674610 6 0.000000 0.848413 0.000028 0.529335 7 0.000000 0.998036 0.000034 0.062648 8 0.000000 0.955551 0.000035 0.294825 9 0.000000 0.787267 0.000030 0.616612 10 0.000000 0.853046 0.000034 0.521836 11 0.000000 0.978375 0.000040 0.206837 12 0.000000 0.950974 0.000040 -0.309270 13 0.000000 0.988709 0.000043 -0.149846 14 0.000000 0.955742 0.000043 0.294205 15 0.000000 0.335155 0.000015 0.942163 16 0.000000 0.042013 0.000002 0.999117 17 0.000000 0.055729 0.000004 0.998446 18 0.000000 0.600873 0.000037 0.799344 19 0.000000 0.431736 0.000025 0.902000 20 0.000000 -0.474964 -0.000031 0.880005 21 0.000000 0.005943 0.000002 0.999982 22 0.000000 0.472832 0.000034 0.881153 23 0.000000 0.562161 0.000044 0.827028 24 0.000000 0.544627 0.000045 0.838678 25 0.000000 0.498805 0.000045 0.866714 26 0.000000 0.663745 0.000065 0.747959 27 0.000000 0.598301 0.000061 0.801271 28 0.000000 0.237227 0.000025 0.971454 29 0.000000 -0.314591 -0.000039 0.949227 30 0.000000 -0.813891 -0.000100 0.581017 31 0.000000 -0.989480 -0.000131 -0.144672 32 0.000000 -0.995146 -0.000143 -0.098406 33 0.000000 -0.920574 -0.000144 0.390568 34 0.000000 -0.220975 -0.000032 0.975279 35 0.000000 0.662326 0.000116 0.749216 36 0.000000 0.995018 0.000179 -0.099691 37 0.000000 0.960525 0.000182 0.278192 38 0.000000 0.592240 0.000118 0.805762 39 0.000000 -0.021210 -0.000011 0.999775 40 0.000000 -0.047665 -0.000008 0.998863 41 0.000000 0.314114 0.000081 0.949385 42 0.000000 0.421506 0.000118 0.906826 43 0.000000 0.740391 0.000225 0.672176 44 0.000000 0.996086 0.000322 -0.088392 45 0.000000 0.610455 0.000199 -0.792051 46 0.000000 -0.147819 -0.000063 -0.989014 47 0.000000 -0.758611 -0.000329 -0.651544 48 0.000000 -0.633201 -0.000271 -0.773987 49 0.000000 0.144974 0.000071 -0.989435 50 0.327163 0.016943 0.000008 -0.944816 51 0.000075 0.714520 0.031271 -0.698916 52 0.000000 0.231376 0.000223 0.972864 53 0.000000 0.589910 0.000409 0.807469 54 0.000000 0.814108 0.000585 0.580713 55 0.000000 0.510365 0.000383 0.859958 56 0.000000 0.119739 0.000083 0.992805 57 0.000000 -0.291270 -0.000258 0.956641 58 0.000000 -0.409780 -0.000399 0.912184 59 0.000000 -0.622229 -0.000662 0.782835 60 -0.000002 -0.941428 -0.001064 0.337213 61 -0.000002 -0.966760 -0.001144 0.255684 62 -0.000002 -0.940971 -0.001175 0.338484 63 -0.000002 -0.957375 -0.001293 0.288845 64 -0.000003 -0.922744 -0.001354 0.385411 65 -0.000003 -0.793428 -0.001291 0.608662 66 -0.000004 -0.998795 -0.001788 -0.049037 67 -0.000003 -0.836432 -0.001628 -0.548068 68 -0.000004 -0.931258 -0.001932 -0.364356 69 -0.000005 -0.993580 -0.002216 -0.113106 70 -0.000005 -0.983486 -0.002361 0.180970 71 -0.000005 -0.812511 -0.002115 0.582941 72 -0.000005 -0.710143 -0.002080 0.704054 73 -0.000006 -0.820454 -0.002625 0.571706 74 -0.000008 -0.999984 -0.003401 0.004570 75 -0.000007 -0.792709 -0.002817 -0.609593 76 -0.000002 -0.308776 -0.001126 -0.951134 77 0.000004 0.259678 0.001201 -0.965695 78 0.000009 0.733887 0.003312 -0.679263 79 0.000013 0.975185 0.004912 -0.221336 80 0.000014 0.997418 0.005142 0.071628 81 0.000013 0.998641 0.004884 0.051882 82 0.000012 0.999473 0.004623 0.032116 83 0.000012 0.999914 0.004360 0.012337 84 0.000011 0.999964 0.004096 -0.007446 85 0.000010 0.999622 0.003830 -0.027227 86 0.000009 0.998889 0.003563 -0.046997 87 0.000009 0.997764 0.003294 -0.066748 88 0.000008 0.996250 0.003024 -0.086474 89 0.000007 0.994345 0.002753 -0.106165 90 0.000007 0.992051 0.002481 -0.125815 91 0.000006 0.989368 0.002208 -0.145416 92 0.000005 0.986298 0.001934 -0.164960 93 0.000004 0.982843 0.001659 -0.184439 94 0.000004 0.979002 0.001383 -0.203846 95 0.000003 0.974778 0.001107 -0.223173 96 0.000002 0.970173 0.000831 -0.242413 97 0.000001 0.965188 0.000554 -0.261558 98 0.000000 0.959825 0.000277 -0.280601 99 0.000000 0.954086 0.000000 -0.2995341 point

-

Introduction This question is probably the most asked one and it makes total sense why, the answer unfortunatelly is pretty generic in it's nature, it depends but if we dive deeper turns out it's not as hard as you think might be here is why I personally think this way... Reverse engineering the game, specifically for asset extraction, requires 4 different steps to create: 1. Extract Game Archive, (Reverse enigneer game's extractioon method, spot a compression method, decrypt xor keys (Rarely)) 2. Reverse Enigneer Binary 3D model files 3. Reverse egnineer Binary Texture files 4. Reverse egnineer the Binary Audio files While those are not extreamly hard to topics to learn, it can took some time to figure them out yourselfe. There are numereous ways to reverse engineer those tasks, you can do it manually via binary inspection, or by using, exploits or even by using leaked Beta Builds or reloaded versions, that often are packed with .PDB files (debug symbols) that can be loaded into Ghidra for near source code, code debugging experience. While the best one is still a binary inspection, there are already dedicated tools for this, for inspecting and extracting manually sample by sample, but currently in time being there aren't any automated programs for this so you must choose to rely on Python scripts. For extracting game archives I recommend QuickBMS for model extraction Model Researcher for Textures Raw Texture Cooker and Audacity for Audio... By extracting all of the game content don't forget about the Headers and Magic Numbers, No matter how Payload loos like, the headers are always the same and often contain super usefull info with them. Graphic Debuggers vs Reverse Engineering This is hot topic is the most intersting one, since yes, Dumping 3D Models and Textures + Recording the Audio's using Graphic Debuggers like RenderDoc, nvidia Nsight Graphics and NinjaRipper Exploit obviously way, way easier than any reverse engineering the proprietary files, it can be done in few minutes vs it can took a few days to mounths in Reverse Engineering so the difference is huge sometimes, hovewer after you reverse engineered the binary files you have access to extreamly fast asset "ripping" speeds without relying on the drawcalls and of course you have access to all of the cut contents and very very easier and faster Map/World "ripping". There are obviously upsides and downsides in both of the methods, I personally recommend using exactly what you need for, if there are already scripts for extracting and maybe even converting some binary proprietary assets then go for it!1 point

-

1 point

-

1 point

-

I think you've already set up the aes-key and the correct version of Octopath Traveler 0 (5.4) on fmodel. You need to add the usmap file, which I attached. To do this, go to Settings->Mapping File Path and select the game's usmap file. Then, in Fmodel, navigate to the correct folder (e.g., Content/Local/DataBase/GameText/Localize/EN-US/SystemText/GameTextUI.uasset) and export the file to .json, with right-click and then Save properties (.json).1 point

-

here you go : 0x09D01B34E843AC6BE08BD854B3CEDA0C4CA52281C08B02BF827F3ADA77173BCA1 point

-

1 point

-

When you choose "uncompressed" the file size should be bigger than for a DXT5 file. I'd try some other tool, maybe Gimp, for testing.1 point

-

ImageHeat v0.39.2 (HOTFIX) https://github.com/bartlomiejduda/ImageHeat/releases/tag/v0.39.2 Changes: - Fixed hex input - Changed endianess and pixel formats bindings (required for hex input fix)1 point

-

1 point

-

1 point

-

I don’t know if this has any effect. https://web.archive.org/web/20230000000000fw_/https://www.zenhax.com/viewtopic.php?t=35471 point

-

1 point

-

I've moved this topic to graphic file formats, rather than 3d Models where you posted it. Also, please don't start another post for the exact same thing. I've deleted your other post as a duplicate. Please read the rules before posting again.1 point

-

The game have update and they hard-coded new text in .mpk lua script, because some words have many different meaning depend on the context. With packet sniffing, i observed that the game download some .pak file from easebar.com and put them in .mpk file. These file are encrypted.1 point

-

They are still pck files. I can find many wwise .bnk files in AA462ABBFEC319B665666E14585F97D9_EndfieldBeta with ravioli explorer , RavioliGameTools_v2.10.zip (if you need)and I think quickbms also work. By the way I guess the really wem audio files are in another pck files. there are over 5000 bnk files in AA462ABBFEC319B665666E14585F97D9_EndfieldBeta. That means the bnk files may not store any actual audio files1 point

-

Please use this updated script to repackage the data file. If you have any questions, please let me know so that other capable people or you can continue to process these .pxc files yourself # Update the decompression of pxc file(script 0.2) get FILE_SIZE asize xmath TOC_PTR "FILE_SIZE - 8" goto TOC_PTR get TOC_OFFSET long goto TOC_OFFSET get FILE_COUNT long for i = 0 < FILE_COUNT get OFFSET long get SIZE long get COMP_FLAG byte get NAME_LEN short getdstring NAME NAME_LEN get UNK long savepos TOC_ENTRY_POS if COMP_FLAG == 0 goto OFFSET getdstring MAGIC 4 if MAGIC == "PxZP" comtype zlib get UNCOMP_SIZE long get COMP_SIZE long savepos DATA_START clog NAME DATA_START COMP_SIZE UNCOMP_SIZE else log NAME OFFSET SIZE endif else goto OFFSET get MAGIC long get UNCOMP_SIZE long get COMP_SIZE long savepos COMP_START clog NAME COMP_START COMP_SIZE UNCOMP_SIZE endif goto TOC_ENTRY_POS next i pxc.zip1 point

-

use my plugin for Noesis arc_zlib_plzp_lang_vfs.py (which I mentioned earlier) it recursively unpacks all files, at the output you will get *.png, *.wav, *.pm3, *.vram, *.text, *.pvr and e.t.c You can also find a link to the plugin for 3D models *.vram above in the same topic. (*.pvr can open in PVRTexTool)1 point

-

Mostly, PNG files are decompressed, so it's fairly easy to edit and reimport them. However, you need to compress your PNG files using the site iLoveIMG. For example, if the original PNG is 10 KB, your PNG must be 10 KB or less, so you will need to compress it on the site. I’ve attached 3 files: Two BMS scripts: One script will unpack the data, decompressing all files. The other script will unpack the file without decompressing it (this is the one you should use for reimporting; reimport with -r, not reimport 2 in quick bms). A Python script (.py): This program decompresses and compresses zlib files individually. I have set a compression level to reduce the file size even further. Use this if you need to handle compressed files. zlib_DeCompressor.py BMS.ZIP1 point

ResHax.com: Empowering Curious Minds in the World of Reverse Engineering

Delving into the Art of Code Unraveling: ResHax.com - Your Gateway to the Thrilling World of Reverse Engineering, Where Curiosity Meets Innovation!

.thumb.png.08ad2d1b17847ccbf06b8097d586bddf.png)

.png.10d8f98d25c93338d0d9941a56af370e.png)