Leaderboard

Popular Content

Showing content with the highest reputation since 12/13/2025 in all areas

-

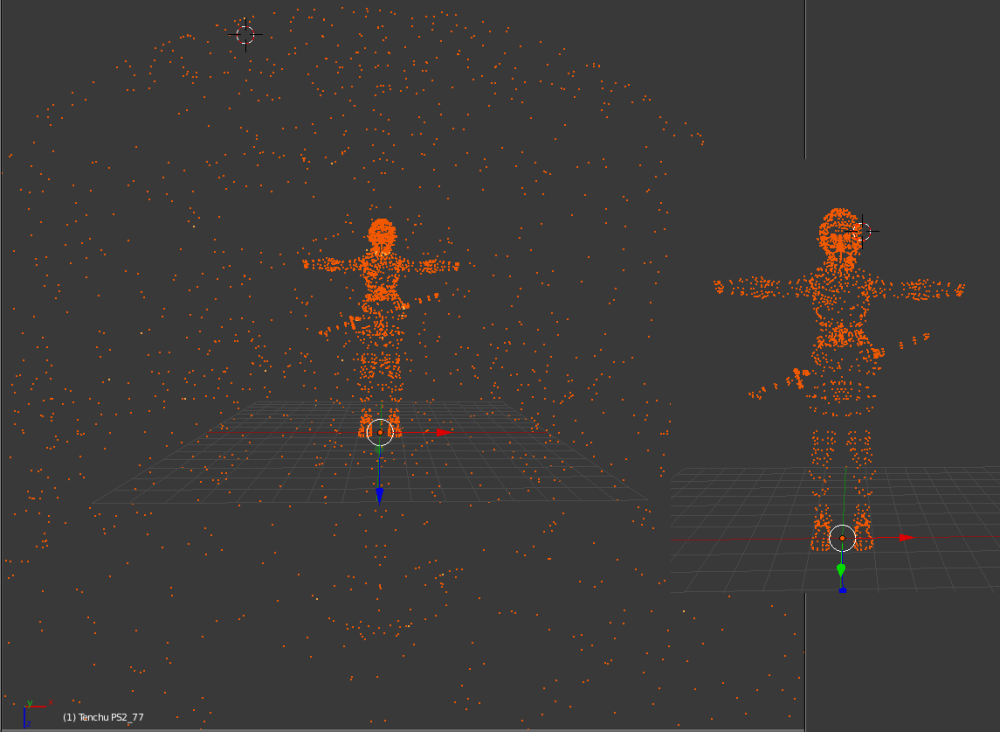

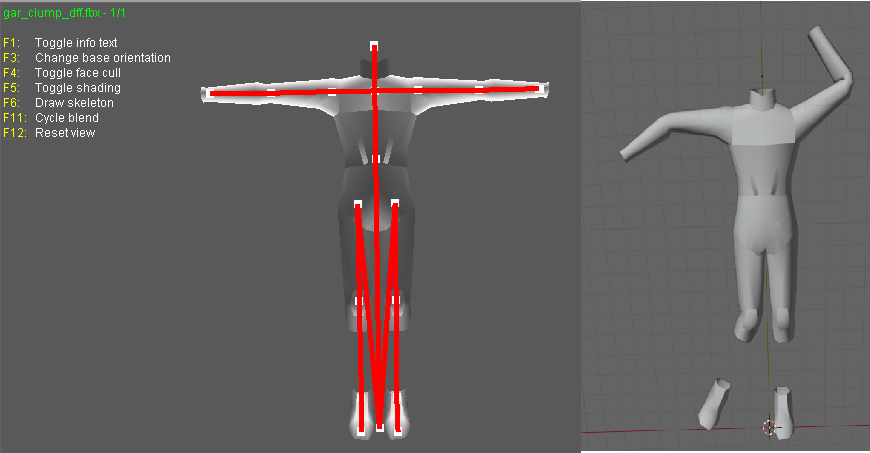

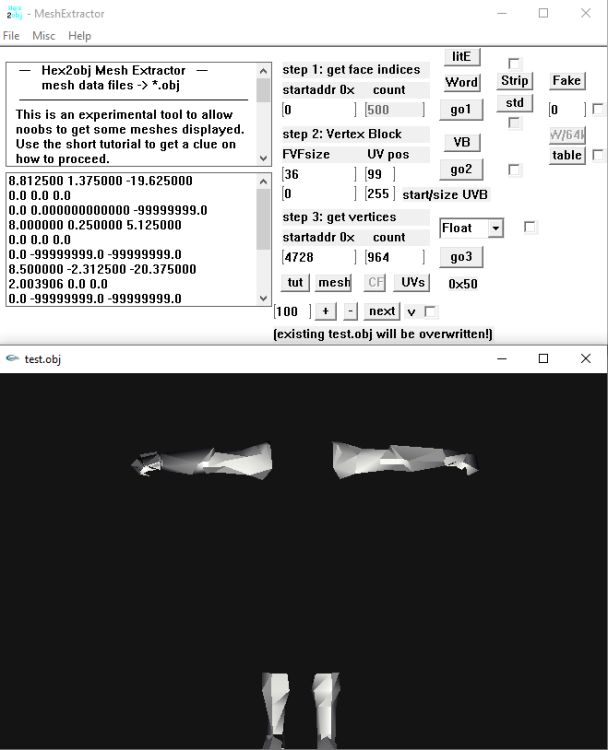

I don't know if there are more models in that unpacked folder; you need to check, so examine each file there. Just remember that characters use shorts in the vertex buffer. I think I saw another file with floats, but maybe that file is not a character, or maybe it is but uses floats. I really don't know, lol. Here is the script if you want to test it: fmt_black_ps2_prototype_DB.py

3 points

-

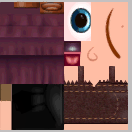

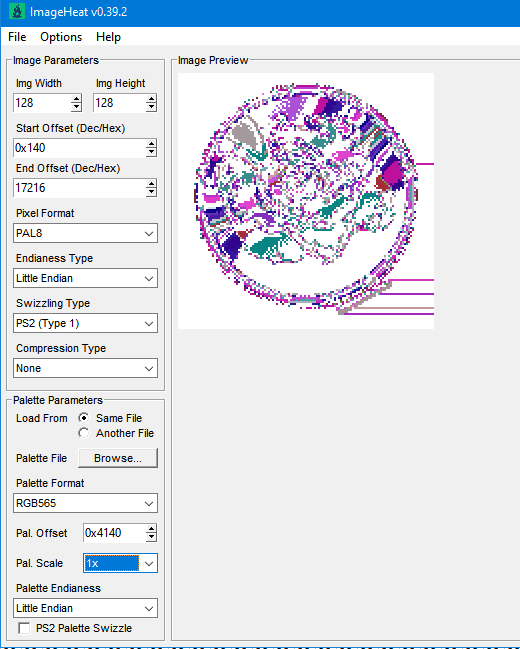

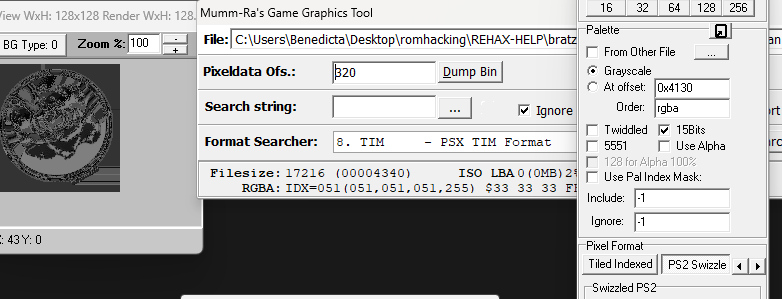

You'd be better off using ImageHeat, as it has more options for swizzling, etc. For these, the image is PS2-swizzled, but I can't work out the palette. It doesn't seem to use any of the standard PS2 palette formats; maybe it uses a different swizzling method. This is from "t_compact_rockangelz_closed_00000003":

2 points
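
Before trying more exotic layouts, it may be worth ruling out the most common PS2 8bpp palette arrangement: within each run of 32 colors, entries 8-15 and 16-23 are swapped, and alpha uses 0x80 as fully opaque. A minimal sketch of that standard reorder follows (function names are hypothetical). Since the post says this texture does not seem to match the standard formats, treat it as a baseline check, not a fix.

```python
def unswizzle_ps2_clut(palette):
    """Undo the common PS2 8bpp CLUT ordering (256 entries).

    Within each run of 32 entries, entries 8-15 and 16-23 are swapped,
    which is the same as swapping bits 3 and 4 of the index. The swap is
    its own inverse, so applying it twice restores the original order.
    """
    if len(palette) != 256:
        raise ValueError("expected a 256-entry palette")
    out = [None] * 256
    for i, color in enumerate(palette):
        # Swap bit 3 (0x08) and bit 4 (0x10) of the index.
        j = (i & ~0x18) | ((i & 0x08) << 1) | ((i & 0x10) >> 1)
        out[j] = color
    return out


def scale_ps2_alpha(a: int) -> int:
    """Map PS2 alpha (0x80 = opaque) to the usual 0-255 range."""
    return min(a * 255 // 128, 255)
```

If the image still looks wrong after this reorder (and after testing with and without it), the palette is probably stored in some other, game-specific order.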

-

The "repeated offsets" pointing to new blocks indicate that the main BIGFILE.CAT is acting as a master container that holds smaller, self-contained archives inside it.

The Master Index: points to large chunks of data (e.g., "Level 1 Data", "Level 2 Data").
The "New Block": when you go to that offset, you find a new header (signature 01 00 01 00).
The Inner Index: this new header has its own list of files. Because this block is treated as a standalone file by the game engine once loaded, its offsets start at 0 (relative to the start of that block), not relative to the start of the whole BIGFILE.CAT.

[ MASTER CAT (BIGFILE) ]
|-- Header
|-- Index Entry 1: Offset 1000 -> Points to "Level 1 Block"
|-- Index Entry 2: Offset 5000 -> Points to "Level 2 Block"
|
|... [Data at Offset 1000] ...
|
+-> [ NESTED CAT (Level 1) ]
    |-- Header (starts at Master Offset 1000)
    |-- Index Entry A: Offset 10 (Absolute: 1010)
    |-- Index Entry B: Offset 50 (Absolute: 1050)
    |-- Data...

Why did developers do this? (The Logic)
This approach was necessary due to the hardware limitations of the PlayStation 1 (PS1):
RAM Constraints: The PS1 has only 2 MB of main RAM. It cannot keep a massive table of thousands of file offsets in memory at all times.
Modular Loading: The game loads the "Master Index" to find the location of the current level's data. It then streams that specific "Block" (Nested CAT) into memory.
Relative Addressing: Once the "Block" is loaded at a specific memory address, the game engine reads the inner offsets. Since these offsets are relative to the start of the block (0), the engine can easily calculate memory pointers without needing to know where the block was originally located on the CD.

2 points
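
The relative addressing above comes down to one addition: absolute offset = block offset + inner offset. A minimal sketch, assuming only what the post states (the nested header starts with 01 00 01 00 and inner offsets are block-relative). The inner index entry layout isn't given, so the inner offsets are passed in as a plain list, and both function names are hypothetical.

```python
NESTED_SIGNATURE = bytes([0x01, 0x00, 0x01, 0x00])


def is_nested_cat(data: bytes, block_offset: int) -> bool:
    """True if a nested CAT header (01 00 01 00) starts at block_offset."""
    return data[block_offset:block_offset + 4] == NESTED_SIGNATURE


def to_absolute(block_offset: int, inner_offsets):
    """Convert block-relative offsets to offsets within BIGFILE.CAT."""
    return [block_offset + off for off in inner_offsets]
```

With the numbers from the diagram, `to_absolute(1000, [10, 50])` gives `[1010, 1050]`. Once the block is loaded into RAM, the engine would do the same addition with the block's memory address as the base.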

-

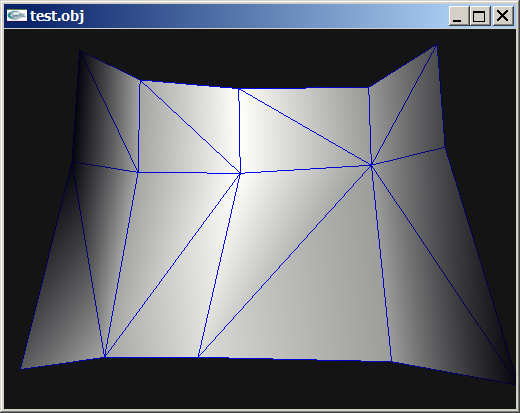

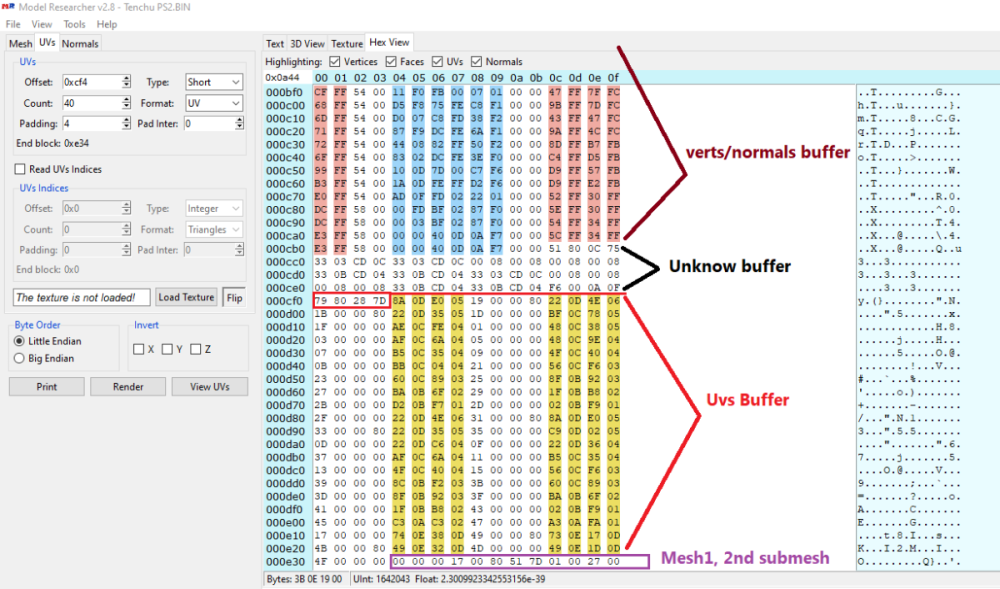

Wait, I think I did something wrong, lol. I can see another unknown block or buffer before the UVs. I thought that was the start of the UVs, but no! So the UVs have the same count as the verts after all. The format is:

verts/normals buffer: 3 shorts verts, 1 short flag, 3 shorts normals, 1 short flag
unk buffer: ??
UVs buffer: 2 shorts UVs, 4 bytes unk (maybe vertex colors?)

Well, it looks like that, but I am not sure. The meshes with floats are:

verts/normals buffer: 3 floats verts, 1 short flag, 1 short pad, 3 floats normals, 1 short flag, 1 short pad
UVs buffer: 2 shorts UVs, 4 bytes unk (maybe vertex colors?)

So meshes with floats don't have that unk buffer. And as always, each buffer has a 4-byte tag. Here is the last part of the 1st submesh; you can see that unk buffer before the UVs:

1 point
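
The buffer layouts above translate directly into `struct` formats. A minimal sketch, assuming little-endian data (PS2), signed shorts, and that each buffer's offset points at its 4-byte tag. The vertex count, the short-to-float scale and the contents of the unknown buffer are not given in the post, so values are returned raw and the caller has to locate the UV buffer's tag itself. All names here are hypothetical.

```python
import struct

# Per-vertex layouts as described in the post (little-endian assumed).
SHORT_VERT = struct.Struct("<3hh3hh")    # pos xyz, flag, normal xyz, flag -> 16 bytes
FLOAT_VERT = struct.Struct("<3fhh3fhh")  # pos xyz, flag, pad, normal xyz, flag, pad -> 32 bytes
UV_ENTRY = struct.Struct("<2h4B")        # u, v, 4 unknown bytes (vertex colors?) -> 8 bytes
TAG_SIZE = 4                             # every buffer starts with a 4-byte tag


def read_vertices(data, offset, count, use_floats=False):
    """Read `count` vertices from the buffer whose tag starts at `offset`.

    Returns (positions, normals, end_offset). Values are left raw because
    the scale of the short variant is unknown.
    """
    layout = FLOAT_VERT if use_floats else SHORT_VERT
    pos = offset + TAG_SIZE
    positions, normals = [], []
    for _ in range(count):
        fields = layout.unpack_from(data, pos)
        positions.append(fields[0:3])
        # The float layout has an extra pad short before the normal.
        normals.append(fields[5:8] if use_floats else fields[4:7])
        pos += layout.size
    return positions, normals, pos


def read_uvs(data, offset, count):
    """Read `count` UV entries from the buffer whose tag starts at `offset`."""
    pos = offset + TAG_SIZE
    uvs, extra = [], []
    for _ in range(count):
        u, v, *unk = UV_ENTRY.unpack_from(data, pos)
        uvs.append((u, v))
        extra.append(tuple(unk))
        pos += UV_ENTRY.size
    return uvs, extra, pos
```

For short meshes, `end_offset` from `read_vertices` lands on the unknown buffer, not on the UVs, so the UV tag still has to be found separately.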

-

Sometimes there's a second UV channel. Do you mean that? (In other cases one could also duplicate the special vertices to get the same count as the UVs, but I forgot how to do that... iterate through the faces, somehow.) Edit: found it, an answer from Daniol Dan: "thus each vertex has its own single UV coord". Not sure whether this will apply to your problem, though.

1 point
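
The "iterate through the faces" trick is usually done like this: every distinct (position index, UV index) pair a face corner uses becomes its own output vertex, so positions shared across a UV seam get duplicated and each vertex ends up with exactly one UV. A minimal sketch; the input format (triangles as triples of index pairs) and the function name are assumptions.

```python
def split_vertices_by_uv(positions, uvs, faces):
    """Give every vertex exactly one UV by duplicating shared positions.

    faces: list of triangles, each a tuple of 3 (position_index, uv_index)
    pairs. Returns (new_positions, new_uvs, new_faces); new_faces index
    both new arrays with a single index per corner.
    """
    remap = {}
    new_positions, new_uvs, new_faces = [], [], []
    for face in faces:
        tri = []
        for pos_idx, uv_idx in face:
            key = (pos_idx, uv_idx)
            if key not in remap:
                # First time this combination is seen: emit a new vertex.
                remap[key] = len(new_positions)
                new_positions.append(positions[pos_idx])
                new_uvs.append(uvs[uv_idx])
            tri.append(remap[key])
        new_faces.append(tuple(tri))
    return new_positions, new_uvs, new_faces
```

Using a dictionary keeps corners that share both position and UV merged, so only seam vertices are duplicated.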

-

I suspect the tool would require some minor modification, but yes, more or less.

1 point

-

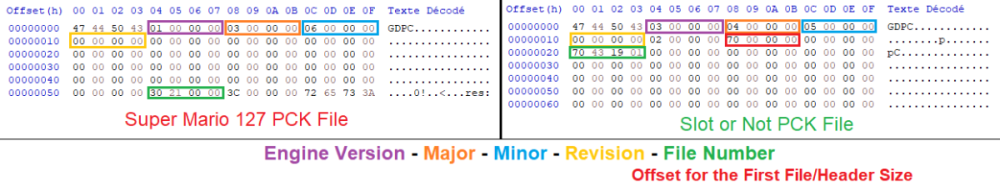

Hello! Out of boredom, I decided to replace a texture in a Godot game, in this case Slot or Not, using Python, but failing over and over again has exhausted me. My script replaces the data and its MD5 hash and changes the data header. I suspect that something doesn't work, or that there is other data somewhere. I also think that even if the WebP data from both Godot and PIL is valid, the two are processed differently and are therefore incompatible. Not even replacing them with PNG data works. I suspect there is a checksum for the PCK somewhere.

I compared its PCK file with Super Mario 127's. Although the WebP data for CTEX and STEX is the same, the data pointers in SM127 are at the start of the file, right after the header; in SoN they are at the end. The PCK for V1 is documented in the Xentax wiki, but V3 is not. The difference may be small, but this comparison shouldn't be dismissed.

What I need:
If there is a checksum for the PCK, where is it?
The bytes leading to the data header, to make the Python script adaptable
If the WebP data is the issue, a better understanding of Godot's WebP data

Feel free to reply if you find anything!

Slot or Not (PCK File V3): son PCK File.zip
Godot Picture Replacer Script (Work in progress): Godot Pictures Replacer Script.py

Note for the creator of Slot or Not: you don't have to turn it into a moddable game. The game needs its own resources to be a good game. I do this because why not, and for research purposes.

Knowledge

GODOT PCK FILE FORMAT (Little Endian) (From Slot or Not)

HEADER (112 Bytes)
4 Bytes = Signature ("GDPC")
4 Bytes = 03 00 00 00 (Pack Format Version)
4 Bytes = 04 00 00 00 (Major)
4 Bytes = 05 00 00 00 (Minor)
4 Bytes = 00 00 00 00 (Revision)
4 Bytes = 02 00 00 00
4 Bytes = 70 00 00 00 (First File Pointer)
4 Bytes = 00 00 00 00
4 Bytes = File Numbers
4 x 19 Bytes = 00s (Reserved)

DATA HEADER (Found at the end of the file)
4 Bytes = Length of Path, including the 00s
X Bytes = Path Name + 00s (padding if the length isn't divisible by 4)
8 Bytes = Data Pointer - 112
8 Bytes = File Size
16 Bytes = Data MD5 Checksum
4 Bytes = 00 00 00 00

GODOT CTEX FILE FORMAT (Little Endian)
For WebP Format {
4 Bytes = String "GST2"
4 Bytes = Use Alpha or Not (01 = Yes; 00 = No)
4 Bytes = Height
4 Bytes = Width
4 Bytes = 00 00 00 0D
4 Bytes = FF FF FF FF
4 x 3 Bytes = 00s (Reserved?)
4 Bytes = 02 00 00 00
2 Bytes = Height
2 Bytes = Width
4 Bytes = 00s
4 Bytes = 05 00 00 00
4 Bytes = Data Size after this field
4 Bytes = String "RIFF"
4 Bytes = WebP Data Size after this field
8 Bytes = "WEBPVP8L" (tag for lossless-encoded image data)
4 Bytes = Data Size (or Data Size - 1 if the WebP chunk ends with 00) (rest of the file)
X Bytes = WebP VP8L chunk (in Python, the settings are lossless = True, EXIF = False)
0-15 Bytes = 00s as padding if the CTEX data size isn't divisible by 16
}
[NOTE: You can get the WebP data by removing the GST2 header.]
For PNG Format {
X Bytes = PNG File Data
0-15 Bytes = 00s as padding if the CTEX data size isn't divisible by 16
}
X Bytes = Strings for [Remap], Settings, Path, Texture, Vram
X Bytes = 00s (if the length of the string isn't divisible by 16)

1 point
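
On the checksum question: in the layout documented above, the checksum that shows up is the per-file MD5 stored in each data header (directory entry). Below is a minimal sketch, assuming that layout exactly as written in the post (not verified against Godot's source). It parses one entry, checks its MD5 against the file's bytes, and patches the hash after a replacement. The function names are hypothetical, and finding where the directory starts is left to the caller.

```python
import hashlib
import struct

FILE_BASE = 0x70  # 112-byte header; entry offsets are stored relative to this


def read_pck_entry(data: bytes, pos: int):
    """Parse one directory entry starting at `pos`.

    Layout from the post: u32 padded path length, path bytes (NUL-padded
    to a multiple of 4), u64 offset relative to FILE_BASE, u64 size,
    16-byte MD5, u32 (00 00 00 00 here). Returns (entry, next_pos).
    """
    (path_len,) = struct.unpack_from("<I", data, pos)
    pos += 4
    path = data[pos:pos + path_len].rstrip(b"\x00").decode("utf-8")
    pos += path_len
    rel_offset, size = struct.unpack_from("<QQ", data, pos)
    pos += 16
    md5_pos = pos  # remember where the hash lives so it can be patched
    md5 = data[pos:pos + 16]
    pos += 16
    (flags,) = struct.unpack_from("<I", data, pos)
    pos += 4
    entry = {"path": path, "offset": rel_offset, "size": size,
             "md5": md5, "md5_pos": md5_pos, "flags": flags}
    return entry, pos


def md5_matches(data: bytes, entry: dict, file_base: int = FILE_BASE) -> bool:
    """Compare the stored MD5 with the MD5 of the file's actual bytes."""
    start = file_base + entry["offset"]
    blob = data[start:start + entry["size"]]
    return hashlib.md5(blob).digest() == entry["md5"]


def patch_md5(buf: bytearray, entry: dict, new_blob: bytes) -> None:
    """Write the MD5 of `new_blob` into the entry's hash slot."""
    start = entry["md5_pos"]
    buf[start:start + 16] = hashlib.md5(new_blob).digest()
```

If the replacement changes the file's length, the size field and the offsets of every later file also need updating, and this sketch does not do that. Running `md5_matches` on the untouched original PCK first is a quick way to confirm the offset base before patching anything.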

-

UModel is mis-parsing the package, usually because the game's UE2 fork/package format doesn't match what UModel expects with the current settings. To my knowledge, UModel works with this game, so your settings are not correct. I will elaborate using your error:

FArray::Empty: -116 x 12

UModel tried to allocate an array with -116 elements of 12 bytes each. A negative element count is impossible, so the total size comes out negative (-116 x 12 = -1392) and appMalloc blows up. UModel is reading the wrong structure at that position (e.g., it thinks this field is "NumVerts" but it's actually some other 32-bit value), because the package layout/engine profile doesn't match what UModel expects for "game=ue2" in this case. The rest of the stack confirms it dies while trying to load a skeletal mesh from that .ukx, not on textures or trivial data.

In easy-to-understand terms: "wrong assumptions about how this game's .ukx stores its skeletal meshes".

UModel should have a selection on the side where you can pick game targets. If the game isn't there, you might be out of luck. However, I think this game shares a target with another game; just use trial and error to see if any of them work. Also, if this is not a PC version of the game, you need to change "Auto" at the bottom right of the GUI to the specific platform. I know the topic says PC, but people do make mistakes.

Unreal Engine 2 games are few and far between, and not many people cared to work with any of them. Even The Wheel of Time game didn't get much investment. Also, turn off all textures/materials when first trying Unreal Engine 2 games, to speed up the process, especially with whack-a-mole trial and error. The only people who would even bother adding support for this game (which is extremely unlikely) are on Gildor's forum. Again, very few people would ever care about an Unreal Engine 2 or 1 game. I am sorry if this wasn't helpful.

1 point
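
To see how a count like -116 appears, assume (as the negative value in the message suggests) that UModel reads the count as a signed 32-bit integer. Four bytes that actually belong to some other field then decode to a negative number. The bytes below are a hypothetical example chosen to reproduce the message, not taken from the actual .ukx.

```python
import struct

# Hypothetical bytes: part of some other field that the loader
# happens to read as an element count.
raw = bytes([0x8C, 0xFF, 0xFF, 0xFF])

(count,) = struct.unpack("<i", raw)     # signed 32-bit little-endian
(unsigned,) = struct.unpack("<I", raw)  # the same bytes read as unsigned
element_size = 12                       # from "FArray::Empty: -116 x 12"
total = count * element_size

print(f"signed count: {count}, unsigned: {unsigned}, bytes requested: {total}")
```

Either reading is nonsense for a vertex count (negative, or over four billion), which is why the fix is the game/platform profile and not the file.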

-

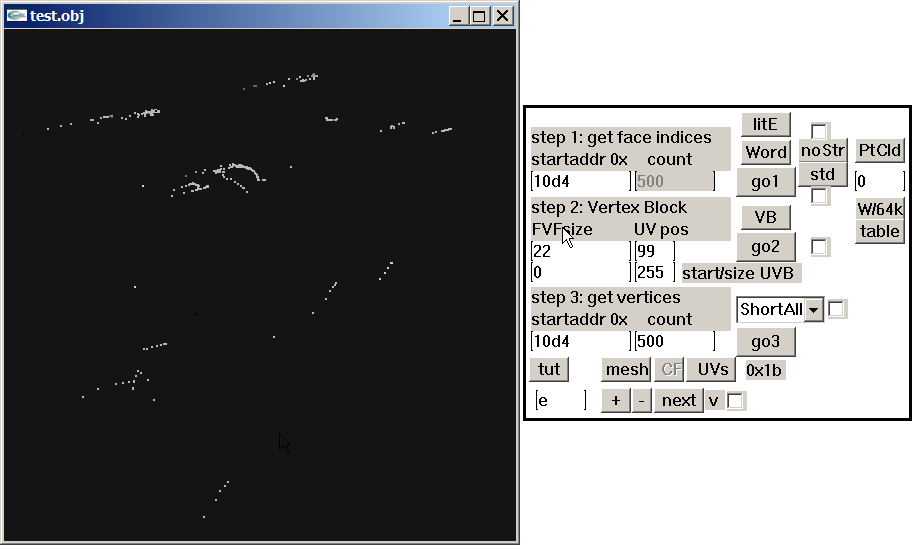

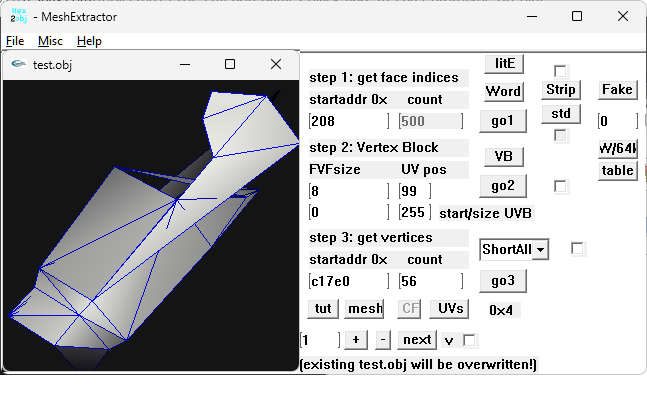

This is actually very helpful. Thank you. I saw the same repeating groups of ~10 unsigned 16-bit values and came to a similar conclusion. Constants like 0, 1632x (~0x3FCx), 21845 (0x5555), 39322 (0x999A), 43691 (0xAAAB), 52429 (0xCCCD), 56798 (0xDDDE) and 63488 (0xF800) are hex patterns typical of fixed-point/normalized values (fractions of 0xFFFF), which is exactly what you'd expect for compressed curves (rotations, maybe scales or tangents)... I am not 100% sure though. The step back you are seeing could be because multiple tracks/bones are interleaved via small index tables, or because there's a separate header that says "keyframe list starts at X for track Y". I have not figured it out. Also, I am only assuming; it is an educated guess. 2d72 1100 probably looks smoother because it is for the static pieces. That is also a guess; I don't really know. I haven't found a clean stride/format yet in any area to the extent that I was happy with any result. Thankfully, your poking proves it isn't baked matrices. However, it might have "junk" data inside it, or switch between two different formats on the fly, which would require a call/read from the engine the game was made with ("elf statements/running"). For the last two days I have been looking for a header or track and still haven't found one. I don't know the meaning ("definition") of each of the 10 values per entry (time? quat? s,t,r? tangents? flags?). I also haven't figured out how these numbers convert back to usable floats/matrices for a bone rig. You found the right haystack to look for the needle in, and I thank you for this. The repeated 16-bit values like 0x3FCx, 0x5555, 0xAAAB and 0xF800, and the way they change over "time", shed light on this. That matches my expectation that GARO stores proper animation curves rather than just baked matrices, which most people would have assumed because of the static model additive animations. Sorry for repeating myself here.

I have a client for this game who CONSTANTLY has me repeat some of this information over and over, and it has turned into a habit. Going back to the "step back" jumps you pointed out, I believe they show that the timeline might be split into several blocks (per-bone or per-channel segments) instead of one clean linear stream, which is probably why tools that only understand standard RWANM fail on this game. I don't have much experience with your viewer. The tools I use are far different, and I have been doing a lot of direct hex poking along with using RenderWare tools, so I'm still trying to figure out the parameters you showcase here. When you mention 2d72 1100 in step 3, is that essentially a stride/FVF setup you're using to visualize the data as a point cloud? That was my original assumption, but now I am second-guessing myself. Do you have any thoughts yet on how those 10-value records break down (e.g. time + rotation + something else), or on where the per-track headers might sit? You helped a lot with this and I am very thankful.

1 point
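
The fixed-point reading suggested above can be tried quickly with the two usual 16-bit conventions: unsigned values as a fraction of 0xFFFF (UNORM), and the same bits as signed values scaled by 0x7FFF (SNORM, common for quaternion components). A minimal sketch; which convention, if any, GARO actually uses is not established in the thread, and the function names are hypothetical.

```python
def u16_to_unit(value: int) -> float:
    """Unsigned 16-bit value as a fraction of 0xFFFF, in [0.0, 1.0]."""
    return value / 0xFFFF


def s16_to_unit(value: int) -> float:
    """Same 16 bits read as signed and scaled to [-1.0, 1.0] (SNORM style)."""
    signed = value - 0x10000 if value >= 0x8000 else value
    return max(signed / 0x7FFF, -1.0)


# The constants mentioned in the post.
for c in (0x5555, 0x999A, 0xAAAB, 0xCCCD, 0xDDDE, 0xF800):
    print(f"{c:5d}  0x{c:04X}  unorm={u16_to_unit(c):.4f}  snorm={s16_to_unit(c):+.4f}")
```

Under the UNORM reading, 0x5555 and 0xAAAB come out as almost exactly 1/3 and 2/3, which is what makes them look like fractions in the first place.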

-

The lvl file is essentially a zip-like container file holding a bunch of .rbm/.rba (CAFF) files, and the .rbm/.rba files are basically containers with the data for any given asset. E.g., models will include a model (header), scenegraph, textures, and may include additional things like hits/animation data packed inside too, if it's relevant to the model and/or not shared between a bunch of other models. If it is shared, it is usually packed inside the zpackage rbm files.

1 point

-

As I'm a good-for-nothing when it comes to 3D model issues, I can't tell if the unpack works, but studying the DB, I can see 2 types of TOC. Attached is the py script, if someone wants to take a look. Just drop the DB or bin files onto the .py, or double-click the .py: black_ps2_unpack.py

1 point

-

Version 1.0.0

3 downloads

This tool is a dedicated utility designed for modifying Konami games on the PlayStation 1 (PSX). It specifically manages .FAD files, which are proprietary archives used by Konami to store game data.

Key Features:
Unpacker: It allows you to extract the contents of .FAD archives, giving access to internal game assets such as textures, models, and audio.
Repacker: It enables you to rebuild valid .FAD files from extracted folders. This is crucial for inserting modified files back into the game ISO.
Compatibility: The tool supports a variety of Konami titles on the PS1 that use this specific file structure.

FadTool-Konami.zip

1 point

-

Hmm, did you know there's a hero here who figured out the trick for PS2? I couldn't bring myself to use his DLL, but I've firmly decided to tackle this, and I'll report the result as soon as I get it working. (If it works, there are several dozen PS2 projects I'd need to correct, and I fear the amount of work.) (My bet is that it has to do with changing the face winding, and I'd like to figure it out myself instead of using other people's DLL.)

1 point
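
If it does turn out to be winding, triangle strips are a common culprit: every second triangle in a strip has the opposite winding, so a converter has to swap two indices on odd triangles, and some formats also need the whole mesh flipped. A minimal sketch under that assumption. Whether these particular PS2 models use strips is not established here, and the function name is hypothetical.

```python
def strip_to_triangles(indices, flip=False):
    """Convert a triangle strip to a triangle list with consistent winding.

    Odd triangles in a strip are wound the other way, so they swap their
    first two indices. Degenerate triangles (a repeated index, often used
    to join strips) are skipped. flip=True reverses every output triangle.
    """
    tris = []
    for i in range(len(indices) - 2):
        a, b, c = indices[i], indices[i + 1], indices[i + 2]
        if a == b or b == c or a == c:
            continue  # degenerate triangle
        tri = (a, b, c) if i % 2 == 0 else (b, a, c)
        if flip:
            tri = (tri[0], tri[2], tri[1])
        tris.append(tri)
    return tris
```

Checking one model with `flip=False` and `flip=True` shows quickly which way the faces point. If neither looks right, the problem is probably elsewhere, for example in how strips are restarted.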

-

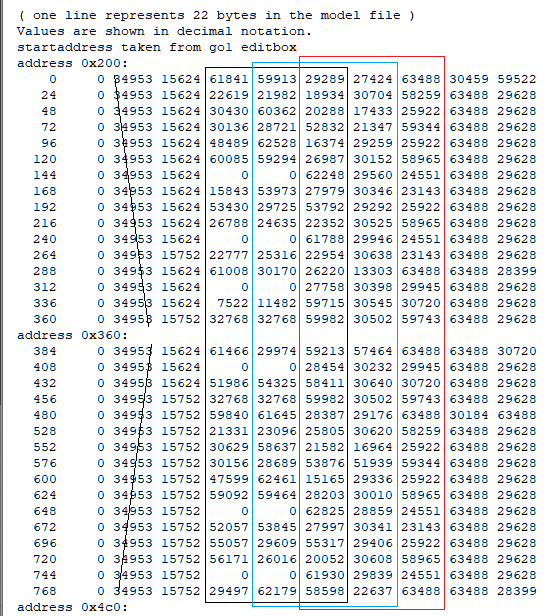

Sounds complicated. Anyway, in gar.rws.dec_be_-15_anim_77.rwanm I found parts which might be animation curves. But the timeline looks somehow cut into pieces. I'm not talking about gaps (which might be filled by interpolated frames, as you may know); it's these steps "back in time", haha.

address 0x10c8:
4392 0 48060 16315 0 0 61541 30111 24841 63488 62256
4416 0 48060 16315 32768 0 21047 30717 23725 63488 62256
4440 0 0 16320 53301 30676 22558 24629 63330 63488 63488
step back?
4152 0 26214 16358 0 0 24224 30693 29960 63488 62256
4176 0 0 16320 52246 24488 61447 30130 30720 63488 62256
4464 0 0 16320 29178 29826 41088 49358 58949 63488 62256
one single interruption?
2616 0 0 16320 19549 62621 53216 29139 26173 63488 62256
4512 0 0 16320 57603 60936 21535 30255 58578 63488 62256
4536 0 0 16320 0 0 61231 30230 24841 63488 62256
4560 0 0 16320 57771 18609 21233 30669 23725 63488 62256
4584 0 0 16320 54112 29811 54832 29180 26173 63488 62256
4608 0 0 16320 25814 28071 57693 30186 58578 63488 62256
4632 0 0 16320 0 0 61565 30096 24841 63488 62256
4656 0 0 16320 52935 22002 20388 30710 23725 63488 62256
4680 0 17476 16324 28679 28658 61447 61426 63420 30697 62911
one single int/ step back
3024 0 17476 16324 44238 15564 43253 30720 57885 63488 62256

address 0x1228:
4248 0 17476 16324 29178 29826 11892 49786 58949 63488 62256
4776 0 17476 16324 20137 62612 53593 29149 26173 63488 62256
4800 0 17476 16324 57236 61086 21117 30230 58578 63488 62256
4824 0 17476 16324 0 0 61456 30162 24841 63488 62256
4848 0 17476 16324 62036 28766 59130 28692 23725 63488 62256
4872 0 17476 16324 54297 29800 55031 29192 26173 63488 62256
4896 0 17476 16324 26484 27725 58682 30174 58578 63488 62256
4920 0 17476 16324 0 0 61606 30070 24841 63488 62256
4944 0 17476 16324 55822 24945 19244 30661 23725 63488 62256
4968 0 34953 16328 53047 30677 22461 24619 63330 63488 63488
step back
4704 0 17476 16324 52133 24362 61452 30130 30720 63488 62256
4752 0 52429 16332 28686 28644 61454 61412 63418 30697 62906
4992 0 21845 16341 10682 15408 39411 30720 57885 63488 62256
5016 0 39322 16345 29178 29826 43782 50136 58949 63488 62256
5040 0 34953 16328 20579 62599 53912 29163 26173 63488 62256
5064 0 34953 16328 56688 61223 20816 30203 58578 63488 62256

address 0x1388:
5088 0 34953 16328 0 0 61592 30079 24841 63488 62256
5112 0 34953 16328 62115 28717 61186 26846 23725 63488 62256
5136 0 34953 16328 54424 29790 55156 29204 26173 63488 62256
5160 0 34953 16328 27053 27110 59618 30138 58578 63488 62256
5184 0 34953 16328 0 0 61665 30032 24841 63488 62256
5208 0 34953 16328 57532 26626 19092 30549 23725 63488 62256
5232 0 34953 16328 52021 24237 61456 30129 30720 63488 62256
5280 0 52429 16332 53754 30675 55574 57291 63330 63488 60606
one single step back
4488 0 52429 16332 20774 62580 54116 29185 26173 63488 62256
5376 0 52429 16332 56853 61202 20694 30206 58578 63488 62256
5400 0 52429 16332 0 0 61788 29946 24841 63488 62256
5424 0 52429 16332 62044 28380 61696 26151 23725 63488 62256
5448 0 52429 16332 54547 29777 55268 29218 26173 63488 62256
5472 0 52429 16332 27445 26230 60149 30073 58578 63488 62256
5496 0 52429 16332 0 0 61728 29989 24841 63488 62256
5520 0 52429 16332 58316 27162 19461 30435 23725 63488 62256

address 0x14e8:
5544 0 39322 16345 52592 30677 22509 24635 63330 63488 63488
5256 0 21845 16341 52111 24340 61455 30128 30720 63488 62256
5568 0 0 16384 28686 28644 61454 61412 63326 30697 62825
5304 0 43691 16362 30693 57026 15938 9533 26173 63488 62256
strange
576 0 4369 16337 20851 62555 54153 29215 26173 63488 62256
5616 0 4369 16337 57604 60892 21068 30267 58578 63488 62256
5640 0 4369 16337 0 0 62039 29750 24841 63488 62256
5664 0 4369 16337 62168 28395 61422 26828 23725 63488 62256
5688 0 4369 16337 54672 29762 55334 29235 26173 63488 62256
5712 0 4369 16337 27496 25516 60464 30038 58578 63488 62256
5736 0 4369 16337 0 0 61802 29936 24841 63488 62256
5760 0 4369 16337 58935 27260 19496 30384 23725 63488 62256
5784 0 43691 16362 53606 30676 55498 57321 63330 63488 60606
5592 0 21845 16341 20844 62524 54074 29253 26173 63488 62256
5904 0 21845 16341 58154 60378 22229 30354 58578 63488 62256
5928 0 21845 16341 0 0 62292 29517 24841 63488 62256

address 0x1648:
5952 0 21845 16341 62256 28360 60963 27198 23725 63488 62256
5976 0 21845 16341 54773 29744 55367 29255 26173 63488 62256
6000 0 21845 16341 27366 25291 60654 30032 58578 63488 62256
6024 0 21845 16341 0 0 61886 29873 24841 63488 62256
6048 0 21845 16341 59449 27129 19091 30370 23725 63488 62256
6072 0 43691 16362 0 0 24688 30684 29960 63488 62256
step back
4224 0 21845 16341 19132 57034 61493 30107 30720 63488 62256
step back
3264 0 39322 16345 14059 16222 10554 30720 57885 63488 62256
5328 0 39322 16345 20817 62489 53965 29295 26173 63488 62256
6120 0 39322 16345 58505 59791 23174 30435 58578 63488 62256
6144 0 39322 16345 0 0 62518 29271 24841 63488 62256
6168 0 39322 16345 62322 28254 60582 27535 23725 63488 62256
6192 0 39322 16345 54856 29727 55388 29274 26173 63488 62256
6216 0 39322 16345 27173 25274 60786 30038 58578 63488 62256
6240 0 39322 16345 0 0 61964 29812 24841 63488 62256
6264 0 39322 16345 59711 26929 17414 30364 23725 63488 62256

address 0x17a8:
6288 0 39322 16345 52220 24462 61451 30128 30720 63488 62256
and so on
5832 0 43691 16362 19209 57095 61486 30110 30720 63488 62256
6336 0 56798 16349 15191 16672 12805 30720 57885 63488 62256
6360 0 56798 16349 29178 29826 46966 49975 58949 63488 62256
5352 0 56798 16349 20837 62449 53905 29341 26173 63488 62256
6384 0 56798 16349 58615 59023 23685 30505 58578 63488 62256
6408 0 56798 16349 0 0 62719 29013 24841 63488 62256
6432 0 56798 16349 62391 28041 60163 27919 23725 63488 62256
6456 0 56798 16349 54925 29711 55403 29292 26173 63488 62256
6480 0 56798 16349 27014 25159 60900 30044 58578 63488 62256
6504 0 56798 16349 0 0 62028 29759 24841 63488 62256
6528 0 56798 16349 59959 26765 47430 30348 23725 63488 62256
6552 0 43691 16362 52854 30676 22596 24625 63330 63488 63488

Edit: using 2d72 1100 in step 3 will give some more obvious animation curves, imho.
Edit 2: press 'mesh' to get the visualization (toggle 'PtCld' to 'noPtC' and press 'table' to get the timeline list).

1 point
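
One hypothesis worth testing, which is not established anywhere in the thread: the third and fourth columns may be the low and high halves of a single little-endian float32. Read that way, the pairs in the rows above give evenly spaced values 1/30 apart, which would fit a per-key time in seconds at 30 fps. The sketch below only performs that reinterpretation; the function name is hypothetical.

```python
import struct


def u16_pair_to_float(lo: int, hi: int) -> float:
    """Reinterpret two little-endian u16 values (low word first) as one float32."""
    return struct.unpack("<f", struct.pack("<HH", lo, hi))[0]


# Third/fourth columns taken from a few rows of the dump above.
pairs = [(48060, 16315), (0, 16320), (17476, 16324), (34953, 16328), (52429, 16332)]
for lo, hi in pairs:
    t = u16_pair_to_float(lo, hi)
    print(f"{lo:5d} {hi:5d} -> {t:.4f}  (x30 = {t * 30:.2f})")
```

Under this reading, the constants 0x5555, 0x999A, 0xAAAB, 0xCCCD and 0xDDDE discussed earlier would simply be the low halves of floats such as 1.6667, 1.7, 1.8333, 1.6 and 1.7333, not standalone fixed-point values.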

-

Hi, I was wondering if there is a way to open the .arc files for The Sims on the original Xbox. I'm curious to see if the archived files are in .IFF format. I tried using The Sims 2 .arc QuickBMS script, but to no avail. EDIT: Found a script that works; ironically, it was a Hulk .arc script. Thank you, ikskoks

1 point

-

Ok, thanks. You've mastered that format (besides the anims). I'll check the files tomorrow. (If I can't help, maybe someone else can, with all the files provided.) Good night.

Edit: well, being more of a "simple analyzer", I focused on the skeleton (21 bones?) in the dff file and gar.rws.dec_be_-15_anim_27.rwanm. I think the 5th column here could be the frame time in msec, with translation and rotation values to follow:

address 0x1b6:
30486 29945 63488 29628 58856 65209 0 0 51393 54393 57171 30685 30720 63488 29628 58856 65209 0 0 32768 32768 59982 30502 59743 63488 29628 58856 65209 34953 15752 0 0 0 30720 63488 63488 29628 0 0 34953 15624 61841 59913 29289 27424 63488 30459 59522 24 0 34953 15624 22619 21982 18934 30704 58259 63488 29628 48 0 34953 15624 30430 60362 20288 17433 25922 63488 29628 72 0 34953 15624 30136 28721 52832 21347 59344 63488 29628 96 0 34953 15624 48489 62528 16374 29259 25922 63488 29628 120 0 34953 15624 60085 59294 26987 30152 58965 63488 29628 144 0 34953 15624 0 0 62248 29560 24551 63488 29628 168 0 34953 15624 15843 53973 27979 30346 23143 63488 29628 192 0 34953 15624 53430 29725 53792 29292 25922 63488 29628 216 0 34953 15624 26788 24635 22352 30525 58965 63488 29628 240 0 34953 15624 0 0 61788 29946 24551 63488 29628 264 0 34953 15752 22777 25316 22954 30638 23143 63488 29628 288 0 34953 15624 61008 30170 26220

address 0x316:
13303 63488 63488 28399 312 0 34953 15624 0 0 27758 30398 29945 63488 29628 336 0 34953 15624 7522 11482 59715 30545 30720 63488 29628 360 0 34953 15752 32768 32768 59982 30502 59743 63488 29628 384 0 34953 15624 61466 29974 59213 57464 63488 63488 30720 408 0 34953 15624 0 0 28454 30232 29945 63488 29628 432 0 34953 15624 51986 54325 58411 30640 30720 63488 29628 456 0 34953 15752 32768 32768 59982 30502 59743 63488 29628 480 0 34953 15752 59840 61645 28387 29176 63488 30184 63488 528 0 34953 15752 21331 23096 25805 30620 58259 63488 29628 552 0 34953 15752 30629 58637 21582 16964 25922 63488 29628 576 0 34953 15752 30156 28689 53876 51939 59344 63488 29628 600 0 34953 15752 47599 62461 15165 29336 25922 63488 29628 624 0 34953 15752 59092 59464 28203 30010 58965 63488 29628 648 0 34953 15752 0 0 62825 28859 24551 63488 29628 672 0 34953 15752 52057 53845 27997 30341 23143 63488 29628 696 0 34953 15752 55057 29609 55317

address 0x476:
29406 25922 63488 29628 720 0 34953 15752 56171 26016 20052 30608 58965 63488 29628 744 0 34953 15752 0 0 61930 29839 24551 63488 29628 768 0 34953 15752 29497 62179 58598 22637 63488 63488 28399 816 0 34953 15752 0 0 28323 30266 29945 63488 29628 840 0 34953 15752 7817 11470 59999 30499 30720 63488 29628 864 0 34953 15752 29655 61689 27187 25651 63488 63488 30720 912 0 34953 15752 0 0 29009 29954 29945 63488 29628 936 0 34953 15752 52130 54326 59350 30587 30720 63488 29628 960 0 29287 16404 44589 16658 52439 //49164 29287 16404 44589 16658 9 16448 21 0 1 2

I checked 40 blocks with a size of 22 bytes, but none of the point clouds resembled an animation curve (although you can sometimes get some points in a line).

1 point

-

I am uploading all animations for the character gar here. I should have done that in the previous post, but it slipped my mind. So now you have a complete set of animations for one character. gar.rws.dec_be_-15_extracted.rar

1 point

-

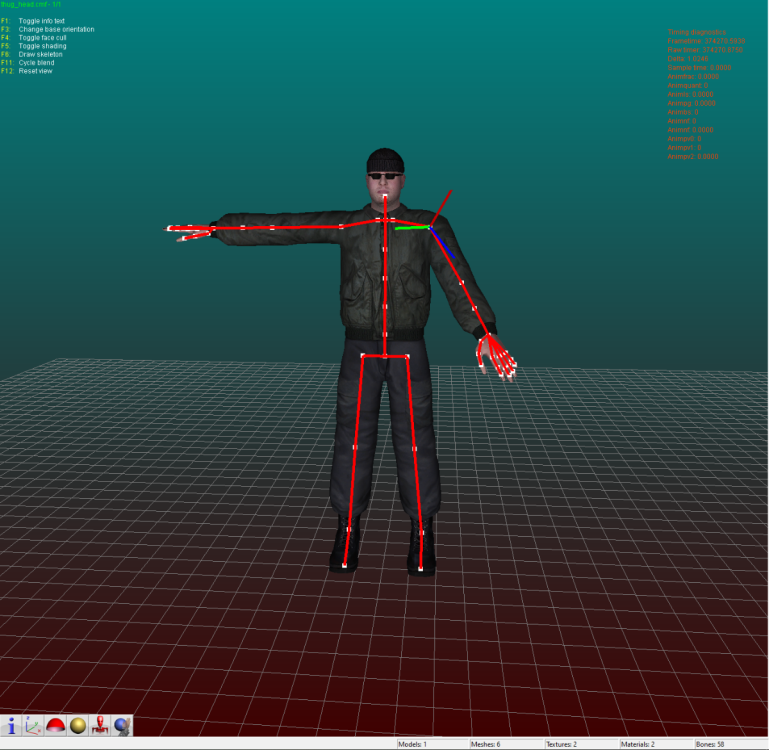

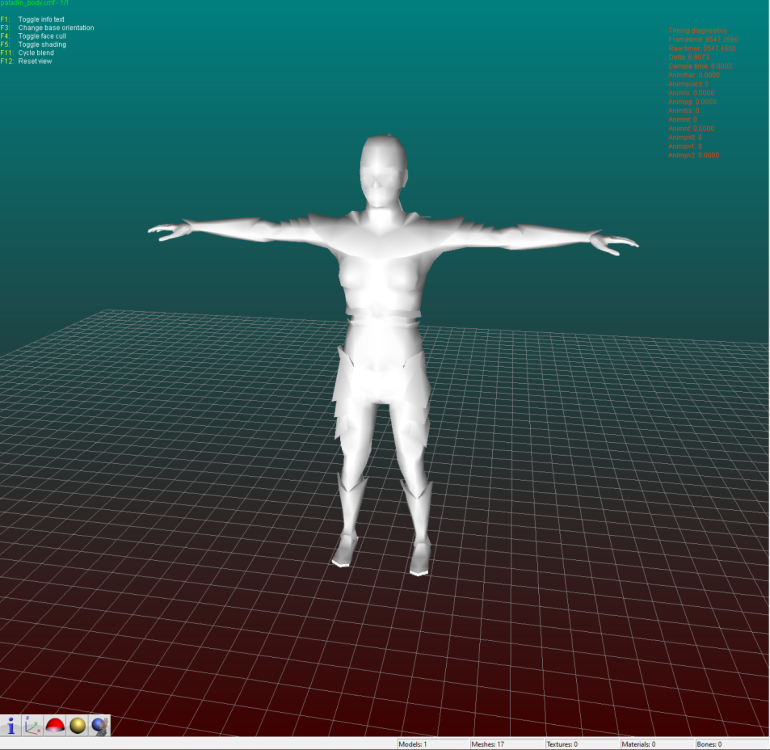

The armature is with the model. When you import the DFF using DragonFF in Blender, it will come with the armature. The bones are very small; you can use the side menu GUI to find the bones more easily. Models and skeletons are 100% workable. It is the custom RenderWare animation format that is the problem here. In the files I uploaded in the topic post, there should be two rwanm files; those are some of the animations. If you have the game, I can give you one of my scripts that will extract everything, so you can go through all the files for testing. I tried figuring this out through the elf file. Let me know if you want my script so you can poke at all the animations in the game. There are two different sets of animations per character, even if they don't have a weapon. The weapon-specific animations would be much easier to look at, since they are smaller. There are mot files that give some additional information, but for the most part they are irrelevant. The mot files for this game are more of a listing of animations and positioning for static model pieces, not the main animations. I am uploading the mot file so you can see it is mostly just for static pieces, along with the txt file for the character abbreviated gar, which lists all of his files, and the gmobj database text for it as well. I used a RenderWare tool (I forgot the name of it) that lets me look at how the files are structured; I'm sending you a section_tree.txt that was made by that program. I was offered a job to make a modding tool for this game that would include editing animations..... As it stands, I don't believe I will be able to finish it. I will just make everything I have for this game public if that happens. I'm adding two scripts as well. The gar_rws_test.py script does a simple test on the files to find the correct compression, so you can decompress basically everything.

The garou_rws_extractor is a slightly upgraded version that still needs work and is for extracting the models/animations. On aluigi's website there is a BMS script called ougon_kishi_garo. That would be your first step to getting all the files out. Then you will have to use the scripts I posted here. The unfinished one will have errors; the test one will work better.

gar_mot.rar gar_files_txt.rar gmobj_DB_Files_txt.rar Section_Tree_txt.rar gar_rws_test.py Garou_RWS_extractor.py

1 point

-

If there are any unreleased models, they should be pretty easy to find. AFAIK most (probably all?) of the package files have the same checksums as the retail game (dvddata\aid\zpackage). I can't say I've tested every single one, but for the ones I have, that has been the case. There are some other assets in the file system, but I don't know if any are different; e.g., dvddata\aid\bfdmodel\characters\SinglePlayerVehicles\MachineGunTurret is actually the model shown in my original post. They're not compressed, so you can easily use a texture viewer to see if anything looks interesting (almost all textures are DXT1/DXT5). Sometimes they're just the same model as the original but with a larger texture, e.g. 512x512 instead of 128x128. The demo version of Conker is an example of this as well: his texture in the demo is higher-res than in the retail game. If anything, most of the "easter eggs" are probably buried in the original/actual game and just go unnoticed. For example, in one of my chats with Uber Winfrey he pointed out that Berry's dresser has this on top of it: or the gargoyle statues: safe to say the Rareware guys had an interesting sense of humor.

------------------------------------------

As for any tool, the closest thing to it would be Uber Winfrey's Blender script. Most of my work has just been around documenting the structs/data, and while extracting some of the raw data is easy enough, there is still a ton of stuff that needs to be figured out. For example, animation data is still WIP, and you would probably need to translate the shaders for the model to look right (e.g. fur, cloth, shine), since it uses old VSH/PSH shaders instead of HLSL. They're precompiled, so they need to be decompiled from binary data, and then the shader params and what they're used for (and how) have to be figured out, etc. These aren't unsolvable problems, but it's definitely outside my skill set, which means even if I find time for it, it's going to be slow.

1 point
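
Since these textures are uncompressed in the file and almost all DXT1/DXT5, knowing the expected byte size of a texture helps when scanning a file in a texture viewer: DXT1 stores each 4x4 pixel block in 8 bytes, DXT5 in 16. A minimal sketch (function names are hypothetical; whether a given file stores mip levels is not stated here, so they are optional).

```python
def dxt_size(width: int, height: int, fmt: str) -> int:
    """Byte size of one DXT1/DXT5 mip level (data is stored in 4x4 blocks)."""
    block_bytes = {"DXT1": 8, "DXT5": 16}[fmt]
    blocks_w = max(1, (width + 3) // 4)
    blocks_h = max(1, (height + 3) // 4)
    return blocks_w * blocks_h * block_bytes


def dxt_size_with_mips(width: int, height: int, fmt: str, levels: int) -> int:
    """Total size of `levels` mip levels, halving each dimension per level."""
    total = 0
    for _ in range(levels):
        total += dxt_size(width, height, fmt)
        width, height = max(1, width // 2), max(1, height // 2)
    return total
```

For example, a 512x512 DXT1 texture is 131072 bytes against 8192 bytes at 128x128, so the higher-res variants mentioned above stand out clearly by size.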

-

There is a lot of overlap; in fact the Kameo alpha uses the exact same CAFF version as the Conker demo. The lvl files are just an archive/container of sorts, while the assets themselves are packaged inside individual "CAFF" containers. For example, the file struct is fairly basic for the LVL container itself (for ImHex):

    struct LVL_ENTRY {
        u32 unk_00;
        u32 *address : u32;
    };

    struct LVL_FILE_TABLE {
        u32 count;
        LVL_ENTRY array[count];
    };

    LVL_FILE_TABLE table @ 0x00;

They're similar in the sense that Conker stores the assets inside a "ZPackage" CAFF container instead of using an external container (.LVL) to store the individual CAFF assets. Unlike earlier games like GBTG, Kameo also stores a lot of data inside pushbuffer commands, including things like the triangles/shaders/shaderparams. But as far as models go, they're very similar (that's what I've spent the most time on); not sure about the other assets though.

1 point
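
For scripting outside ImHex, the same LVL table can be read with `struct`. A minimal sketch that follows the ImHex pattern above (u32 count, then count entries of u32 unk_00 and u32 address). It defaults to little-endian because the pattern sets no `#pragma endian`; pass `">"` for big-endian files. The `entry_spans` helper is a guess that assumes the CAFF blobs are stored back to back, which the post does not state. Both names are hypothetical.

```python
import struct


def read_lvl_table(data: bytes, endian: str = "<"):
    """Parse the LVL file table: u32 count, then (u32 unk_00, u32 address) entries."""
    (count,) = struct.unpack_from(endian + "I", data, 0)
    entry = struct.Struct(endian + "II")
    return [entry.unpack_from(data, 4 + i * entry.size) for i in range(count)]


def entry_spans(table, file_size: int):
    """Guess (address, size) per entry, ASSUMING entries are stored back to back."""
    addrs = sorted(addr for _, addr in table)
    ends = addrs[1:] + [file_size]
    return [(a, e - a) for a, e in zip(addrs, ends)]
```

If a span's data does not begin with the CAFF header, the back-to-back assumption is wrong for that file.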

-

When debugging function 0010D850, I found these filenames in the t0 register (after the decryption result; it also loads the filenames of files with the .JRS header into memory):

LOGO.JRS
MAINSCRIPT.JRS
SCENARIO.JRS
SCENARIO00_ROMA.JRS
SCENARIO00_ROMA_TGS.JRS
SCENARIO00_ROMA_TRIAL.JRS
SCENARIO01_EGOI.JRS
SCENARIO01_EGOI_TGS.JRS
RES/SCRIPT
RES/SCRIPT/SC
RES/SCRIPT/SC/00_ROMA
RES/SCRIPT/SC/01_EGOI
RES/SCRIPT/SC/02_TERO
RES/SCRIPT/SC/10_KAISOU
SCRIPT.SVL
etc...

The flow is:
SCRIPT.PTD (disk) → [AT GAME STARTUP] → Decompress YKLZ → Decrypt .JRS → Load into PS2 memory

---

Knowing this, I set a read/write/change breakpoint in the PS2 debugger after the initial file-loading process in RAM. In this case, I set it at 0056AC20 (which corresponds to `SCENARIO00_ROMA.JRS`), and as expected, this is the first dialogue shown in the Romantica route.

0011A010 (game_load_resource)
↓
0010DED0 (file_system_wrapper)
↓
0010DB58 (process_filename) → Converts "SCENARIO00_ROMA.JRS" to something
↓
0010DC48 (binary_search) → Searches table using 0018E4FC (optimized strcmp)
↓
0010DD98 (get_file_info) → Returns data_ptr (already in memory?)
↓
RETURNS to 0011A010
↓
......
00106800 (PROCESS .JRS?) → UNKNOWN
↓
001068D0 (FINISHES?) → Another unknown

---

FUNCTIONS
0010DC48 – Binary Search: searches for files in a master table sorted alphabetically (only to verify file calls are correct)
0010DD98 – Get File Info: returns data_ptr (already in memory), size, and flags
0010DED0 – File System Wrapper: orchestrates the search and retrieval process
0011A010 – Game Resource Loader: manages the memory pool and calls the entire system

---

The only thing left to do is trace the flow and see what gets called after the .JRS file is loaded (which is obviously to process and render the text). With the information on how the game processes and displays text, we can process the previously extracted files from script.ptd to view the Japanese dialogues. I need to rest my brain… haha

1 point
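
When reimplementing the lookup offline, the search at 0010DC48 can be mirrored with a plain binary search over a name-sorted table. Python's byte-string comparison orders like a classic strcmp, byte by byte. A minimal sketch; the table tuple layout (name, data_ptr, size, flags) mirrors what get_file_info is said to return, and the values in any table you build are up to you. The function name is hypothetical.

```python
def find_file(table, name: bytes):
    """Binary search over a table sorted by name, like the routine at 0010DC48.

    table: list of (name_bytes, data_ptr, size, flags), sorted by name_bytes.
    Returns the matching tuple or None.
    """
    lo, hi = 0, len(table) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        key = table[mid][0]
        if key == name:
            return table[mid]
        if key < name:      # bytes compare byte by byte, like strcmp
            lo = mid + 1
        else:
            hi = mid - 1
    return None
```

The search only works if the table really is sorted in the same byte order, which is worth checking against the names dumped from t0 (for example, "SCENARIO.JRS" sorts before "SCENARIO00_ROMA.JRS" because '.' is 0x2E and '0' is 0x30).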

-

AnimeWwise just closes instantly if you try it on there. The regular Wwise unpacker works: https://github.com/mortalis13/Wwise-Unpacker BeyondToolsMod-net9.zip

1 point

ResHax.com: Empowering Curious Minds in the World of Reverse Engineering

Delving into the Art of Code Unraveling: ResHax.com - Your Gateway to the Thrilling World of Reverse Engineering, Where Curiosity Meets Innovation!
